Misconfigurations are the number one security concern in the cloud.

That’s not surprising given that you’re moving faster in the AWS Cloud, building and deploying several times a day. On top of that, AWS releases new features and services at a furious pace.

This is what makes the cloud exciting to work with.

It’s also what creates the constant challenge of keeping up and keeping track of what’s actually going on. Is what you’ve deployed what you intended?

Traditional security approaches are broken…both technologically and culturally. You can’t build a strong perimeter when the perimeter no longer exists. You can’t force every deployment through a long review when you’re deploying ten times a day.

What’s come to the forefront in the past couple of years is the idea of guardrails. AWS talks about guardrails, the community talks about guardrails, but what exactly are they? How do you build them? How do they help you?

In this talk, we’ll walk through the process of prioritizing and building simple guardrails to help you and your team avoid misconfigurations and other common security pitfalls in the AWS Cloud.

We’ll use lightly filtered real world examples to show how we can use techniques you’re already familiar with to ensure that your builds in the cloud meet reasonable security standards with minimal effort.

Critically, we’ll do this without burdening your team or slowing down your builds…too much 😉.


What is the #1 security problem in the cloud?

It's not even organized cybercrime.

It's misconfigurations.

Builders expecting one behaviour and seeing another in production.

...but at their core, misconfigurations are really just mistakes.

Whether that's a mistake in not reading the documentation, not testing adequately, or simply misunderstanding the situation, it's a mistake.

The goal of cybersecurity is actually simple. Ignore the formal definitions; it really boils down to this...

To make sure that systems work as intended

Security Controls

At a very high level, there are two types of security controls.

- Detective

These controls find an issue and raise their hand saying, "I found this."

- Preventative

These controls find an issue and then take action to prevent further problems. They say, "I stopped this."

Now when a security control encounters something suspicious, it needs to come up with a determination.

Is this a problem?

Despite what you may think, there are three possible answers to this question.

Most security controls, and most people, think in a binary, yes/no space.

This is the foundational, allow/deny concept that's critical to security overall.

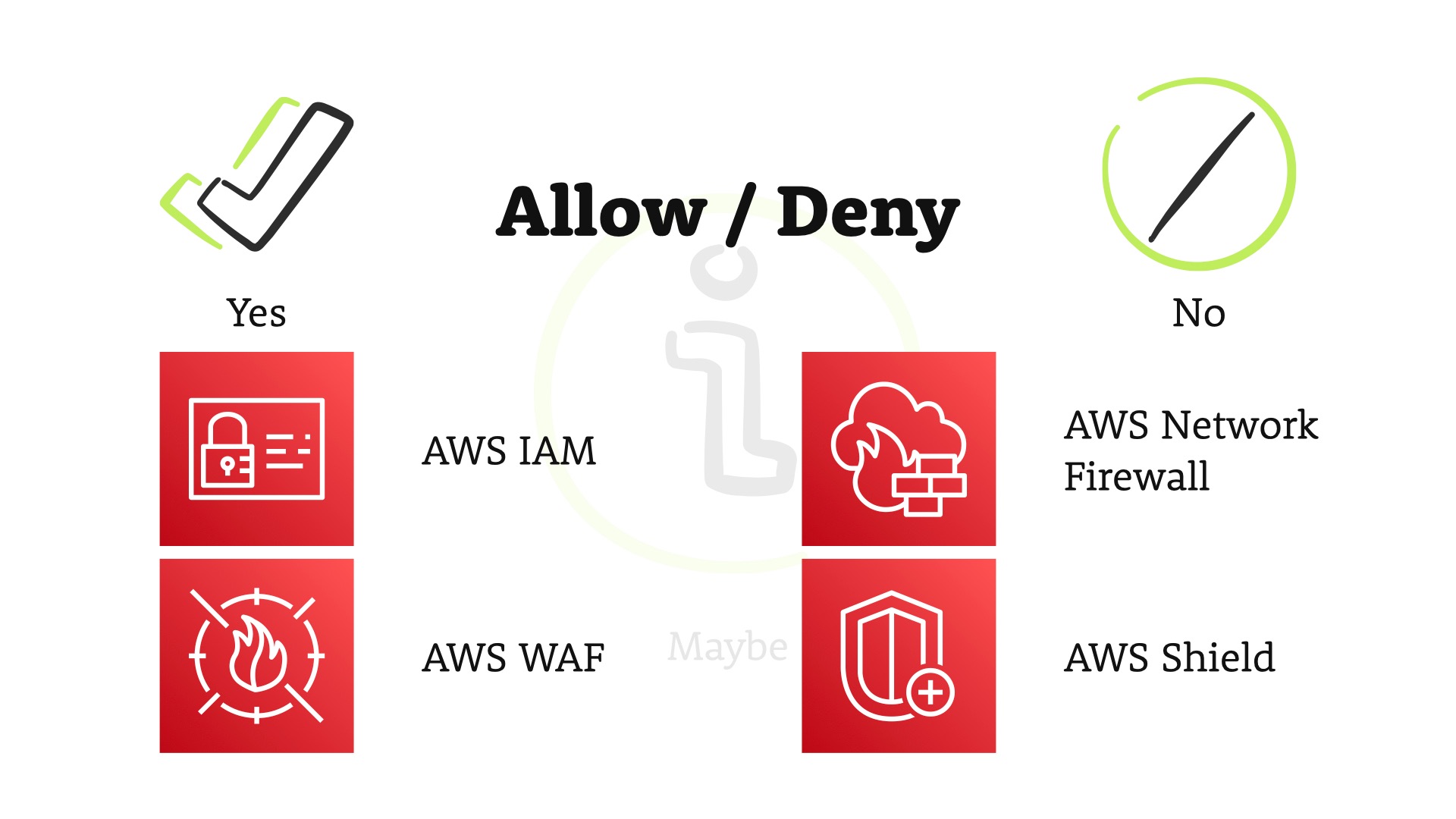

Several key services in the AWS Cloud are security controls that provide this type of yes/no answer.

...but what we want to focus on is that grey area.

The "maybes"

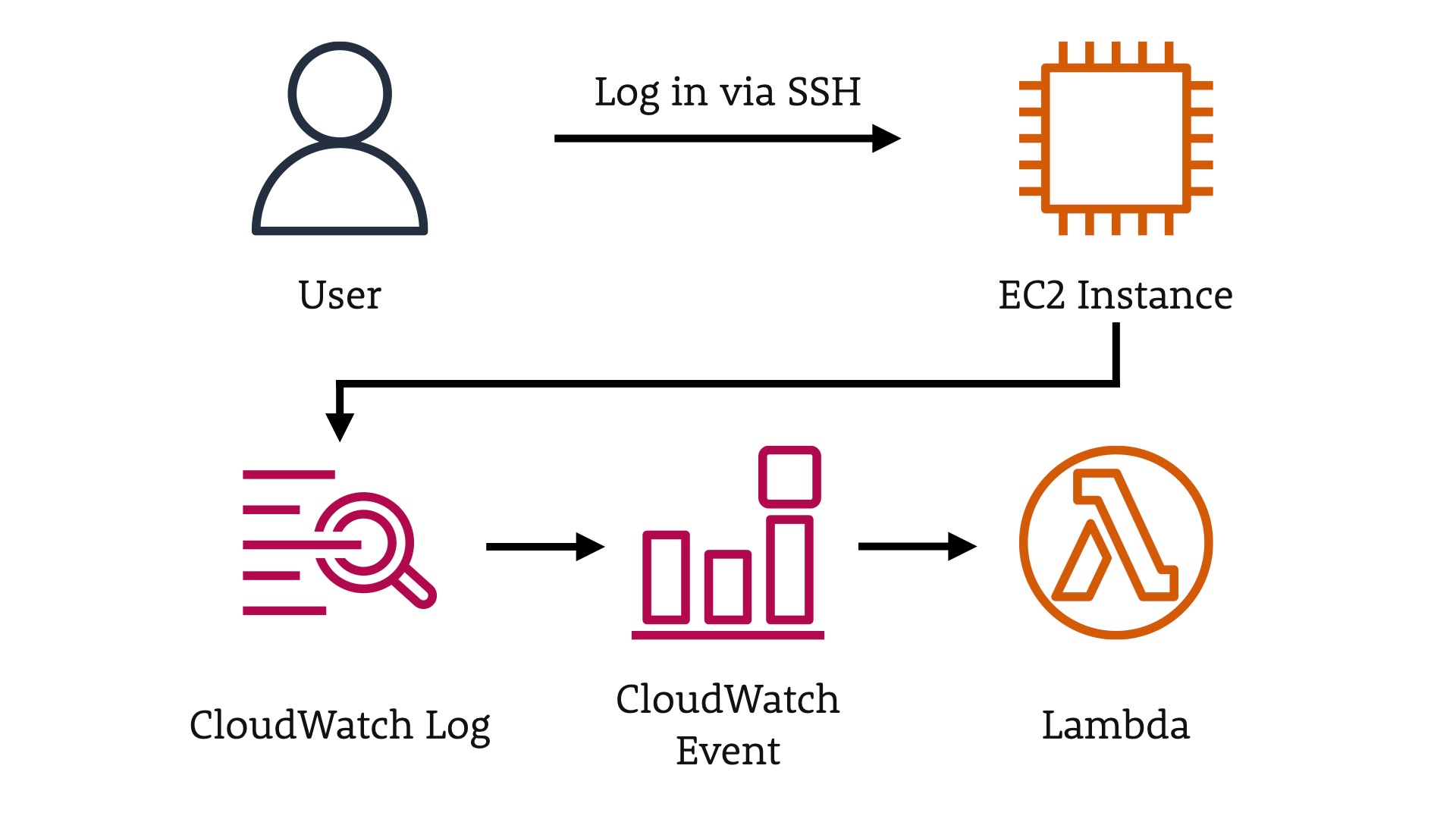
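To make the three answers concrete, here is a minimal sketch of how a control might route each one. The names `Verdict` and `route`, and the response labels, are made up for illustration and are not from any AWS service.

```python
from enum import Enum


class Verdict(Enum):
    """The three possible answers to "is this a problem?"."""
    YES = "yes"      # clearly a problem
    NO = "no"        # clearly fine
    MAYBE = "maybe"  # the grey area that guardrails focus on


def route(verdict: Verdict) -> str:
    """Pick a response for a verdict (labels are illustrative only)."""
    if verdict is Verdict.YES:
        return "deny"
    if verdict is Verdict.NO:
        return "allow"
    # Anything in the grey area goes to a person who has the context.
    return "ask"
```

The yes and no branches are what a traditional allow/deny control already handles. The last branch is the one the rest of this talk is about.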

Accessing an EC2 Instance

The scenario is simple: we have a user who is logging in to an EC2 instance.

On that instance, we have an AWS CloudWatch Logs agent running.

That agent is looking at the local system logs and forwarding various events to the AWS CloudWatch service.

In our case, we're interested in the SSH logs that show when users are logging in (or trying to) remotely.

From CloudWatch Logs, a CloudWatch Event is raised, which triggers our AWS Lambda function.

That Lambda function then looks up the user who logged in to the instance, finds them in Slack, and asks whether it was really them logging in to the EC2 instance.

If they say yes, they did log in, this is a chance to educate them that they might not want to make this a habit.

Ideally, we don't have anyone logging into production instances. We should be making changes and observing the system in other places.

If they say no, it wasn't them logging in, then we kick off an incident response process because we definitely have a security incident on our hands.
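Here is a rough sketch of what the first half of that Lambda function could look like. It assumes the function is invoked by a CloudWatch Logs subscription filter (which delivers a base64-encoded, gzipped JSON payload under `awslogs.data`), that the log stream is named after the instance ID, and that the logs contain standard OpenSSH "Accepted ..." lines. The helper names are hypothetical, and actually posting to Slack (and mapping a Linux user to a Slack user) is left out.

```python
import base64
import gzip
import json
import re

# Matches the OpenSSH success line, e.g.
# "Accepted publickey for ec2-user from 203.0.113.10 port 51234 ssh2"
ACCEPTED = re.compile(
    r"Accepted (?P<method>\S+) for (?P<user>\S+) from (?P<ip>\S+) port \d+"
)


def decode_logs_payload(event):
    """Unpack the base64 + gzip payload CloudWatch Logs sends to Lambda."""
    raw = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(raw))


def find_logins(payload):
    """Return one record per successful SSH login in the payload."""
    logins = []
    for log_event in payload.get("logEvents", []):
        match = ACCEPTED.search(log_event["message"])
        if match:
            logins.append({
                "user": match["user"],
                "ip": match["ip"],
                # Assumption: the agent names the log stream after the instance ID.
                "instance": payload.get("logStream", "unknown"),
            })
    return logins


def build_question(login):
    """The "was this you?" message we would send to the user."""
    return (
        f"Did you just log in to {login['instance']} as {login['user']} "
        f"from {login['ip']}? (yes/no)"
    )


def handler(event, context):
    # A real function would post each question to Slack here
    # instead of returning it.
    return [build_question(login) for login in find_logins(decode_logs_payload(event))]
```

Failed login attempts are ignored in this sketch. Depending on your appetite for noise, those could be a second guardrail of their own.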

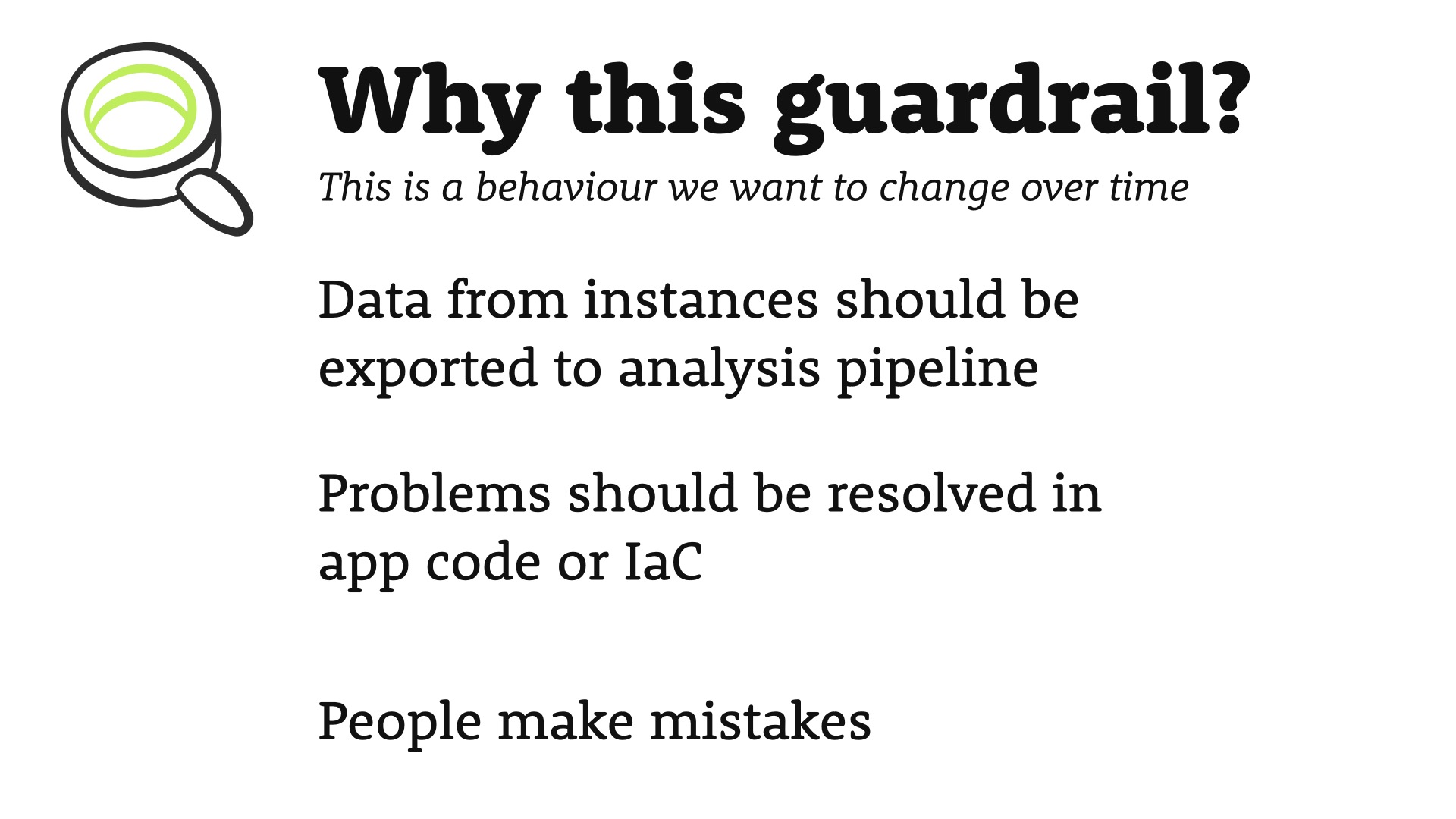

Why this guardrail?

- Data from instances should be exported to an analysis pipeline, not pulled by hand

- Problems with instances should be resolved in the application code or your infrastructure as code (IaC) templates

- People make mistakes. Remote access could increase the overall risk to your system

Too Many IAM Permissions

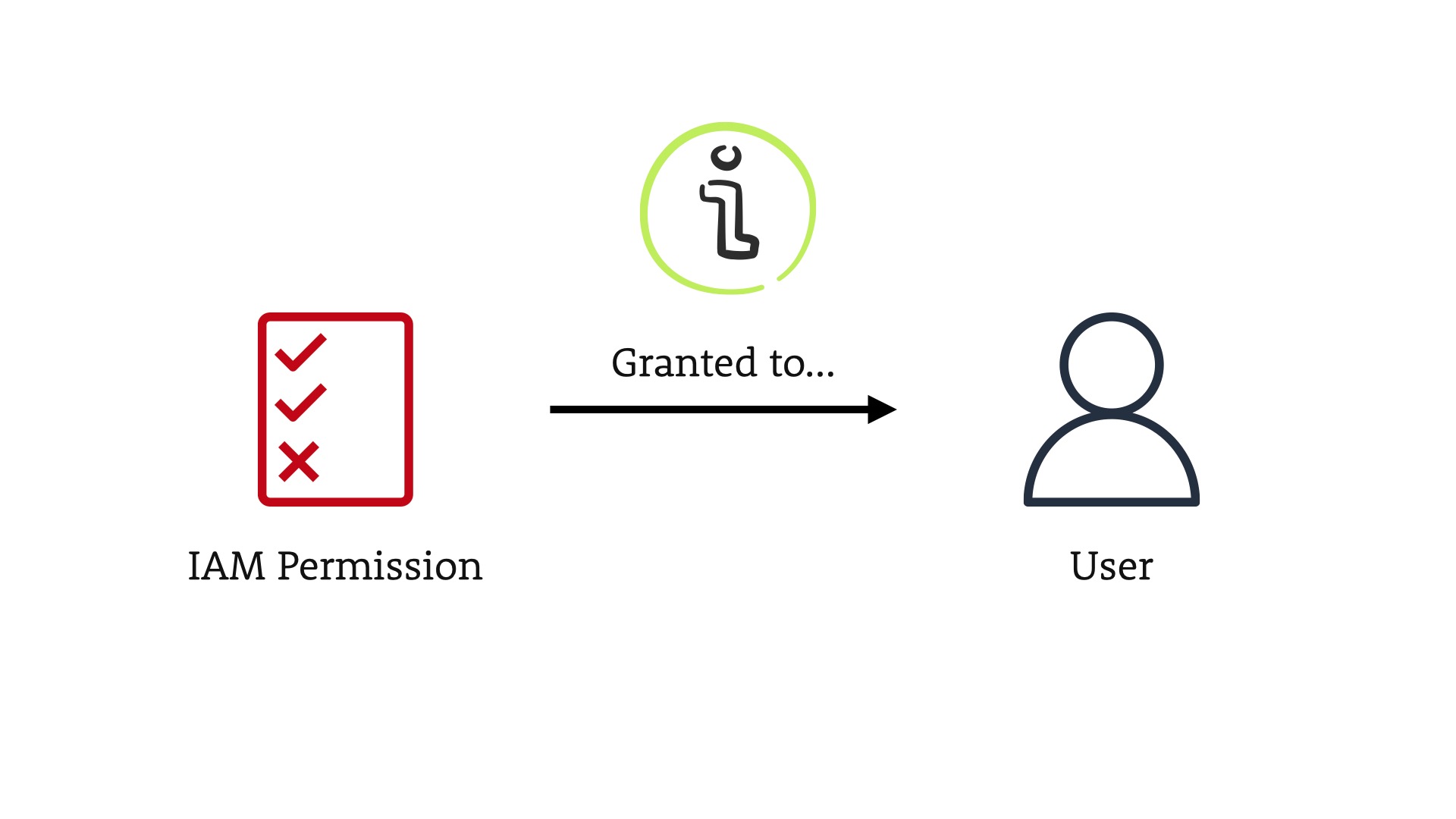

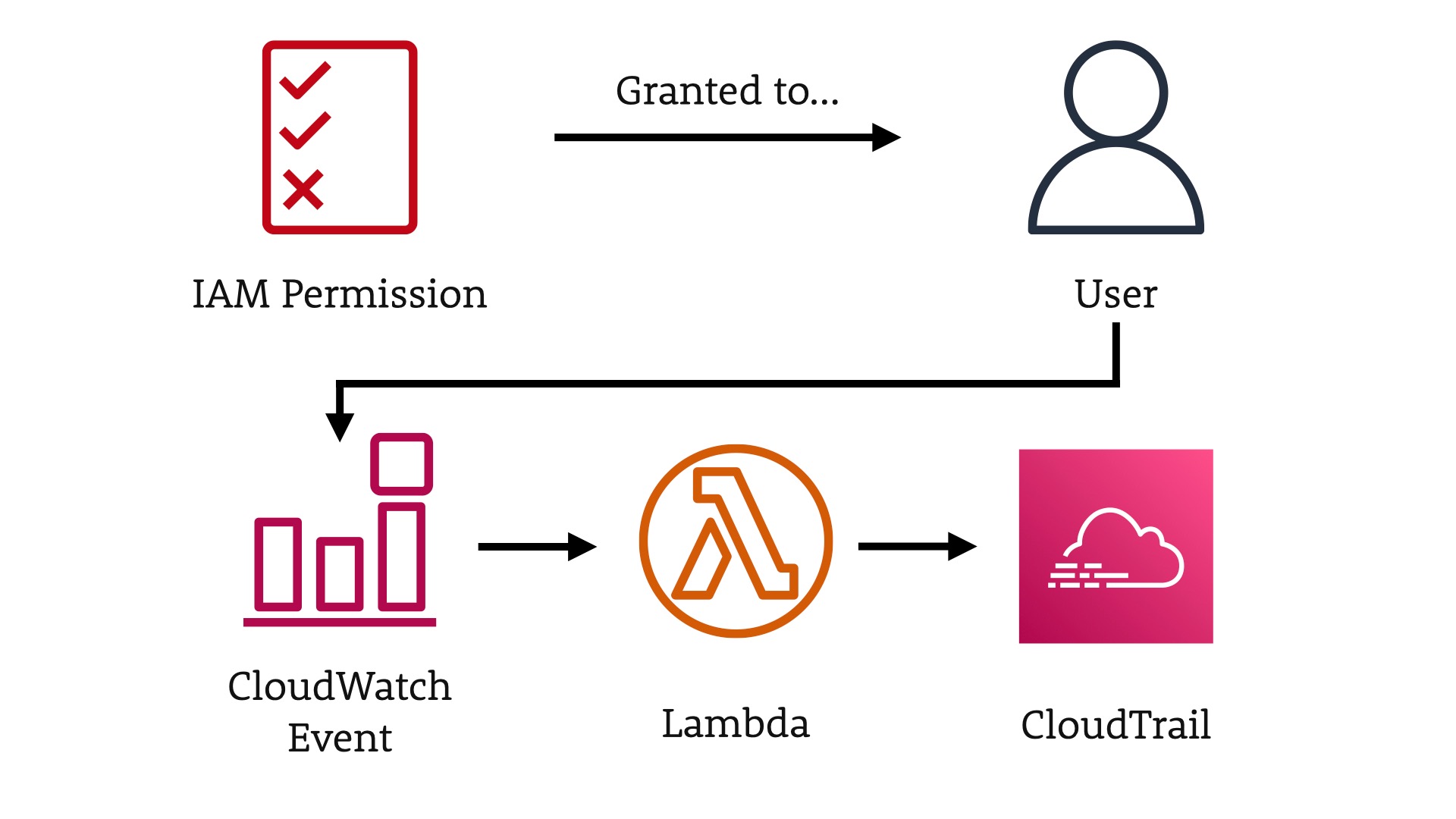

In this scenario, we have an IAM Permission being granted to a User.

When that happens, we'll monitor the CloudWatch Event and then trigger a new Lambda.

That Lambda is going to use the search feature of AWS CloudTrail to see what the user already has access to, what groups or roles might have similar access, etc.

The goal here is to help the user apply the principle of least privilege.

...and of course, we're going to bubble those findings up via Slack.

Again, we take the opportunity to educate the user in context, highlighting the current risk and potential methods of reducing/mitigating those risks.
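As a sketch of the logic inside that Lambda, assume the function receives an "AWS API Call via CloudTrail" event whose `detail` carries the usual CloudTrail fields (`eventSource`, `eventName`, `requestParameters`). The function names below are hypothetical. The list of recent activity is assumed to come from a CloudTrail lookup (for example boto3's `lookup_events`, which only covers roughly the last 90 days of management events); here it is simply passed in so the example runs on its own.

```python
# IAM API calls that grant permissions directly to a user
USER_GRANTS = {"AttachUserPolicy", "PutUserPolicy"}


def user_grant(event):
    """Return who was granted what, or None if this isn't a user-level grant."""
    detail = event.get("detail", {})
    if detail.get("eventSource") != "iam.amazonaws.com":
        return None
    if detail.get("eventName") not in USER_GRANTS:
        return None
    params = detail.get("requestParameters") or {}
    return {
        "user": params.get("userName"),
        # Managed policies carry an ARN, inline policies only a name.
        "policy": params.get("policyArn") or params.get("policyName"),
    }


def used_services(cloudtrail_events):
    """Collect the services a user has actually called, e.g. {"s3", "ec2"}."""
    return {
        e["EventSource"].split(".")[0]
        for e in cloudtrail_events
        if "EventSource" in e
    }


def build_message(grant, services):
    """The in-context nudge we would post to Slack."""
    seen = ", ".join(sorted(services)) or "no services"
    return (
        f"{grant['policy']} was granted directly to user {grant['user']}. "
        f"Recent activity for this user touched: {seen}. "
        "Could a group or role with a narrower policy cover this?"
    )
```

Comparing what was granted against what was actually used is only a rough signal, but it gives the user something specific to react to instead of a generic "least privilege" reminder.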

Why this guardrail?

- Permissions should usually be granted to groups, not users. It's a lot easier to manage that way

- Permissions are never really removed after being granted...despite everyone knowing better

- Roles are the best way to manage and think of the permissions required for a specific task

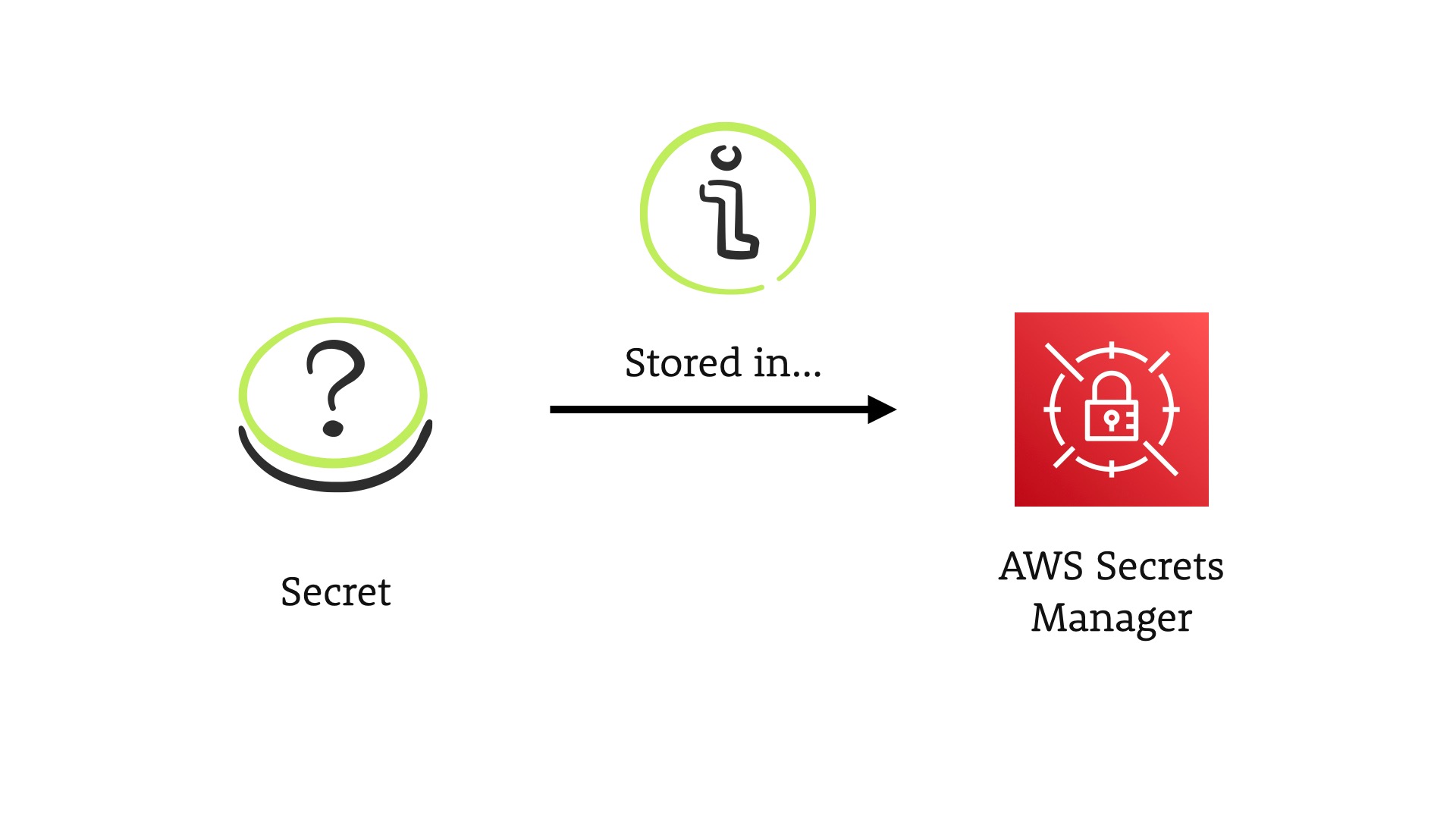

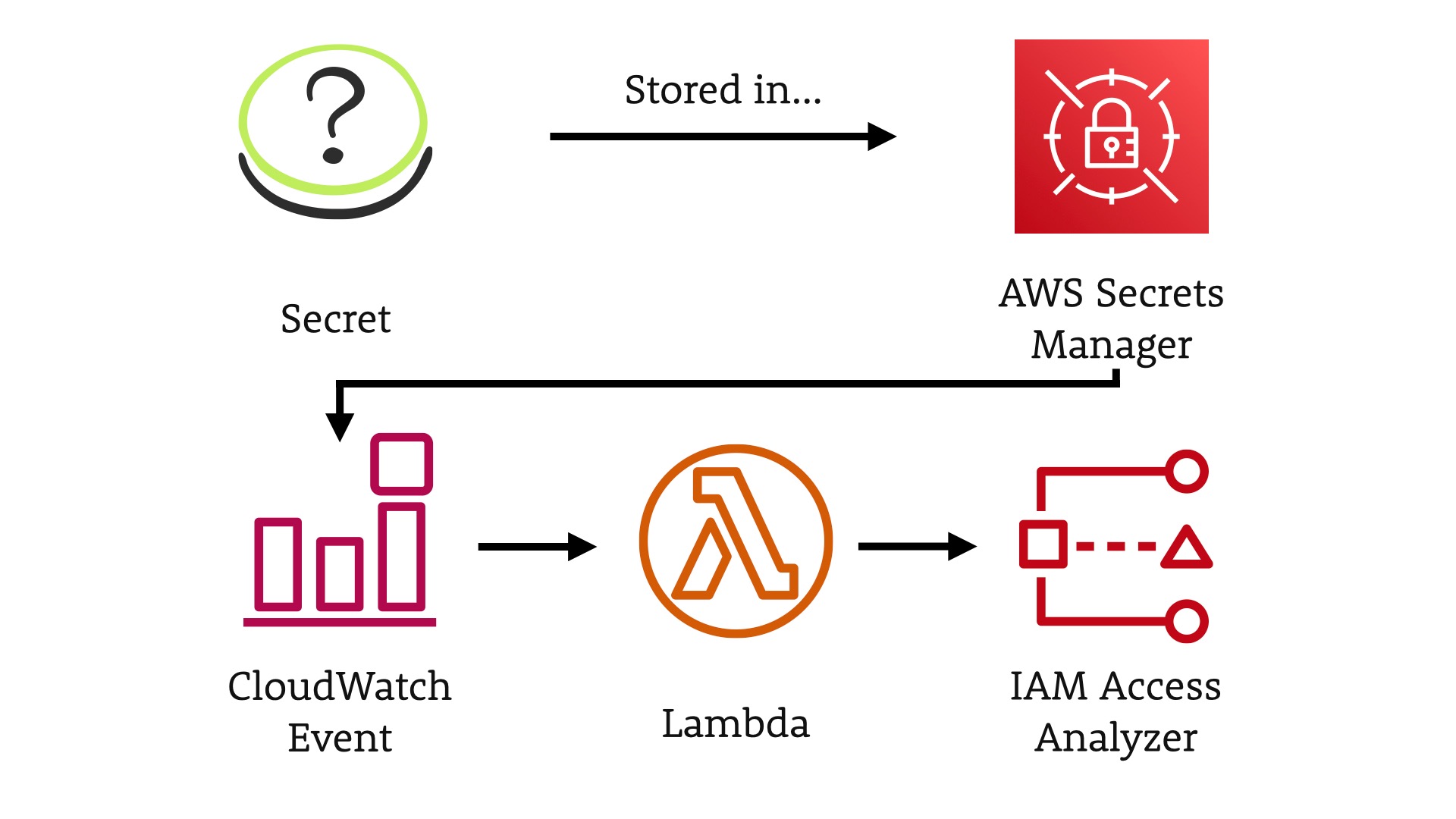

Open Secrets

This scenario tackles the challenge of keeping secrets...secret.

We have a secret of some kind that is being stored in AWS Secrets Manager.

When that secret is stored, we—again—capture the CloudWatch Event and then trigger a Lambda.

Then we use the IAM Access Analyzer to determine who and what can access the secret.

...did you see this one coming? We then use the opportunity to educate the user in context via Slack.

Where we send the messages isn't the key point here. This pattern is just as useful if your organization uses Microsoft Teams, Discord, Google Chat, email, or something else as its main method of communication.
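A small sketch of the reporting step might look like this. It assumes we already have a list of IAM Access Analyzer findings (for example from boto3's `list_findings`) as plain dictionaries with the `resource`, `status`, `isPublic`, `principal`, and `action` fields. Keep in mind that these findings describe access from outside the account or organization, not every principal inside it. The function name is hypothetical.

```python
def summarize_secret_access(secret_arn, findings):
    """Turn Access Analyzer findings for one secret into a chat message."""
    relevant = [
        f for f in findings
        if f.get("resource") == secret_arn and f.get("status") == "ACTIVE"
    ]
    if not relevant:
        return f"No external access found for {secret_arn}."

    lines = [f"{secret_arn} can be reached from outside its account:"]
    for finding in relevant:
        if finding.get("isPublic"):
            who = "anyone (public)"
        else:
            # principal looks like {"AWS": "arn:aws:iam::111122223333:root"}
            who = ", ".join(sorted(finding.get("principal", {}).values()))
        actions = ", ".join(finding.get("action", []))
        lines.append(f"- {who}: {actions}")
    return "\n".join(lines)
```

The returned string can go to whichever chat tool or inbox your team actually reads.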

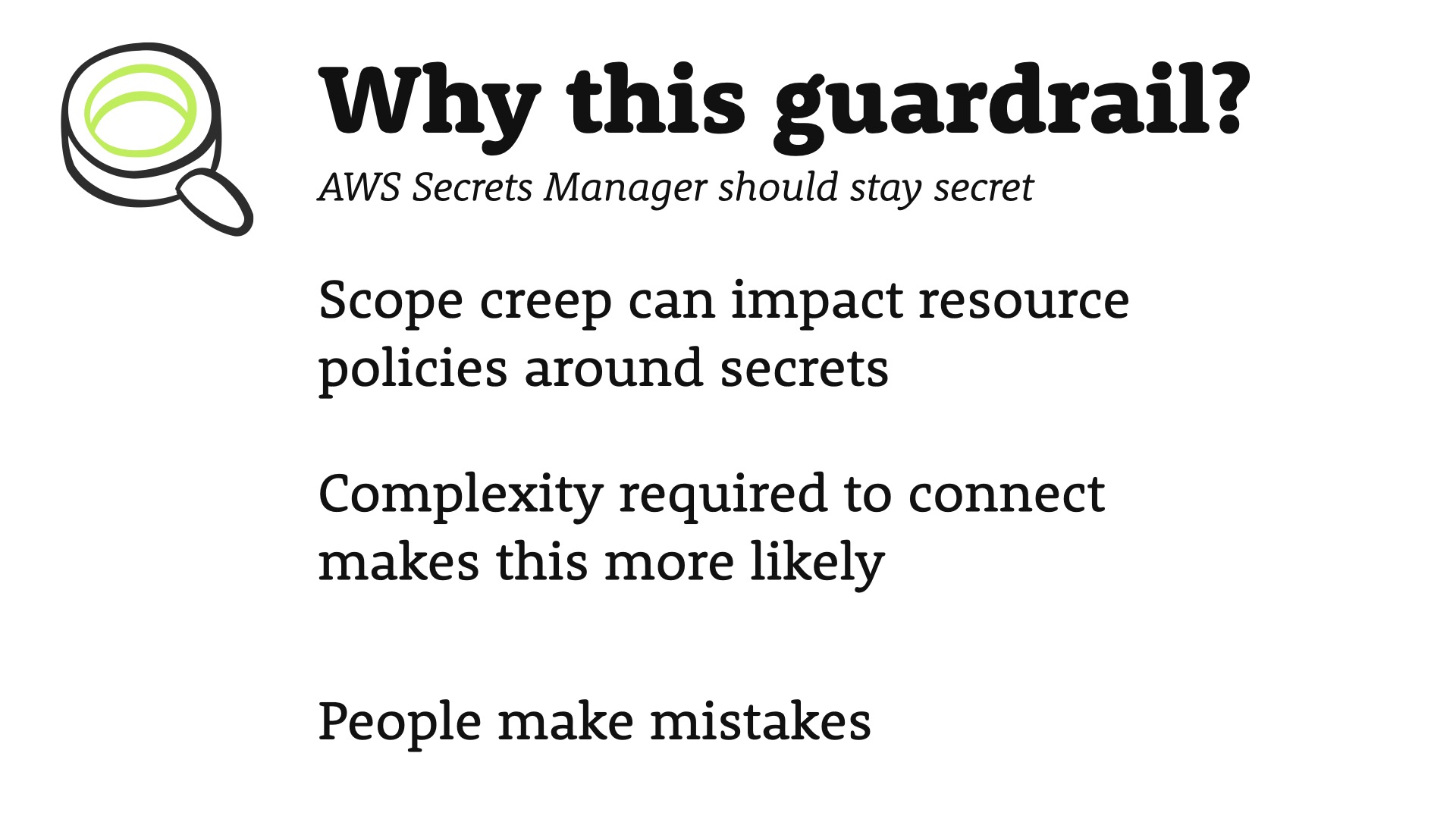

Why this guardrail?

- Scope creep is real! It will impact resource policies around secrets

- Wiring up Secrets Manager can be complicated, and that complexity leads to more mistakes and possible issues

- People make mistakes...it happens

Takeaways

Remember, there are three possible answers when figuring out if something is a security problem: yes, maybe, or no.

Guardrails tackle the maybe.

Guardrails are an opportunity to educate users in context. It's an opportunity to highlight risk and provide the information required to make an informed decision.

Remember, the biggest security challenge in the cloud is misconfigurations.

Strip away all of the phrasing and misconfigurations are simply mistakes.

People make mistakes.

It happens. It will always happen. Guardrails are a great tool to help you educate and inform in order to help reduce the possibility of mistakes happening and then to reduce the impact of the mistakes that do happen.

Thank you!

If you'd like to discuss this more, please reach out on Twitter, where I'm @marknca.