This talk was presented as a keynote at SecTor 2018 in Toronto, in October 2018, to an audience of more than 1,300. I sat down with Danny Bradbury during the conference to discuss the key ideas of this talk.

You can watch the keynote on the SecTor website.

Criminals are winning the battle against security practitioners. Need proof? Look no further than the news headlines in any given week.

Billions of dollars are being spent on the latest and “greatest” tools, and millions of person-hours are being exhausted in the defence of our data. Yet with all this effort, it remains trivially easy for most criminals to compromise systems. After decades of practice, we, the security community, seem to be no further ahead. In fact, a strong argument can be made that we’re falling behind… rapidly.

Why? If you ask any cybersecurity professional how to defend an organization, you’ll get answers that maintain the status quo, and excuses citing how good cybercriminals are. Rarely do the answers point to organizational structure, internal incentives, or working with the rest of the organization.

So why don’t we question the current approach, despite mounting evidence of its failure, and learn from those mistakes?

In this talk, we’ll explore those failures, the reasons behind them, and what steps we can take to correct them. No one is naive enough to believe there’s a magical solution to cybersecurity, but by asking if a different approach could be more effective, we may just find a more successful way forward!

Slides

Unlike other talks on the site, I've presented the full transcript with the slides here. This talk was a really special one for me and I wanted to showcase it here.

This is not a technical talk.

This is not a talk that’s gonna give you a ton of solutions, right? That may be surprising, but this is a talk that’s designed to help you think a little bit differently, and maybe point out some things affecting your day-to-day work life that you weren’t aware of.

And that could be challenging. That can be really difficult to hear. Sometimes it’s difficult to deliver. With that, I wanna give you a bit of a warning. There’s very emotional content in this presentation. You may feel these types of things.

You may get angry. That’s okay.

You may be shocked. You may be a little bit sad. That’s fine. Okay? That’s totally natural. It’s totally understandable. By the end of it, I hope we can have a bit of a shared understanding and maybe reach agreement that we need to change some of the ways we work.

Okay? I assume your complete and utter tumbleweed silence is agreement and understanding.

That being said, I think cybersecurity is a fantastic area. It’s a fantastic discipline. It is absolutely full of potential, right?

We are a rocket ship. We are just soaring full of potential.

We are more important than ever.

Unfortunately (and I include myself in this, since I am, obviously, a security professional by trade), we are taking this rocket ship and driving it straight into the ground.

That, as you can imagine, is not a positive thing.

Very few people driving rocket ships are targeting the ground on the way up, right? So why? How are we here? How are we taking all of this potential and squandering it?

Now, we’re not doing it on purpose. Nobody gets up in the morning and says, “You know what? I’m gonna mess up today.” Or, “I’m gonna make really poor decisions.”

There’s been a series of things that have happened to our profession as it has grown up that have led us to this point. And we’re gonna explore some of that today.

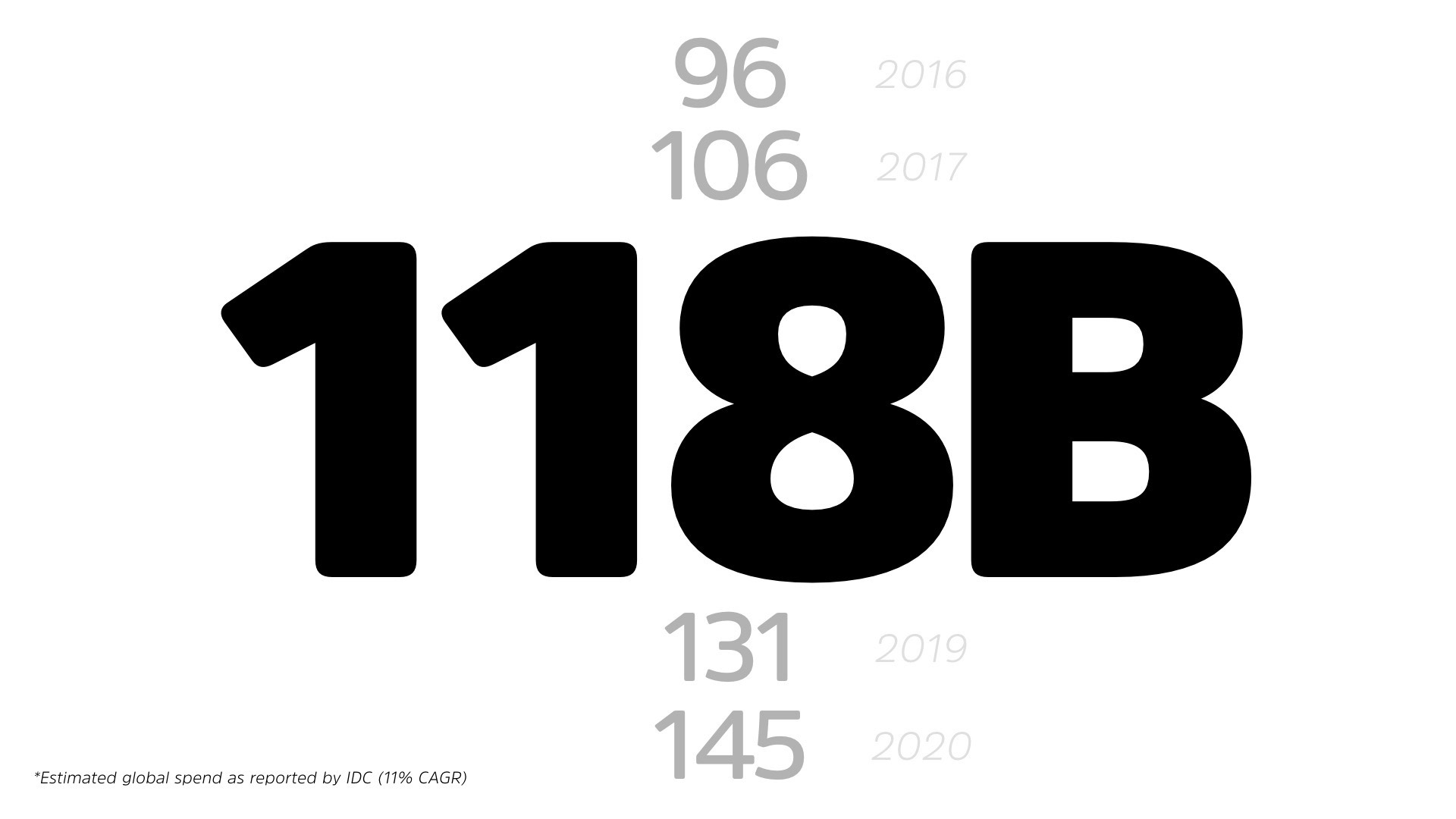

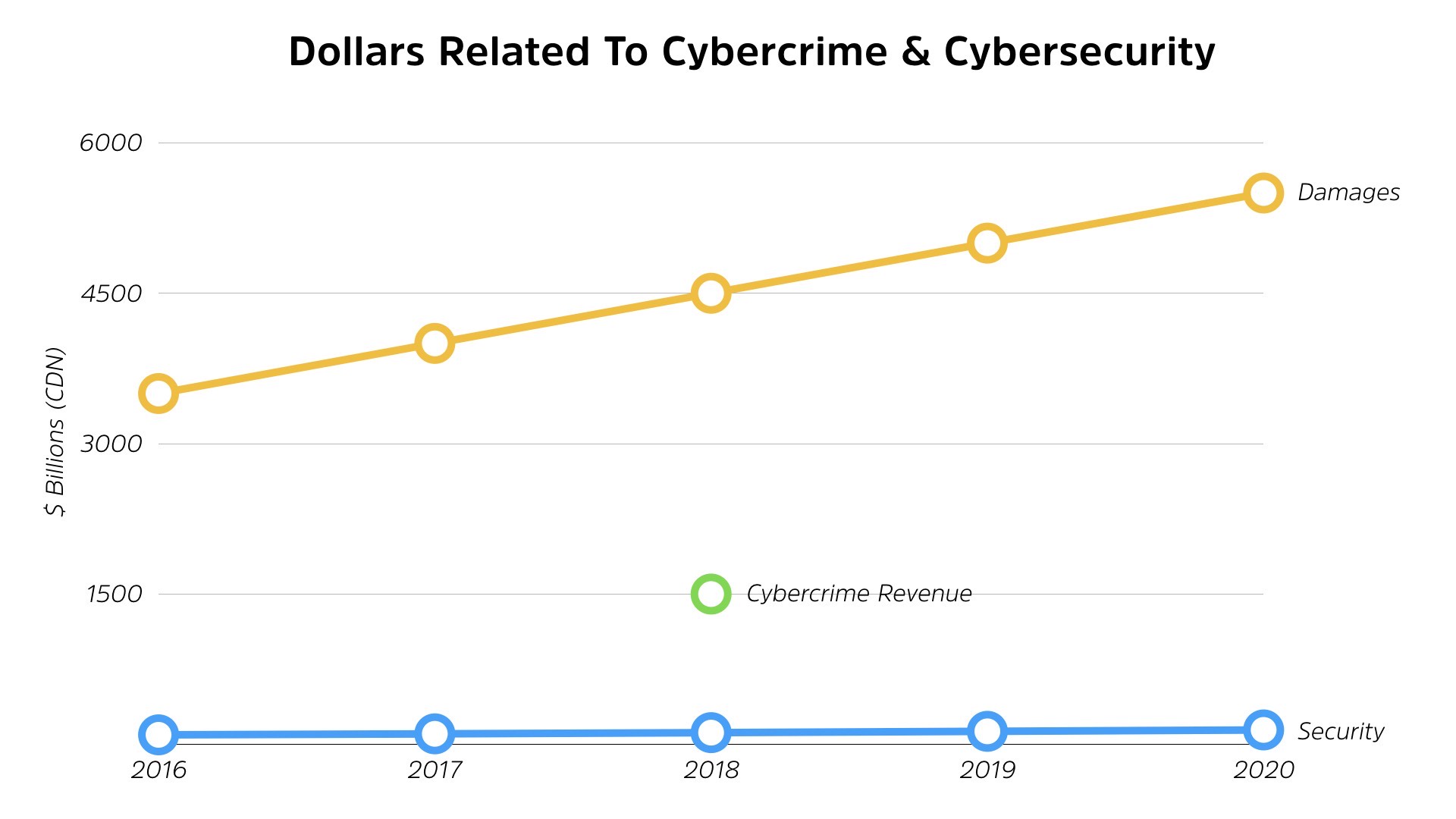

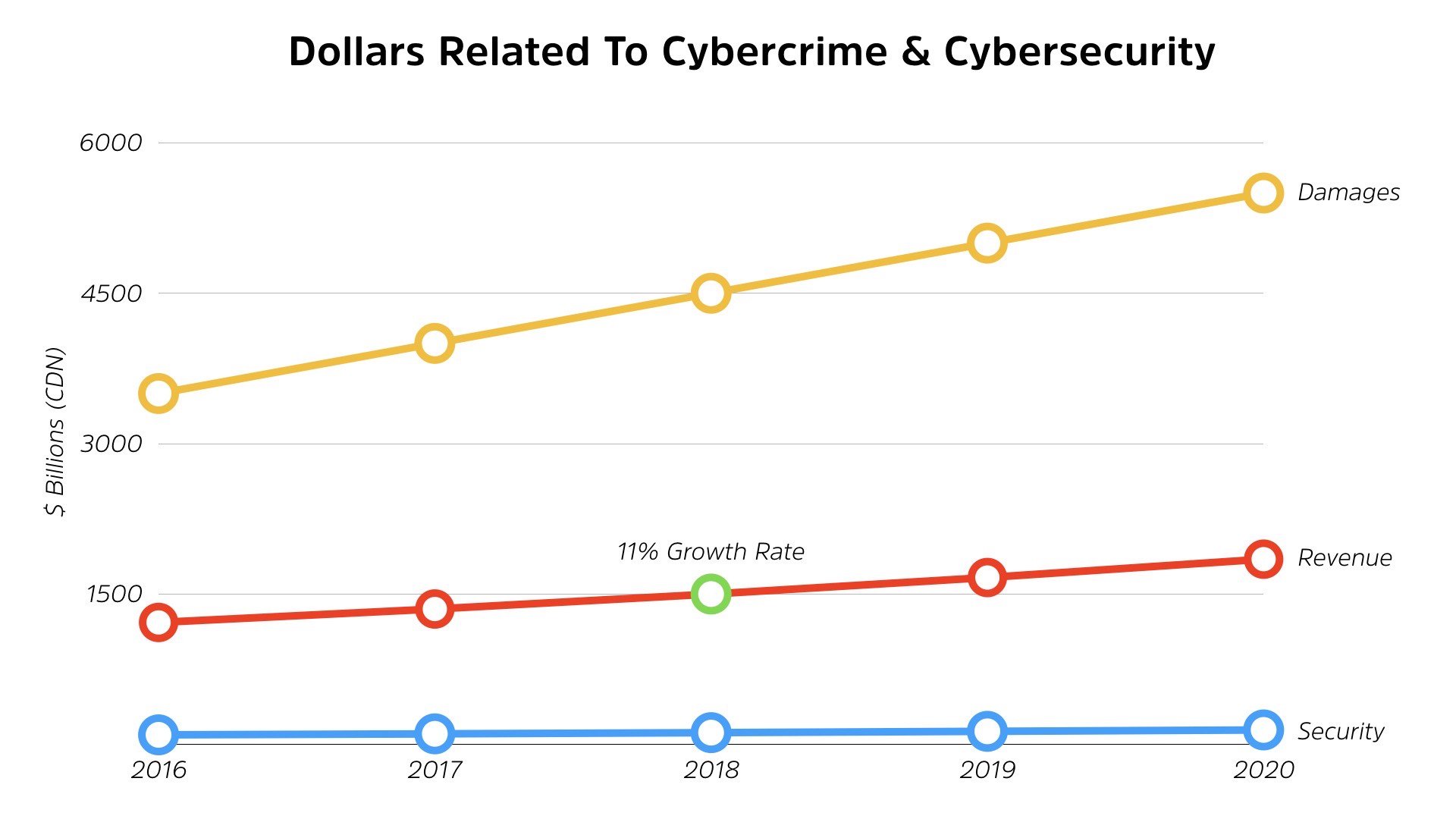

First, I’m gonna give you a number: $118 billion, Canadian. That’s the projected global cybersecurity spend this year. It’s a lot of money, right?

So globally, not just in Canada, we’re gonna be spending about 118 billion. This has been increasing steadily, growing by about 11% compounded year over year.

So, in 2016, it was about 96 billion. And by 2020, we’re projecting about $145 billion. So that’s what companies globally are spending on cybersecurity.
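As a quick sanity check on those figures, here is a minimal Python sketch. It assumes a constant 11% compound annual growth rate from the $96 billion 2016 baseline. Holding the rate constant is a simplifying assumption and is not the analysts' forecasting model, but the results land close to the ~$118 billion (2018) and ~$145 billion (2020) figures quoted above.

```python
# Minimal sketch: project global cybersecurity spend (billions) assuming a
# constant compound annual growth rate. The 2016 baseline and ~11% rate are the
# talk's figures; holding the rate constant is a simplifying assumption.

BASE_YEAR = 2016
BASE_SPEND = 96.0  # billions, 2016
CAGR = 0.11        # ~11% compound growth per year


def projected_spend(year: int) -> float:
    """Projected spend in billions: base * (1 + rate) ** years_elapsed."""
    return BASE_SPEND * (1 + CAGR) ** (year - BASE_YEAR)


if __name__ == "__main__":
    for year in range(BASE_YEAR, 2021):
        print(f"{year}: ${projected_spend(year):.0f}B")
```

Because each year's 11% is applied to a larger base than the year before, the annual increase itself grows over time.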

And the fact that this number is increasing is a very good thing, right? It shows awareness. It shows that companies are investing in cybersecurity and trying to resolve the problems that we all tackle day to day, right?

Professionally, this is a very good thing for us. Our discipline is growing, right? The problem is, there are other numbers that are growing just as fast, if not faster.

Here’s another number: 1.5 trillion. So we’ve gone an order of magnitude higher here. This is the current estimated global revenue for cybercriminals: they were making about one-and-a-half trillion dollars. This comes from a study sponsored by Bromium.

The academics who did it did their best job of compiling various data sources from law enforcement around the world to try to figure out how much cybercriminals were bringing in.

So $1.5 trillion. It’s a lot of money.

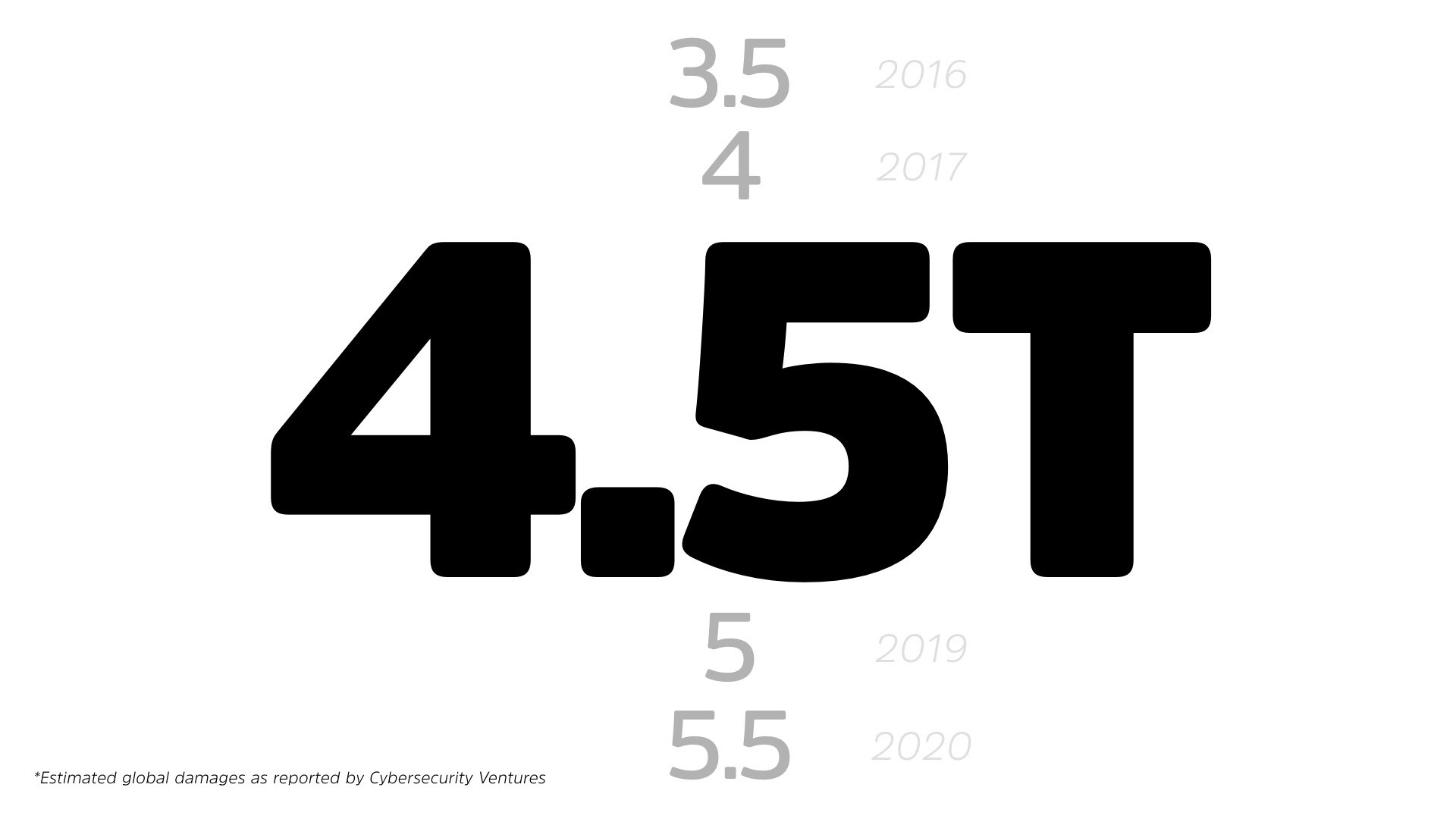

It’s a lot of incentive to keep hacking into systems, to keep stealing data. There’s another number, 4.5 trillion. This is how much damage those cyber criminals will inflict on us this year.

So this accounts for cleaning up things like WannaCry, right? Cleaning up things like NotPetya. These are direct and indirect costs that our businesses and organizations will incur globally this year.

And if you look at the growth on this (these figures come from Cybersecurity Ventures, who have been tracking this over the last few years), we started at about three-and-a-half trillion, and we’re projecting 5.5 trillion by 2020.

So that’s half a trillion dollars in growth every year. That’s what we’re incurring. And we’re only spending 118 billion to stop it.
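To put those figures side by side, here is a minimal Python sketch. It assumes straight-line growth of about half a trillion dollars a year, starting from roughly $3.5 trillion in 2016, and uses the talk's $118 billion for 2018 spend. Both are simplifications of the cited estimates, not a damage model.

```python
# Minimal sketch comparing the talk's damage figures with 2018 defensive spend.
# All values in billions. Straight-line growth of $500B/year from a 2016
# baseline of $3.5T is a simplifying assumption drawn from the talk's figures.

SPEND_2018 = 118.0       # global defensive spend, billions
DAMAGES_2016 = 3_500.0   # billions
DAMAGE_GROWTH = 500.0    # billions added per year


def damages(year: int) -> float:
    """Projected damages in billions, assuming linear growth from 2016."""
    return DAMAGES_2016 + DAMAGE_GROWTH * (year - 2016)


if __name__ == "__main__":
    print(f"2018 damages: ${damages(2018):,.0f}B")  # $4,500B
    print(f"2020 damages: ${damages(2020):,.0f}B")  # $5,500B
    print(f"Damages per dollar of 2018 spend: {damages(2018) / SPEND_2018:.0f}x")
    print(f"One year's growth vs. total spend: {DAMAGE_GROWTH / SPEND_2018:.1f}x")
```

On these numbers, a single year's increase in damages (about $500 billion) is roughly four times the entire global defensive budget.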

These are not positive numbers. This is a bad thing. If you chart them out, this is what it looks like. On the bottom, the blue is us: defensive spending.

Up top, we’ve got damages, and we only have the one number for criminal revenue.

But if we apply the same growth rate we see in spending to criminal revenue (which is extremely conservative, as far as criminal estimates go), this is how the chart looks.

Both of those lines are well into the trillions, and they’re continuing to grow at great rates. We are not catching up, right? Now, we shouldn’t be spending the same amount of money, but the question is, what are we getting for our money?

Are we actually slowing cyber criminals down? We’re spending billions and billions of dollars yet cyber criminals are causing trillions in damage and making off with trillions in profits.

Here’s what it looks like in the headlines from 2016. Yes, MySpace. Ooh, a 2018 keynote speaker referencing MySpace, always a good thing [laughs]. It goes to show, though: this was 300-plus million accounts that were essentially dormant.

Yet these people’s personal information hadn’t changed. It was still a vulnerability. It was a graveyard of social profiles that was relatively undefended.

Another one, Weebly.

It’s a website maker, very popular with teachers and with small and medium businesses.

43 million credentials were stolen in 2016. Mossack Fonseca, the law firm at the centre of the Panama Papers, was breached.

There was huge political fallout from this, because it was all their sensitive client data. Again, 2016.

These are firms that spend money on cybersecurity. These are not people who ignored it. These are people who did their best, yet still got breached.

2017.

We all know Yahoo: every single Yahoo account, all 3 billion. I don’t know how they got to 3 billion; that’s a whole other question. But every single account in Yahoo, and most of us had a Yahoo account at some point, was breached.

Again, it’s not like Yahoo ignored cybersecurity. They may have had different prioritizations, but they’re doing their best. They’re putting effort forward to try to solve this problem.

But 3 billion accounts hacked.

Uber, for all their faults, is not bad from a technical perspective, though obviously the company has had massive, horrendous cultural challenges.

But in 2017 they had a hack that exposed 57 million users.

Worse, they tried to cover it up with a payment to the hackers, framed after the fact as a bug bounty. But again, 57 million accounts were exposed despite the company’s best efforts.

Here’s one that hits closer to home, and apologies to anybody who works for Bell. This is not meant to call you out. It’s simply to make the point that this is relevant to Canadians as well.

A hundred thousand Bell customers were exposed in a breach, their second within eight months. And again, we all know (especially those of you who do work for Bell) that Bell takes cybersecurity extremely seriously. They are a cybersecurity centre of excellence.

They have some of the best in the country working for them. Yet still got breached.

Equifax, right? The vast majority of us were affected by Equifax: 143 million U.S. citizens, 145 million people globally.

Yet if you look at Equifax’s testimony in front of Congress, and you didn’t know any better, you would pass them on almost any compliance framework. You would give them an ISO 27001 audit pass, because they had everything that we preach as best practice.

Right?

They did have a patching process. They frankly ignored it, but they had it in place. They had IPS, they had firewalls, they had all the controls you would expect. They had a strong security awareness program.

They had everything you would put on paper as a best practice, yet they were still responsible for one of the worst breaches in U.S. history.

Twitter. This is an interesting one, 330 million users were forced to change their password.

That’s basically everybody, and it was because of an internal bug in the software responsible for handling passwords.

Instead of hashing the passwords before anything was written to a log file, it logged them in plain text and only hashed them later on down the line. A simple mistake that exposed the passwords internally for every single user on the platform.
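The failure described above is a plaintext password reaching a log before the hashing step ran. The safer pattern is to derive the hash first and log only non-sensitive metadata. Here is a minimal Python sketch using only the standard library. The function names, the logger, and the choice of PBKDF2-HMAC-SHA256 are illustrative assumptions. This is not Twitter's code.

```python
# Minimal sketch (hypothetical names, not Twitter's code): derive the password
# hash first and never hand the plaintext to a logger. Standard library only.
import hashlib
import hmac
import logging
import os

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("auth")

ITERATIONS = 600_000  # illustrative PBKDF2 work factor; tune for your hardware


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) using PBKDF2-HMAC-SHA256 with a random salt."""
    salt = os.urandom(16)
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, key


def verify_password(password: str, salt: bytes, key: bytes) -> bool:
    """Recompute the key and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return hmac.compare_digest(candidate, key)


def set_password(username: str, password: str) -> tuple[bytes, bytes]:
    # The bug pattern from the talk would be logging the request before hashing:
    #   log.info("set_password user=%s password=%s", username, password)
    salt, key = hash_password(password)
    # Safe: log only non-sensitive metadata, never the plaintext
    log.info("password updated for user=%s", username)
    return salt, key


if __name__ == "__main__":
    salt, key = set_password("alice", "correct horse battery staple")
    print(verify_password("correct horse battery staple", salt, key))  # True
    print(verify_password("wrong guess", salt, key))                   # False
```

The design choice is that the plaintext never reaches any code path that writes to a log. Only the salt, the derived key, and the username leave the function.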

Again, a company that takes security very seriously still had an issue.

Again, back home: BMO and Simplii, 90,000 Canadians caught in a bank hack. This attack went through an authentication mechanism. And as we know, banks, especially Canadian banks, are highly regulated.

Cyber security is a massive priority for them. And they do it quite well. Still exposed. This does end at some point, just to let you know.

Just this summer, August. Air Canada, 20,000 mobile app users affected by a data breach that potentially included their passport information as well.

Right?

And again, it’s not like Canada ignores cybersecurity. Last one, from just last Friday, though of course we could have included Swiss Chalet, East Side Mario’s, and the rest of the restaurant chains that just got popped on Monday.

But here’s Facebook from Friday. For all of Facebook’s faults, and trust me, there are many, internal cybersecurity is not one of them. They do take it quite seriously, even with Alex Stamos leaving. Yet 50 million users were impacted by a hack, and this hack actually chained together three vulnerabilities to create a far worse one.

And the interesting thing is that, looked at in isolation, none of these vulnerabilities was severe enough to warrant an immediate response. And we’re seeing that more and more.

At Trend Micro, we run a competition called Pwn2Own.

It’s a competition for researchers to demonstrate zero-day exploits. The top winners in each of the last two years have actually chained together open vulnerabilities that would be classified as roughly a three out of 10 or lower to get root access on various systems, including a VMware exploit.

All right? So we’re seeing this more and more: attackers using complicated chains of vulnerabilities to gain access. In Facebook’s case, the attack combined a video upload feature with “View As,” which is ironically a security tool. It lets you view your Facebook profile as somebody else so you can verify that your permissions are properly set.

They exploited this and stole the access tokens for 50 million users.

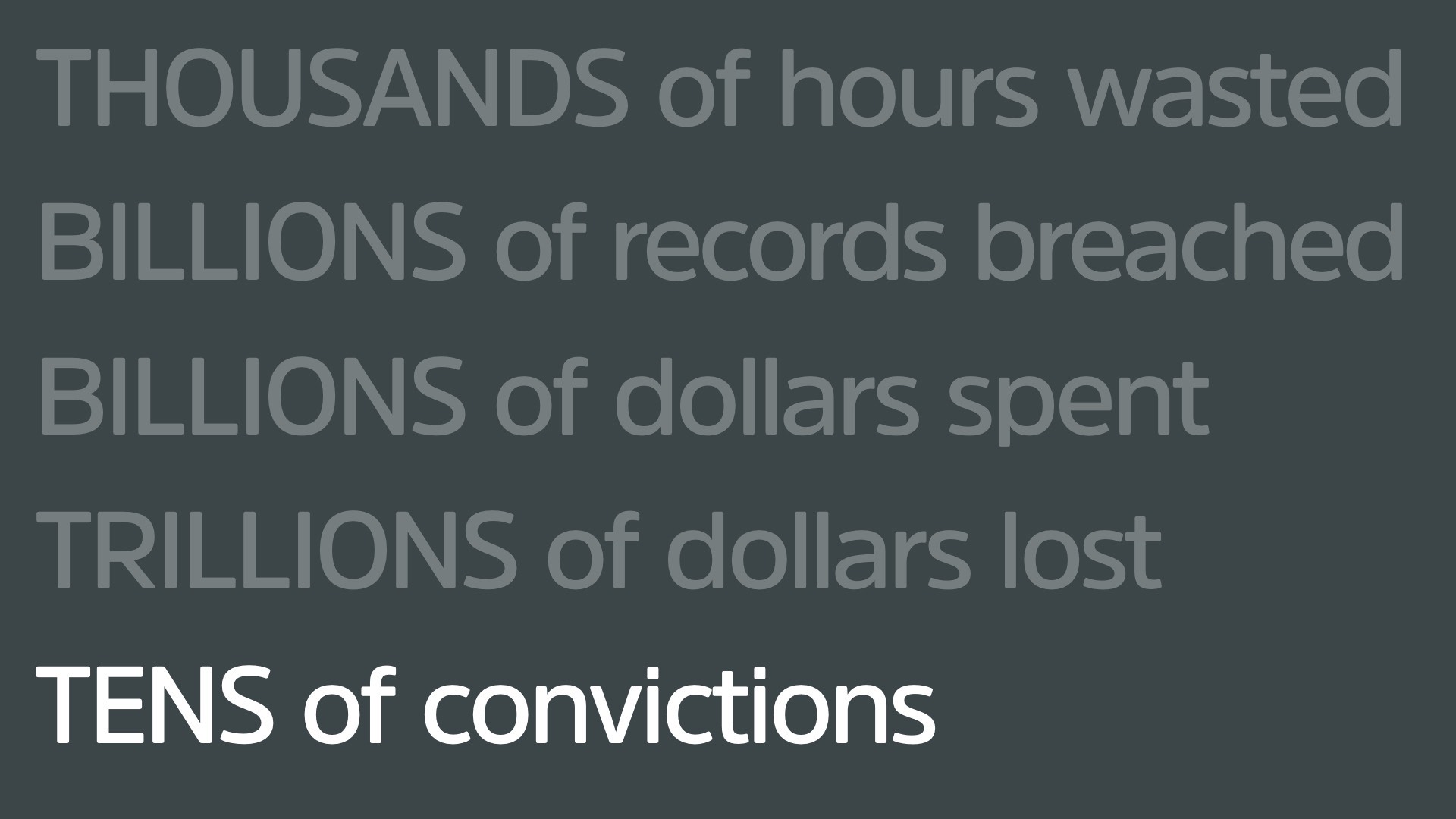

This is a problem. We’ve got thousands of hours wasted. We’ve got billions of records breached. We’ve got billions of dollars spent trying to stop this from happening.

We’ve got trillions of dollars lost. Worse yet, we have tens of convictions of cybercriminals globally, right? You’ll notice the bottom number is a little out of whack, right?

Everything else is in the billions or trillions. Globally, collaborating with law enforcement and the security community around the world, we’ve made a handful of convictions. So the economics very much favour cybercriminals. This is a highly profitable business.

I will ask you a simple question. And then I promise I will try to make this happier.

Is this what success looks like?

No.

We are not being successful in our profession. We may have small wins, but globally, we are not winning. We are losing. Why? A very simple question. That’s what we’re gonna dive into for the rest of the time here.

We are gonna look at why we are where we are, and what we can do about it. So pause.

A quick pause. I’ll explain how I got here, to delivering a talk like this.

So I work for a company called Trend Micro. I’m the vice president of cloud research.

By training and education, I’m actually a forensic investigator. I’ve been in the private sector for the last seven years, and was with the federal government for 10 before that.

And before that, in the private sector again. Right now I have the opportunity to work with a large global research organization, so I get to see a lot of cybercriminal statistics and a lot of malware statistics from around the world, not just from us, but from our partners.

I also get to travel around the world and talk to CIOs, CSOs and CEOs from organizations in every kind of vertical you can think of in every jurisdiction you can think of.

And, of course, I have a cloud focus, but that’s actually relevant to this as well, because part of my own research ends up being on the edges of what you would consider standard IT. I look a lot at operational technologies.

So things like robots, healthcare devices, tractors (believe it or not), vehicles, airplanes, all that kind of stuff: real-world technology that’s now crossing over into IT. And then on the cloud side, I look at the extreme end of what we consider serverless technologies.

So where you’re building your own custom applications from a bunch of SaaS services and writing as little code as possible.

The interesting thing about both of these areas of technology is they’re complete opposites when it comes to timelines. Things in the serverless world work on millisecond timelines; things in the operational technology world work on years, if not decades, right?

Nobody buys an MRI expecting to buy a new one next year, like a phone, right? As a hospital or a healthcare organization, you buy an MRI with an expected lifespan of seven to 10 years.

The interesting thing about both of these, besides that dichotomy in timelines, is that they’re both pushing the boundaries of what security is capable of, because the technology around them, and the security around that technology, is generally being built by non-security folks.

So people are taking a fresh look, and they’re coming up with some interesting solutions and some interesting ways to tackle this problem.

So what we’re gonna do for this talk for the rest of this keynote, and I promise this is where it starts to get happier, is we’re gonna look at the history. We’re gonna look at how we got here.

We’re gonna look at how we generally handle new capabilities, what our top problems are right now and what our biggest opportunity could be.

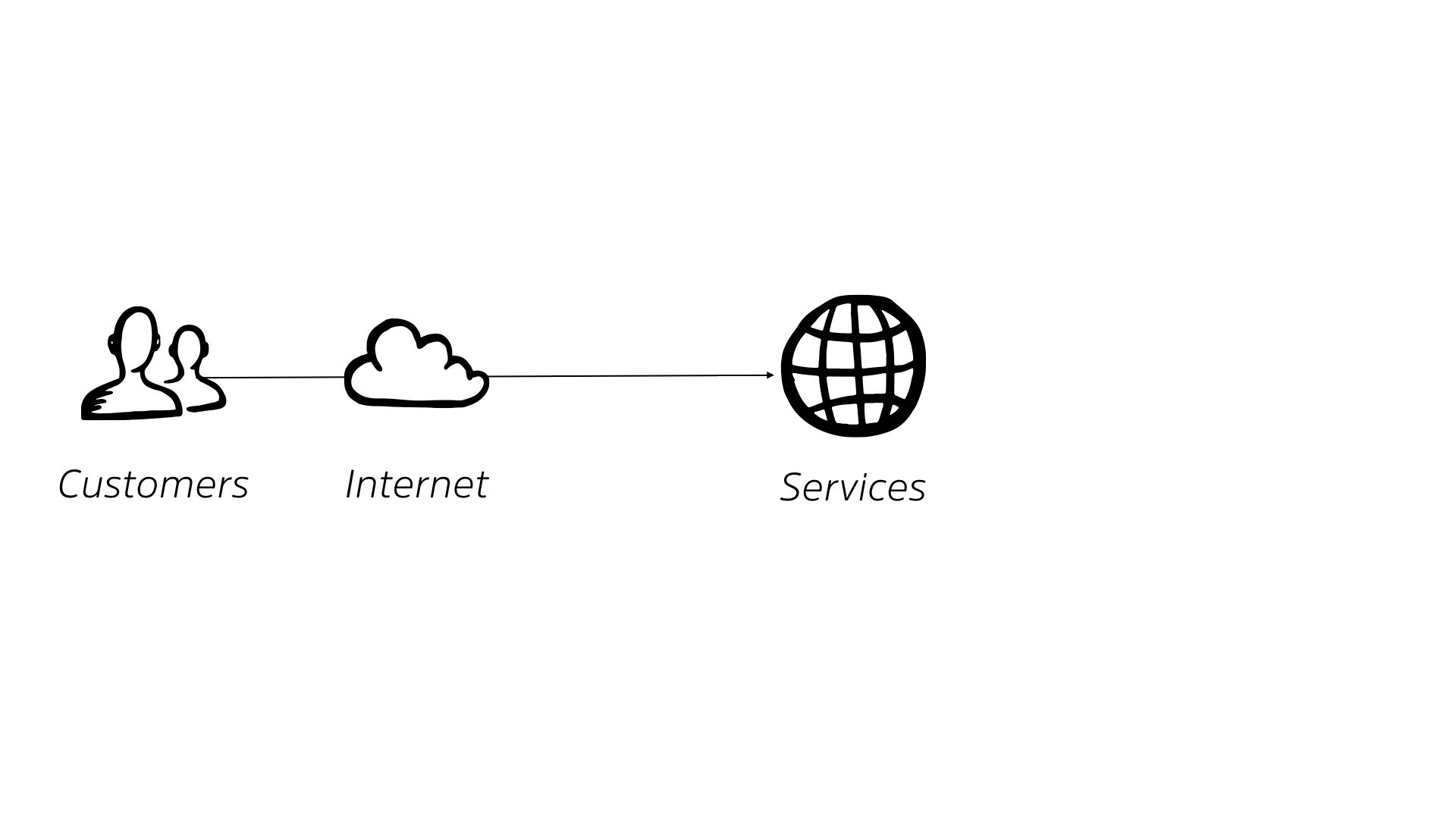

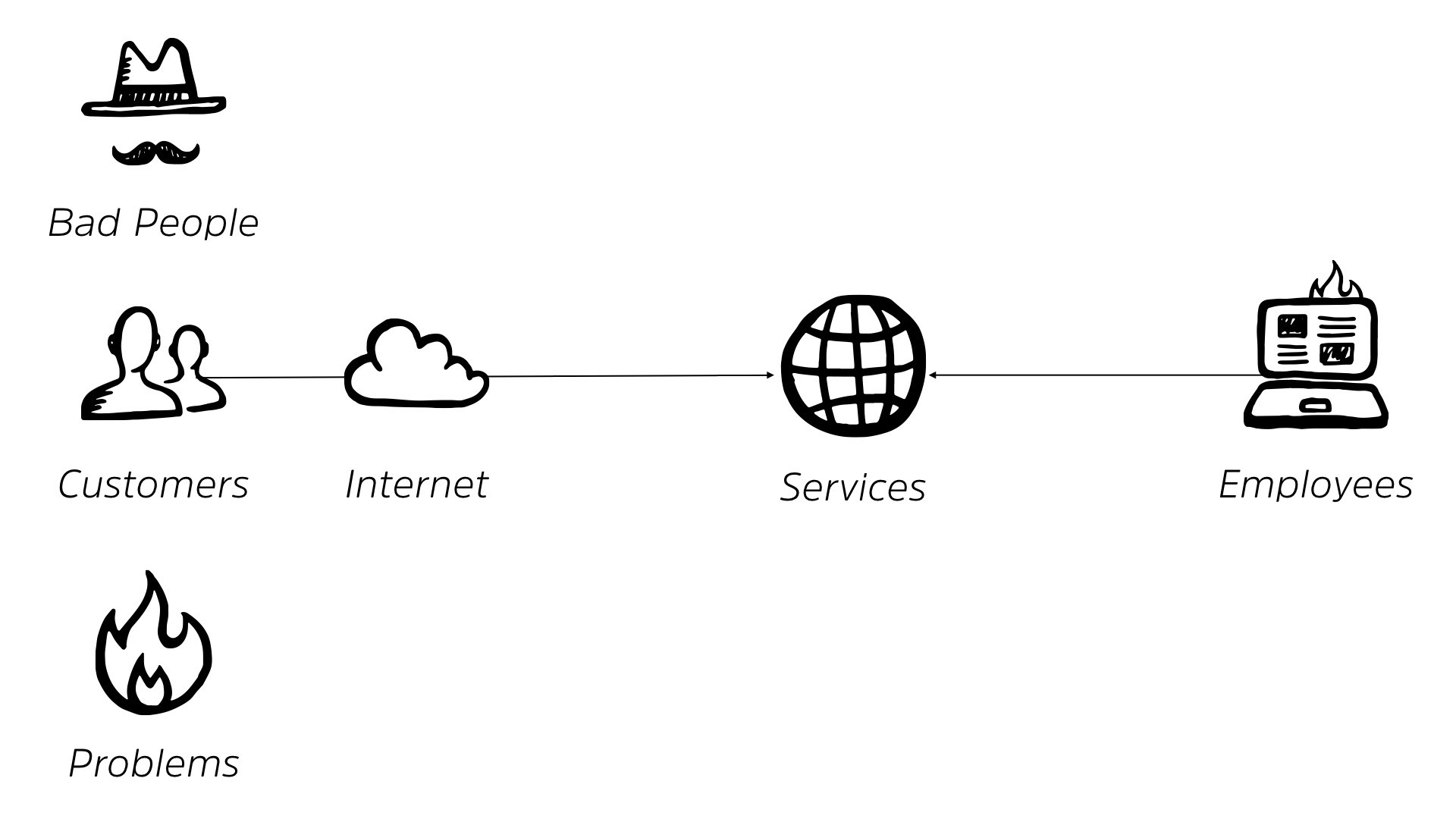

Okay? So let’s look at the history. How did we get here? Well, in the beginning, and I mean way back in the history, when we first started building out business IT, we had some sort of service, right? We had something good.

We had an online store. We had some sort of backend thing that we were offering to other businesses. It really doesn’t matter. We had something that we were offering and we had customers and they were out there on the internet.

That’s the original use of the cloud icon, by the way: some random internet thing, not the cloud in today’s sense. The gray hairs in the room will remember that.

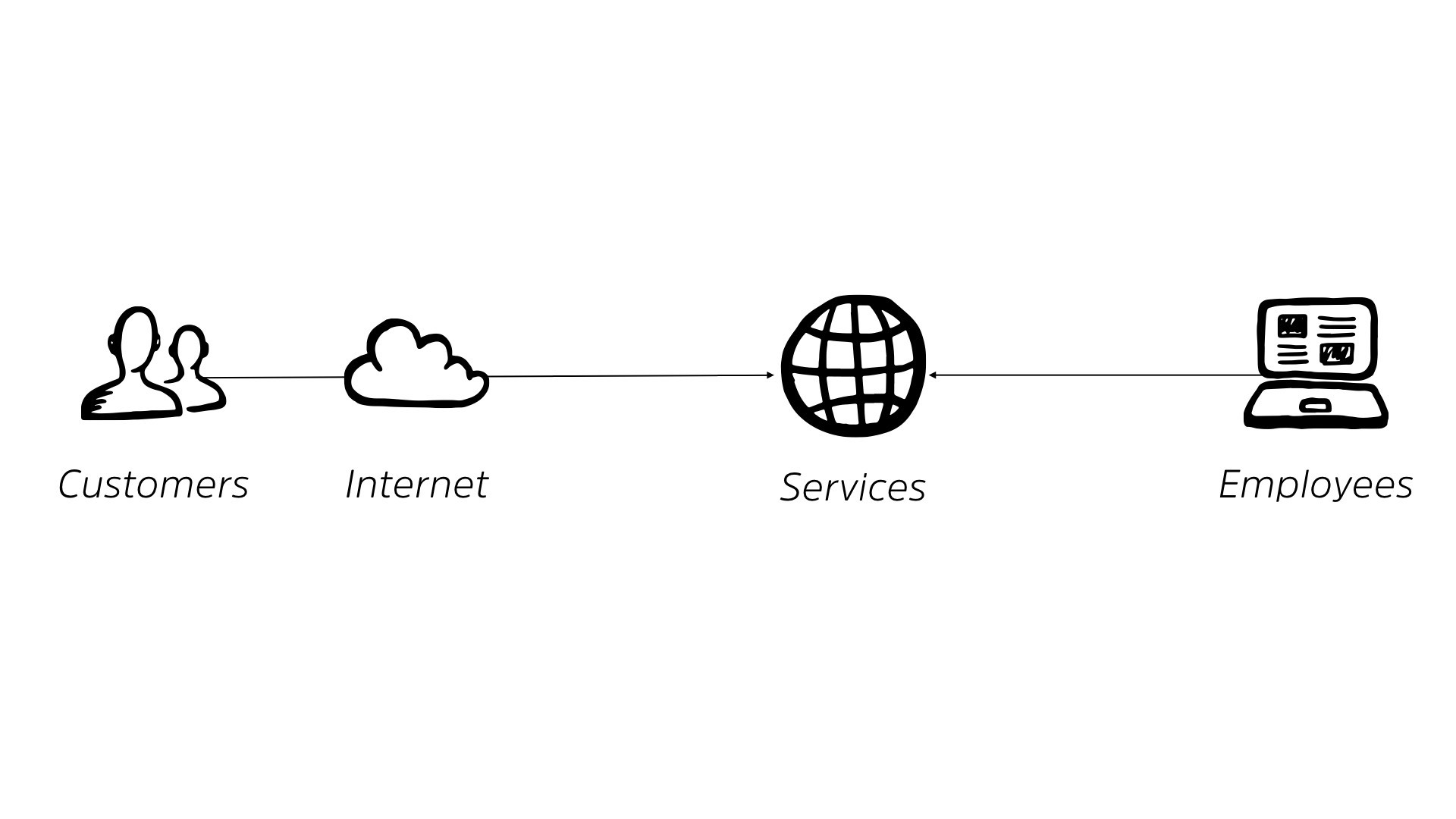

You had customers out on the internet, and they connected into your services. This was your business working, right? You were surfing that internet wave, and things were working out really well. You also had employees that connected to these systems.

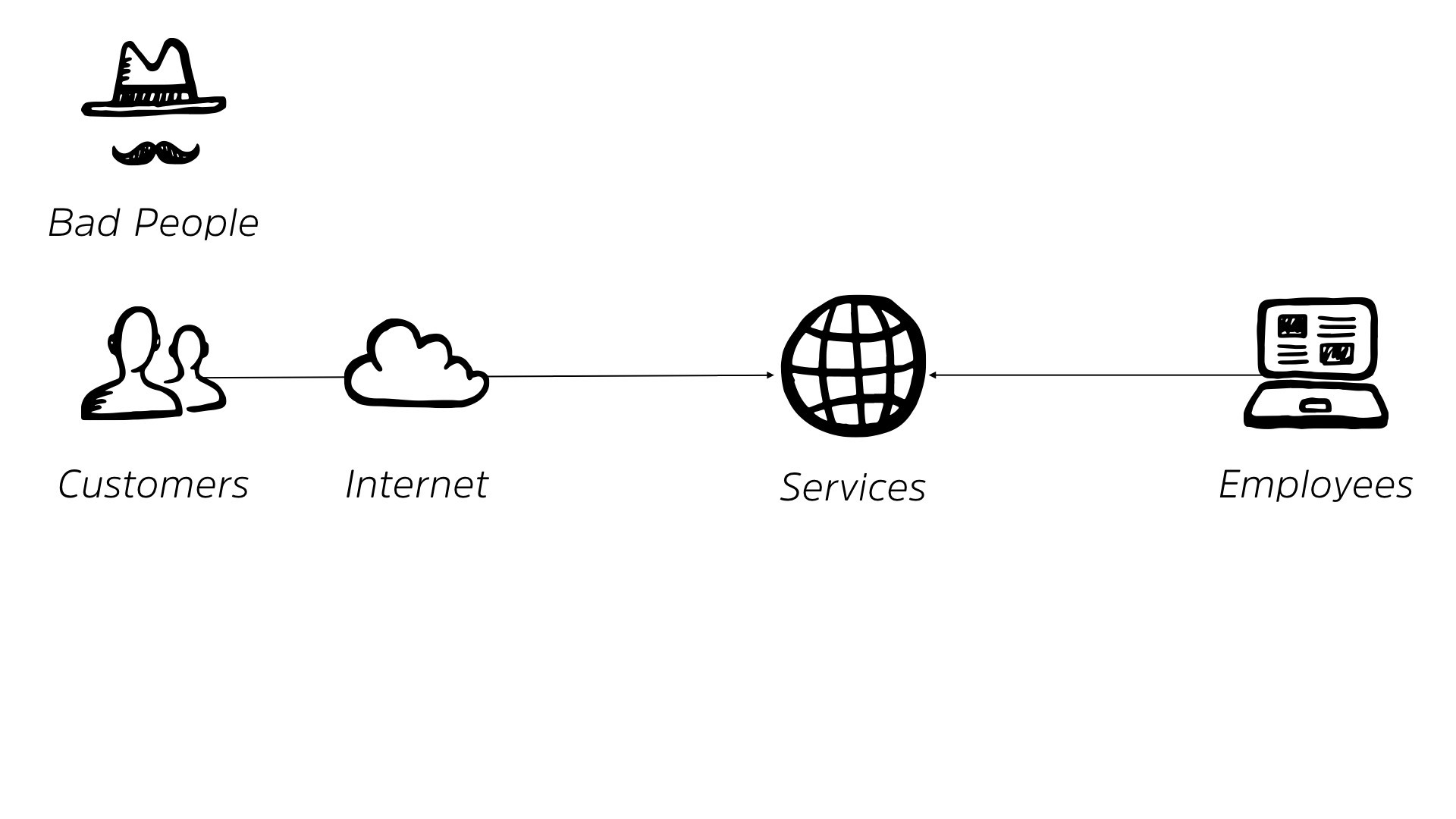

Life was good. Then we started to realize that there were problems. There were bad people on the internet. Shock of shocks, right? We didn’t design the internet with the idea of bad people, but they showed up anyway.

We also had a number of problems out online, and we started to see some problems internally from our employees as well. Right?

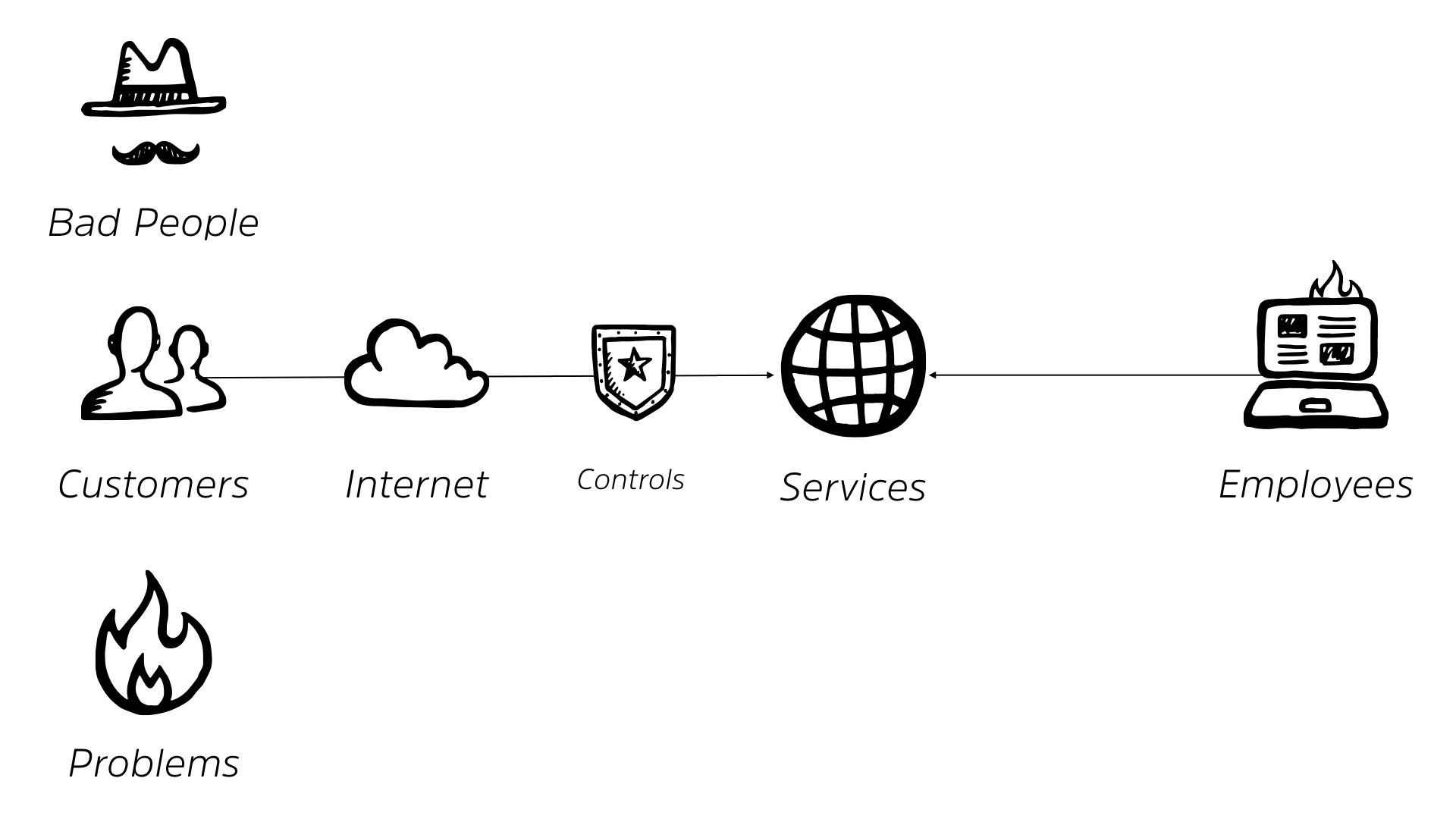

So our rosy little picture starts to break down a bit. So naturally, we’re gonna shove some sort of security controls between us and the internet, right? We’re gonna add some level of protection.

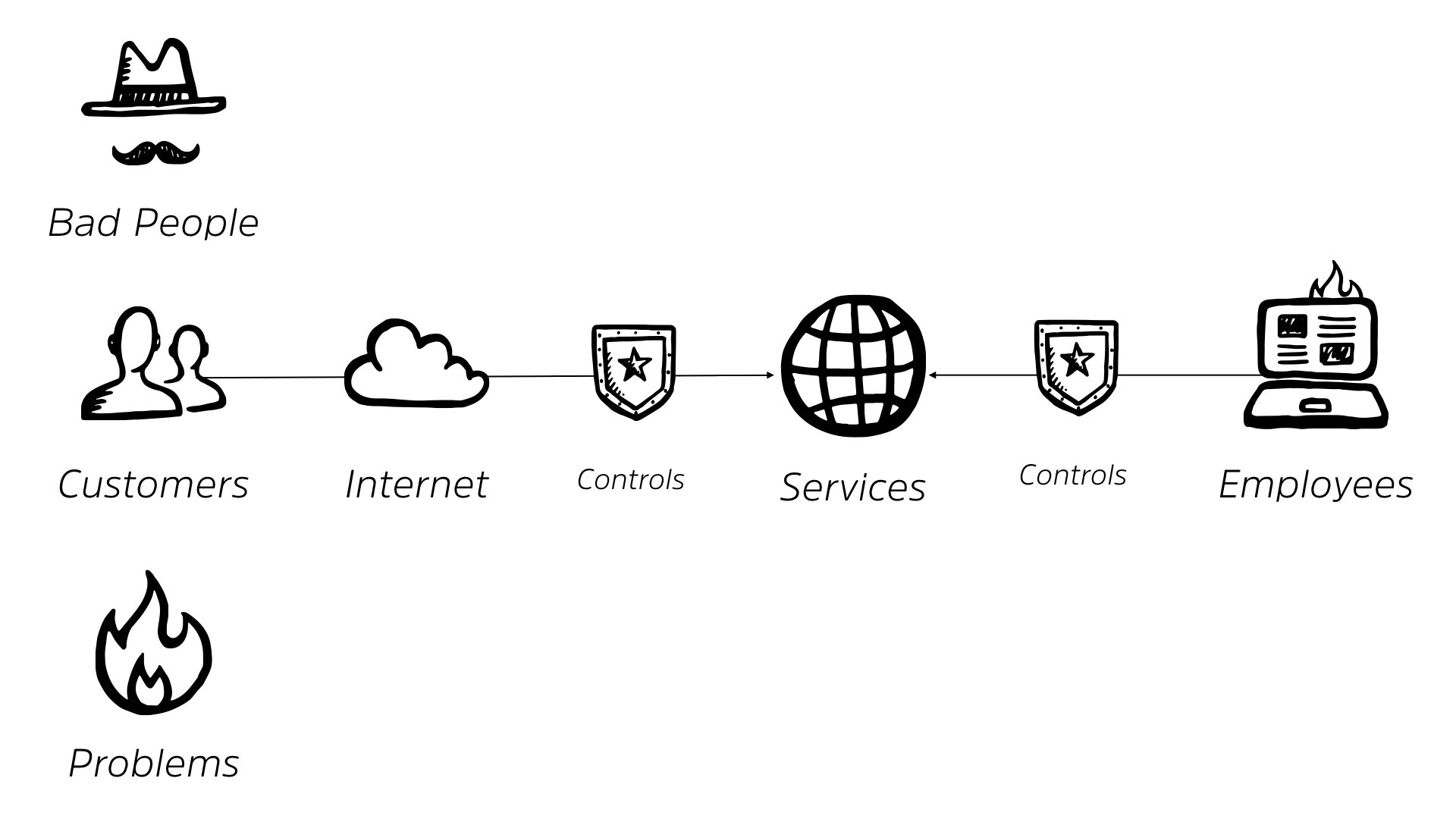

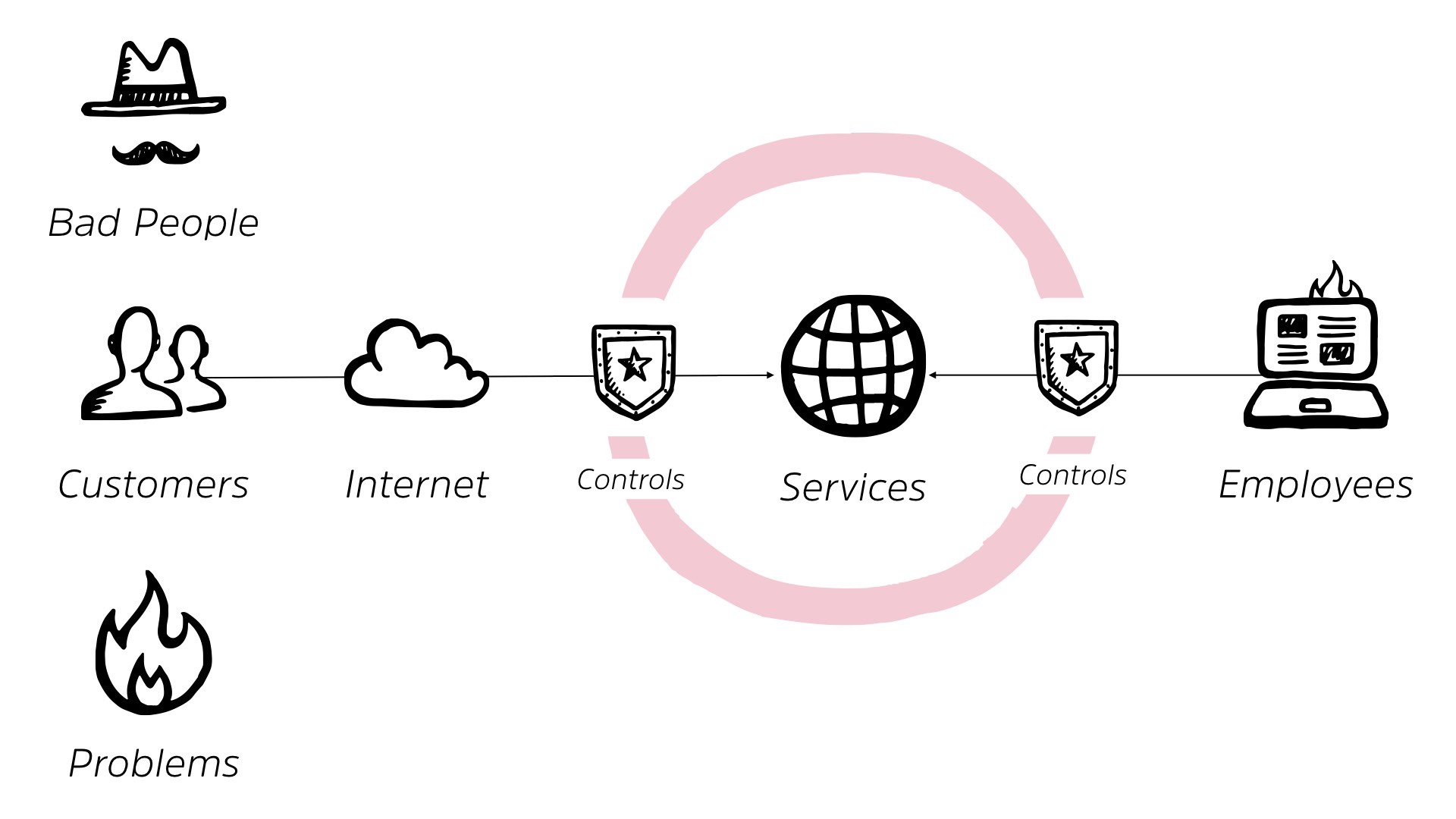

And while we’re at it, we’re gonna add some controls between our services and our employees as well. This is what gave us our traditional perimeter, right? It makes perfect sense when you lay it out like this. We’ve got stuff on the outside.

We’ve got stuff on the inside. We have our golden goose laying that egg and we wanna protect it. So we build out a perimeter around it. We’re all very familiar with this, right?

So with this came our goal for cybersecurity: protecting the confidentiality, the integrity, and the availability of our information.

That’s our good old CIA triad that you then have to explain to everybody outside of security. It’s not that CIA, it’s the other CIA.

CIA triad. It makes perfect sense. This is what we wanna do. We wanna make sure that only the people we want accessing our data can access it, that the data is always valid and proper, and that it’s available when we need it.

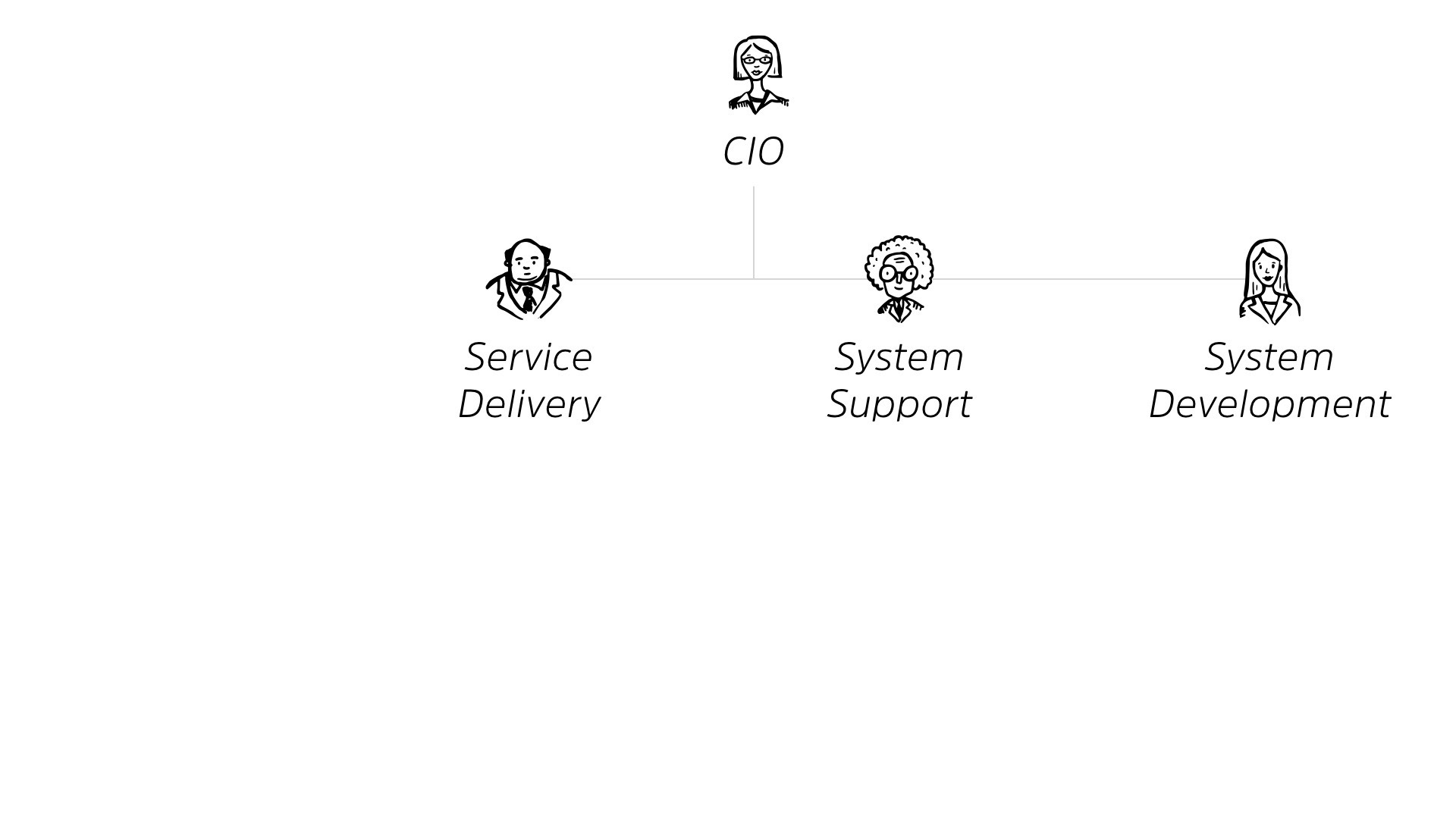

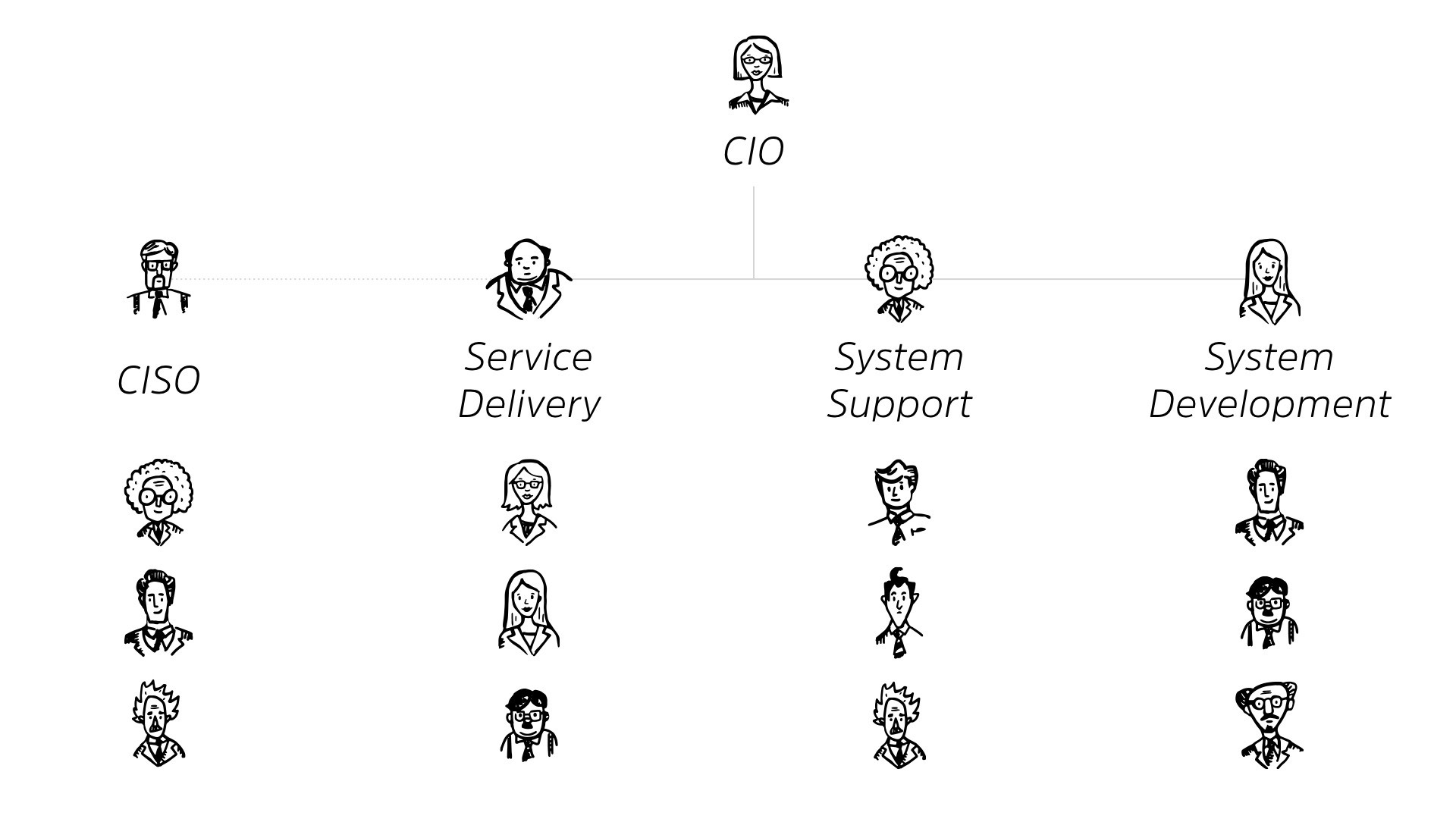

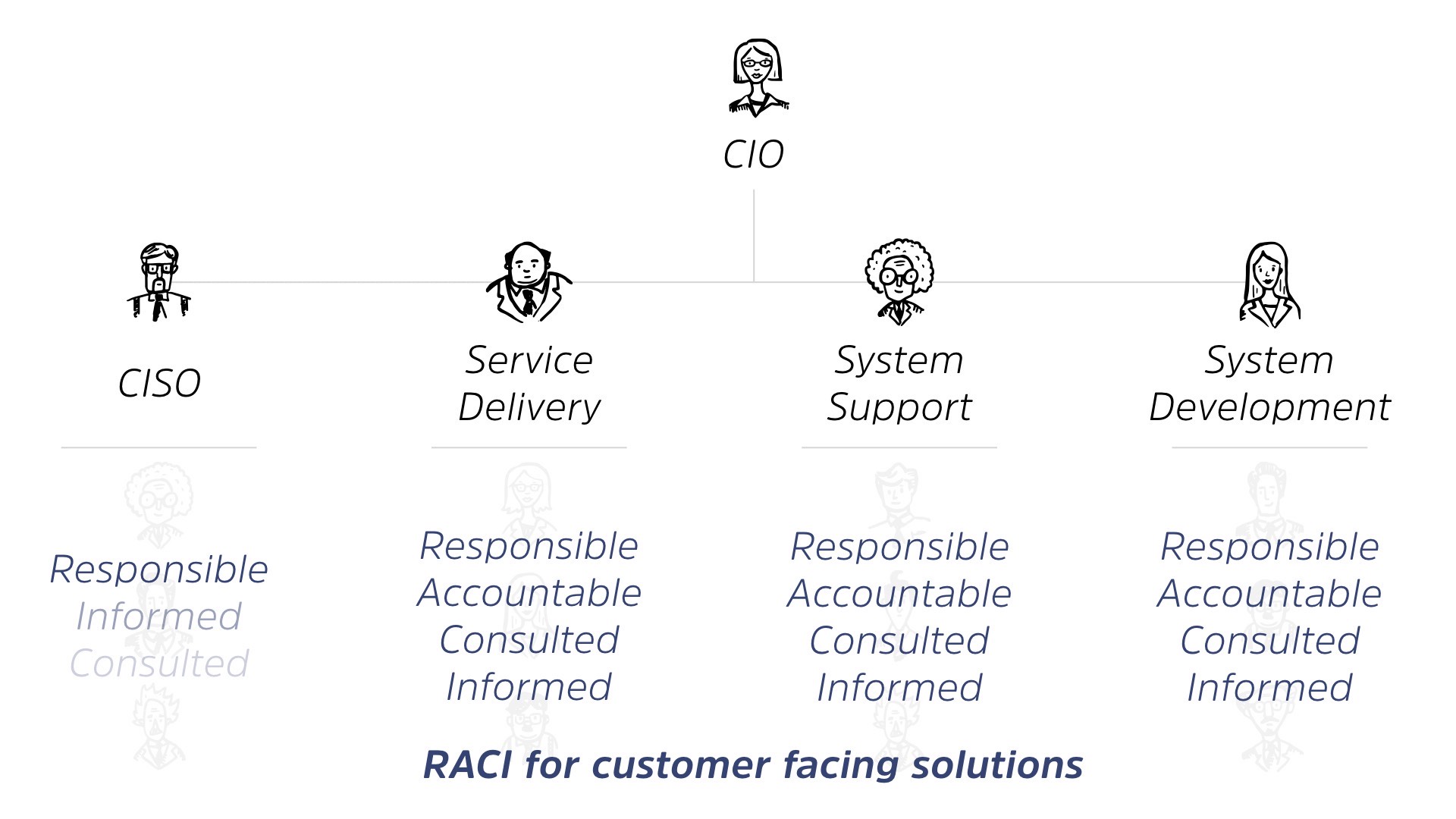

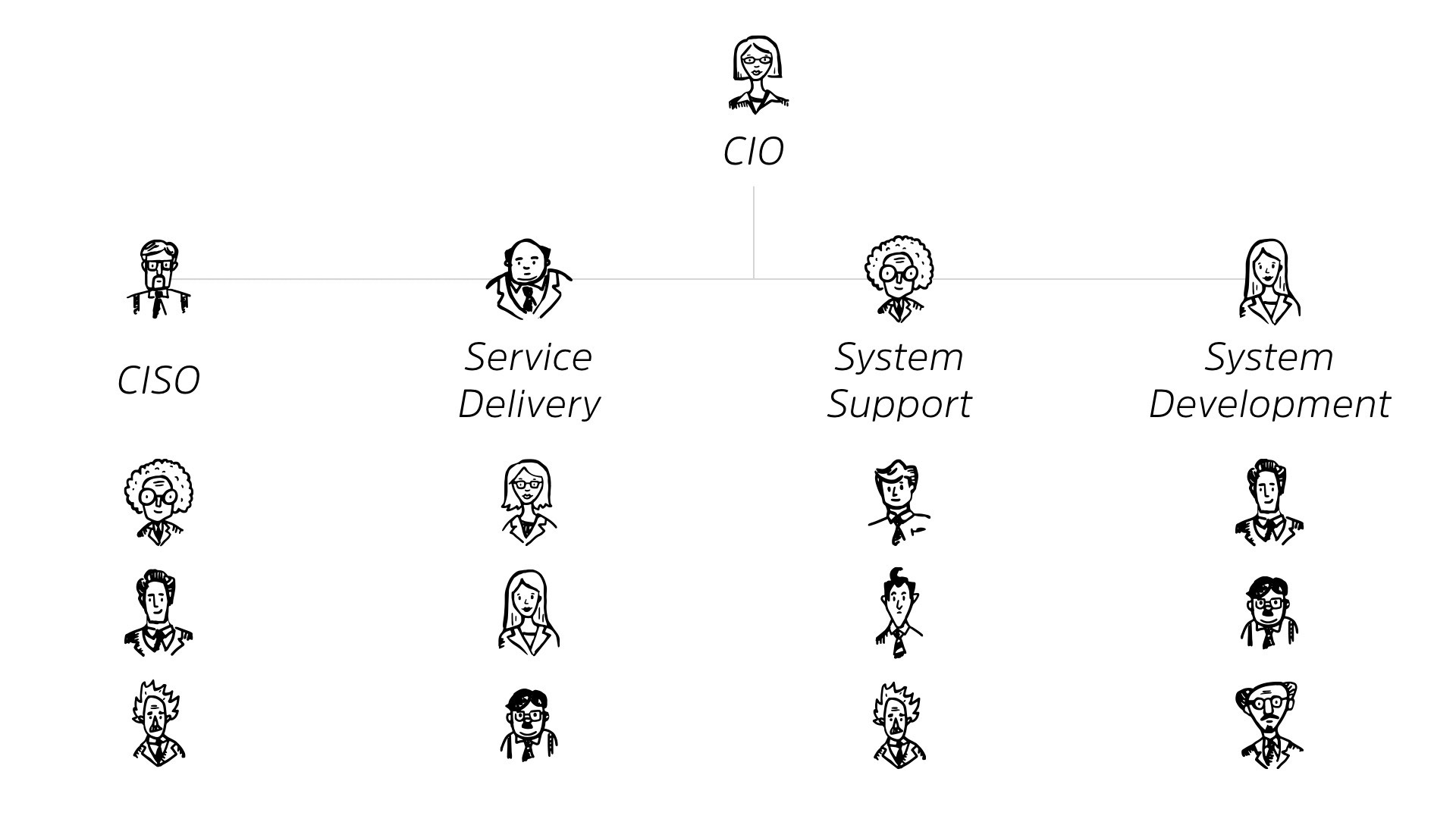

It’s a great goal, nothing wrong with that goal. We all should continue to strive for that goal. But it shaped how we built our organizations and how we lay out the CIO’s organization, our information technology, within the org.

Now, this is gonna be a generic explanation, but depending on the size of your organization, you may have more of this consolidated, or you may have more of it expanded out into different areas.

But essentially, you’ve got your CIO at the top of the chart. Under the CIO, there’s someone responsible for service delivery, for system support and for system development, right? They all roll up into the CIO.

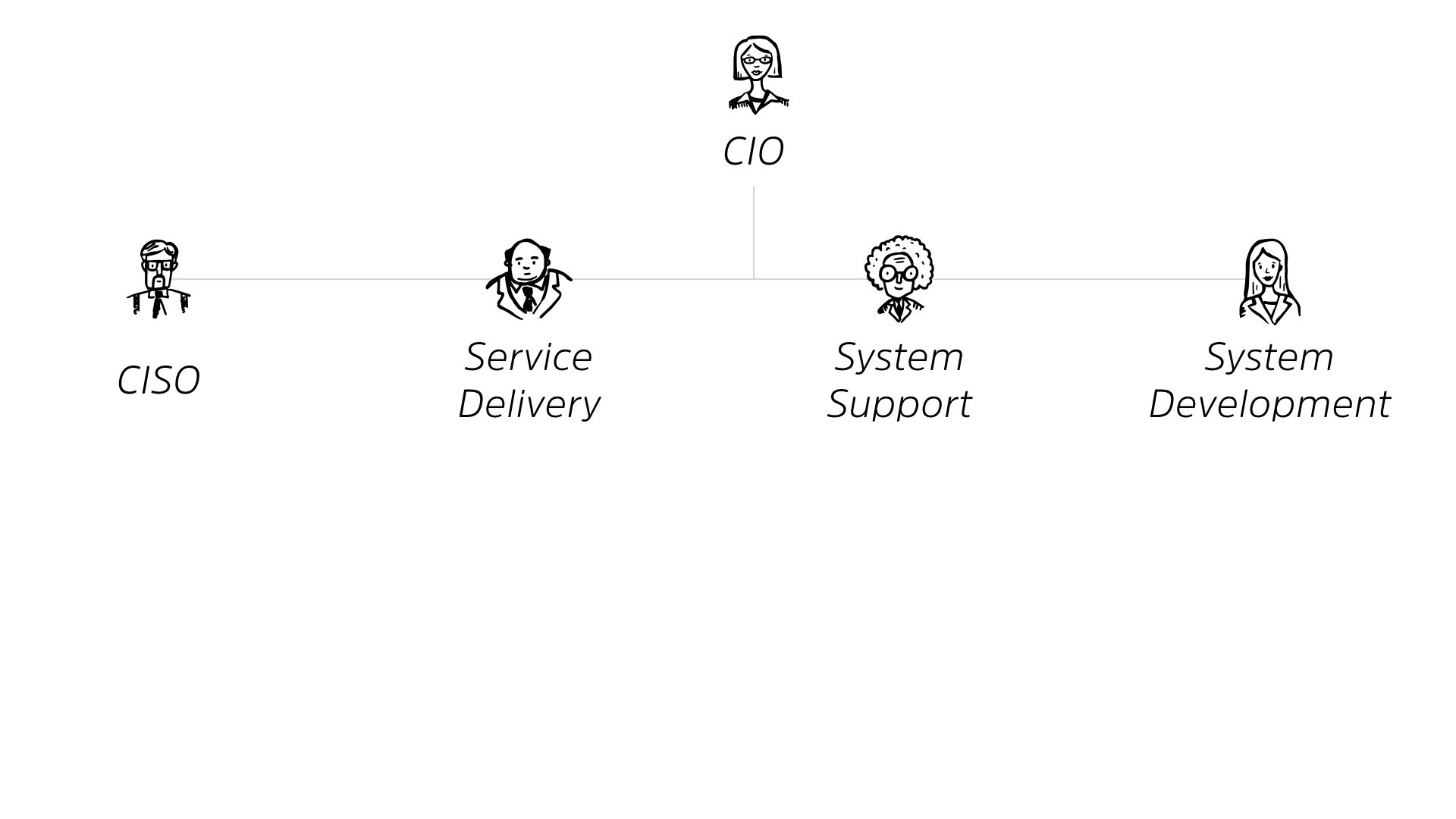

We also have our CSO.

They may or may not be under the CIO. Whether or not they’re under them from an HR perspective, they’re essentially reporting up to them, even if they’re independent.

Now, I know that may be controversial, and we’re finally starting to break out of that, but at the end of the day, we’re still part of the CIO’s organization because they have all the stuff we’re trying to defend.

Okay?

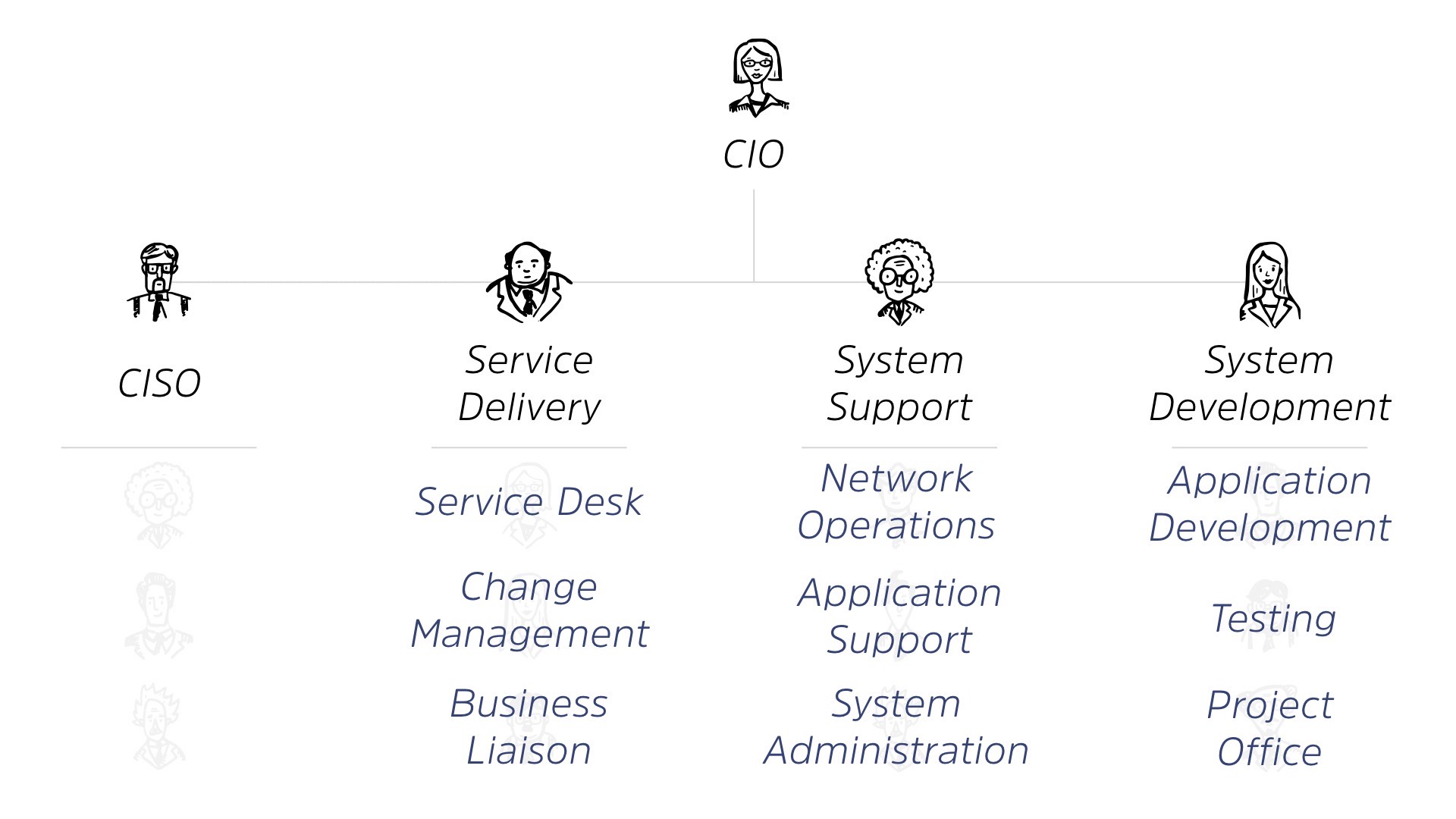

Under each of these, we’ve got teams. We have teams who are doing different kinds of work.

That work breaks down into things like the service desk, change management, and your business liaison with the rest of the organization.

This all falls under service delivery. Under system support, we do things like network operations, application support, system admin, right? They’re the people who keep things working, and under system development, we’ve got application development or customizing off the shelf stuff, testing. And a lot of the time the project office is run out of there. Right?

This makes sense. This is the stuff that we deal with every day.

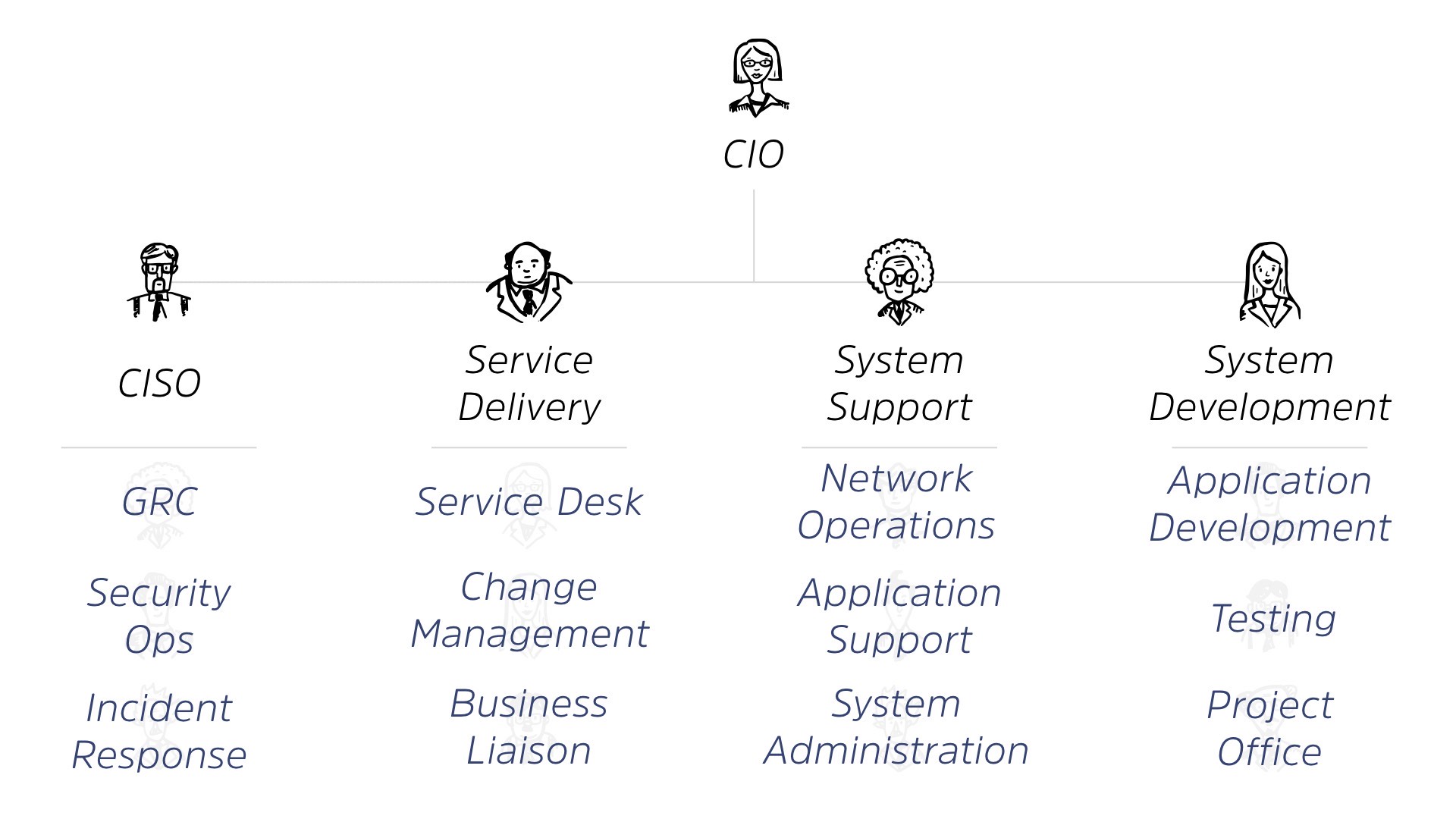

Under our CSO, we’ve got things like governance, risk and compliance. We’ve got security operations. We’ve got quarterbacking incident response, right? So no matter the scale of your organization, it’s something roughly like this.

This makes sense? This lines up with everyone’s worldview?

Yeah. It’s okay to say, yes. I’m gonna stop being mean I promise. Okay.

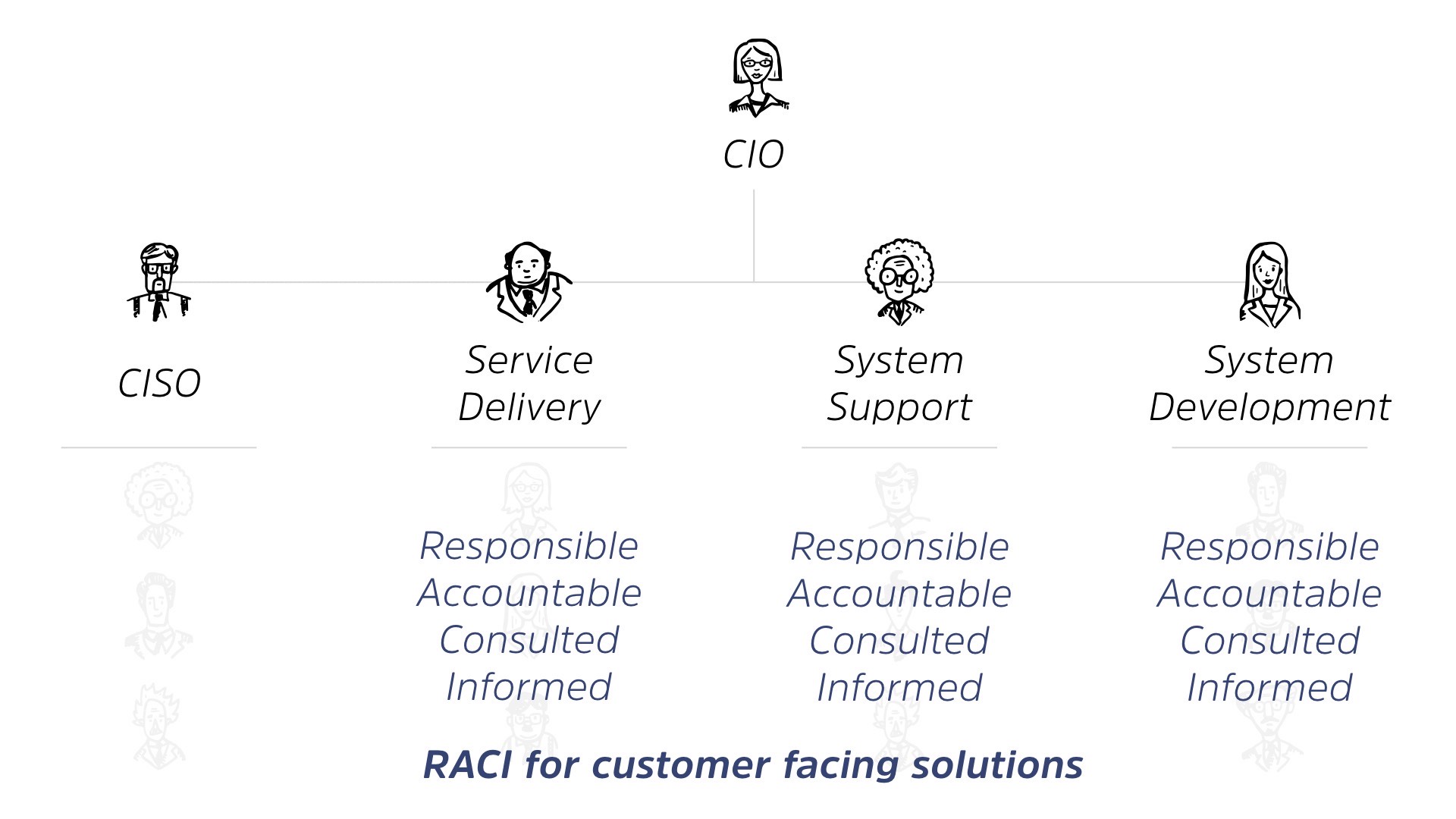

So the interesting thing is, when you start to apply the concept of RACI (responsible, accountable, consulted, and informed), you see a really stark difference between these four categories.

These three, so service delivery, support, and system development, all have an interesting mix of what they’re responsible for and what they’re accountable for. Remember, you can only ever have one person accountable for something.

They’re always consulted and informed. This triad tends to work really well-ish. As far as IT organizations ever worked well, this is relatively well-established.

When it comes to the security organization, though, we’re definitely responsible for something, right? We are called to the carpet when there’s a breach, right?

The CSO is the one who has to answer to the board, who has to answer to the public, and who may be shown the door in the event of a breach. But are they informed of everything else that’s going on in the organization? Sometimes. You know, normally late.

And are they consulted? Definitely not with the regularity that they should be, right? So there’s a stark contrast between how we’re positioned in the organization and how things actually get done.
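The RACI contrast can be made concrete with a small data structure. This minimal Python sketch uses hypothetical activities and assignments that exaggerate the pattern described above: security is accountable when the web application is breached, but has no role in building or running it. It also checks the rule that each activity has exactly one accountable party.

```python
# Minimal sketch of a RACI matrix (hypothetical activities and assignments).
# It checks the one-accountable-party rule and lists activities where a given
# team has no role at all.
from enum import Enum


class Role(Enum):
    RESPONSIBLE = "R"
    ACCOUNTABLE = "A"
    CONSULTED = "C"
    INFORMED = "I"


# activity -> {team: set of roles}; security appears only where it is blamed
MATRIX: dict[str, dict[str, set[Role]]] = {
    "develop web application": {
        "system development": {Role.RESPONSIBLE, Role.ACCOUNTABLE},
        "system support": {Role.CONSULTED},
        "service delivery": {Role.INFORMED},
    },
    "run web application": {
        "system support": {Role.RESPONSIBLE, Role.ACCOUNTABLE},
        "service delivery": {Role.INFORMED},
    },
    "web application breach": {
        "security": {Role.ACCOUNTABLE},
        "system support": {Role.RESPONSIBLE},
    },
}


def accountability_errors(matrix: dict[str, dict[str, set[Role]]]) -> list[str]:
    """Return activities that do not have exactly one accountable party."""
    errors = []
    for activity, assignments in matrix.items():
        owners = [team for team, roles in assignments.items() if Role.ACCOUNTABLE in roles]
        if len(owners) != 1:
            errors.append(f"{activity}: {len(owners)} accountable parties")
    return errors


def left_out(matrix: dict[str, dict[str, set[Role]]], team: str) -> list[str]:
    """Return activities where the team is not responsible, accountable, consulted or informed."""
    return [activity for activity, assignments in matrix.items() if not assignments.get(team)]


if __name__ == "__main__":
    print(accountability_errors(MATRIX))  # []
    print(left_out(MATRIX, "security"))   # ['develop web application', 'run web application']
```

The matrix passes the one-accountable-party check. It also shows security absent from the activities that determine whether a breach happens, which is the gap the talk is pointing at.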

Now, with apologies to the CSOs in the room, the CSO is the worst job in IT. The worst. I say this with a little bit of humour, with a lot of sadness.

The reason why it’s the worst is because it’s your you-know-what on the line, but your teams are not actually directly responsible for anything that you’re called to account for. Right?

So if that web application is breached, you don’t own that web application. The system development team owns that application. The system support team is running that application.

The service delivery team is managing all of the commercialization, the productization, of that web application.

You are using controls that are around that application, but it’s still your butt on the line.

That’s a really hard place to be. You are essentially negotiating everything that your team is doing, right? It’s very rare. You can’t just send people out to do stuff, right?

Well, we do, but only in very limited ways. All right, so I’ll give you an example. Okay? We’re gonna look at how you would evaluate a new capability within your organization, within your security organization.

So this is Joe, he’s our CSO. Hi Joe. We’re sad for Joe, but we’re also happy and optimistic that Joe can do better. Okay? Joe is really worried about a number, and that number is 170.

170 is the average number of days that a persistent threat will sit on the network right now. I say “right now” like that number changes a lot. It doesn’t. It hasn’t really changed in about a decade.

Okay? It’s gone up and down by maybe plus or minus 15 days, but it’s ballpark around 170 days that a persistent attacker will sit on our networks.

Joe doesn’t like that number. We don’t like that number. That’s a bad number, right? That means somebody’s gotten past our defenses and is sitting there, doing whatever they want: enumerating our network, maybe exfiltrating data, for 170 days.

So Joe wants to cut this number down. His team wants to cut this number down. So one of his team proposes a very simple thing, “Let’s start a threat hunting program.”

And I promise, this is not a trick question. How many of you are starting threat hunting programs or have a threat hunting program? Show of hands.

Few. It’s okay. It’s not a trick. I promise, it’s not a trick.

There are other tricks. This is not one of them. Okay. So a decent amount, I’d say maybe 15% of the audience is starting this.

So for those of you who aren’t down the threat hunting path, threat hunting is essentially the realization that we’re sick and tired of being notified after something has already happened. So we’re gonna try to get ahead of that wave and actually find bad stuff before it causes a real problem.

Right? So you’re gonna go out and proactively look for an issue as opposed to just waiting to get hit by it. Right? It’s a great idea. So the response from Joe is always gonna be, “What do we need? You wanna start up this program? You wanna start up threat hunting. What do we need to do it?”

The answer is gonna be, “Well, we probably need a couple of new tools and we need a couple new team members.” Totally reasonable, right?

“This is the capability that we wanna deploy. We’re gonna need a couple of people to do it.”

So Joe, as a responsible business leader, as a responsible CSO, is gonna think of a couple of things. He’s gonna think of what’s existing on his to-do list.

He’s gonna look at it and he’s gonna go, “Well, you know what? I really wanna make our analytics better.” Because analytics are what let Joe go to the board and explain the value of security to the organization, and why you’re spending a chunk of that 118 billion globally. Right?

He’s also gonna wanna do things like expand his application security program. It’s still young, but it’s showing some promise, and he wants to keep investing there. He also wants to fill those open spots in the SOC. He’s had requisitions open for five or six people.

He can’t get them in fast enough. He wants to make sure he’s focusing on that.

He also wants to deploy that stuff that’s sitting on the shelf, right? We all have those systems or that box that you bought that you might’ve gotten deployed, but aren’t really using, or maybe haven’t even deployed yet. Right?

And then he’s got this threat hunting team idea. So there’s a ton of other priorities on Joe’s plate, on the security team’s plate. So the question is, how do you evaluate whether threat hunting is going to be a good program? Is that gonna push you forward?

Or, regardless of whether it’s a good idea or not, Joe looks at this list and knows he needs more people, right? We’re all feeling that pain; we need more people. But if you notice, everything on that list is actually a band-aid solution, too.

If you look at this list, none of them actually addresses the root cause of a security problem. Right?

They’re the best we can do given our position but none of them actually solve the root of a problem. They’re all about detecting it faster and responding to an issue after it’s occurred. None of them are actually going and plugging that hole.

That’s a problem. Everything’s a band-aid, but this is the world we live in. We’re basically deploying a ton of band-aids, right? Maybe we get cool ones that have Barbie or Star Wars or, you know, Paw Patrol on them, but at the end of the day we’re just putting a bunch of band-aids on stuff.

So let’s go back to this org chart. We had teams underneath it, right? We know that we, as a CSO, as a security organization, have a team of people. But while I drew it this way to illustrate the responsibilities, that’s not actually what the ratios look like.

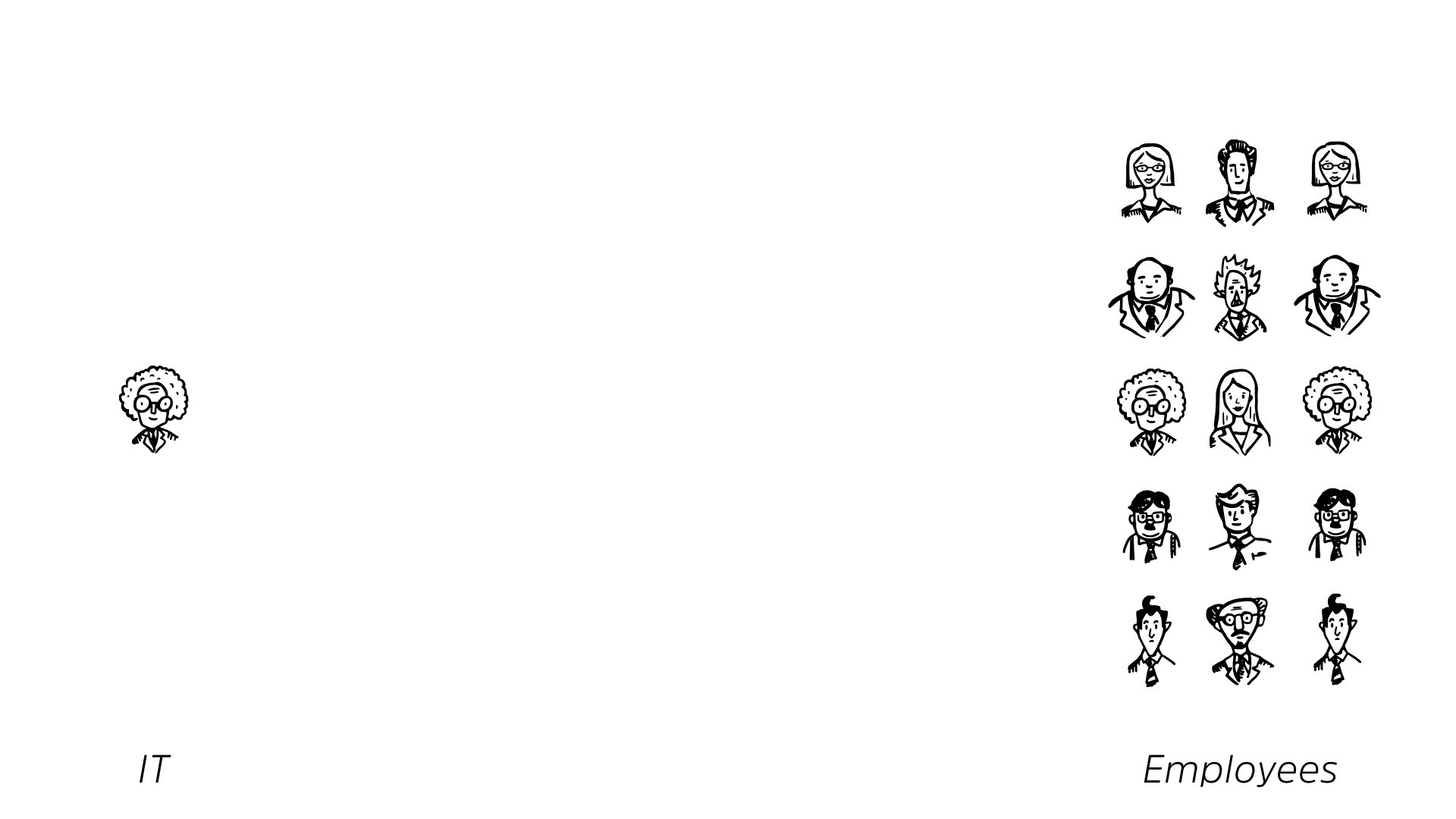

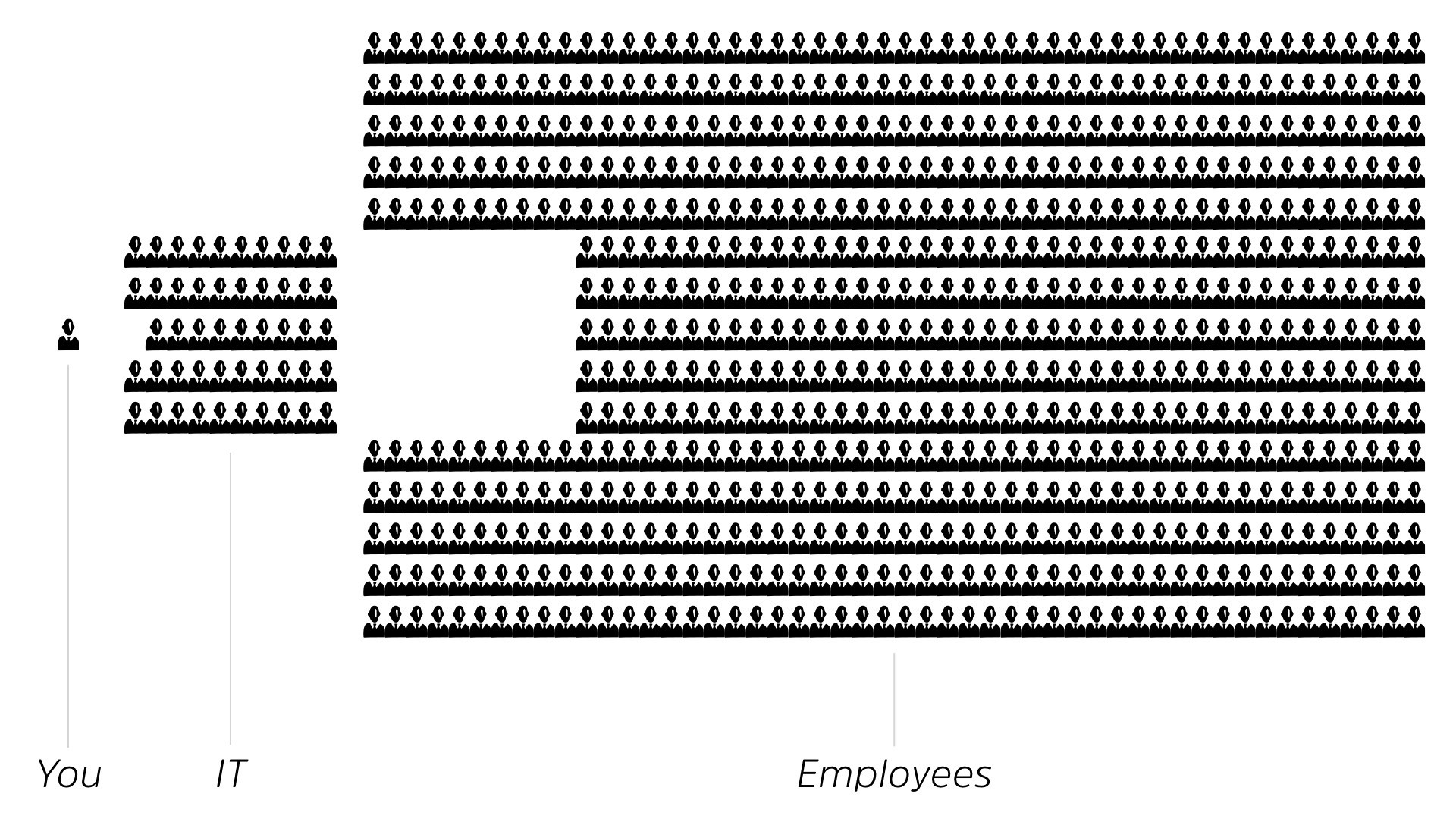

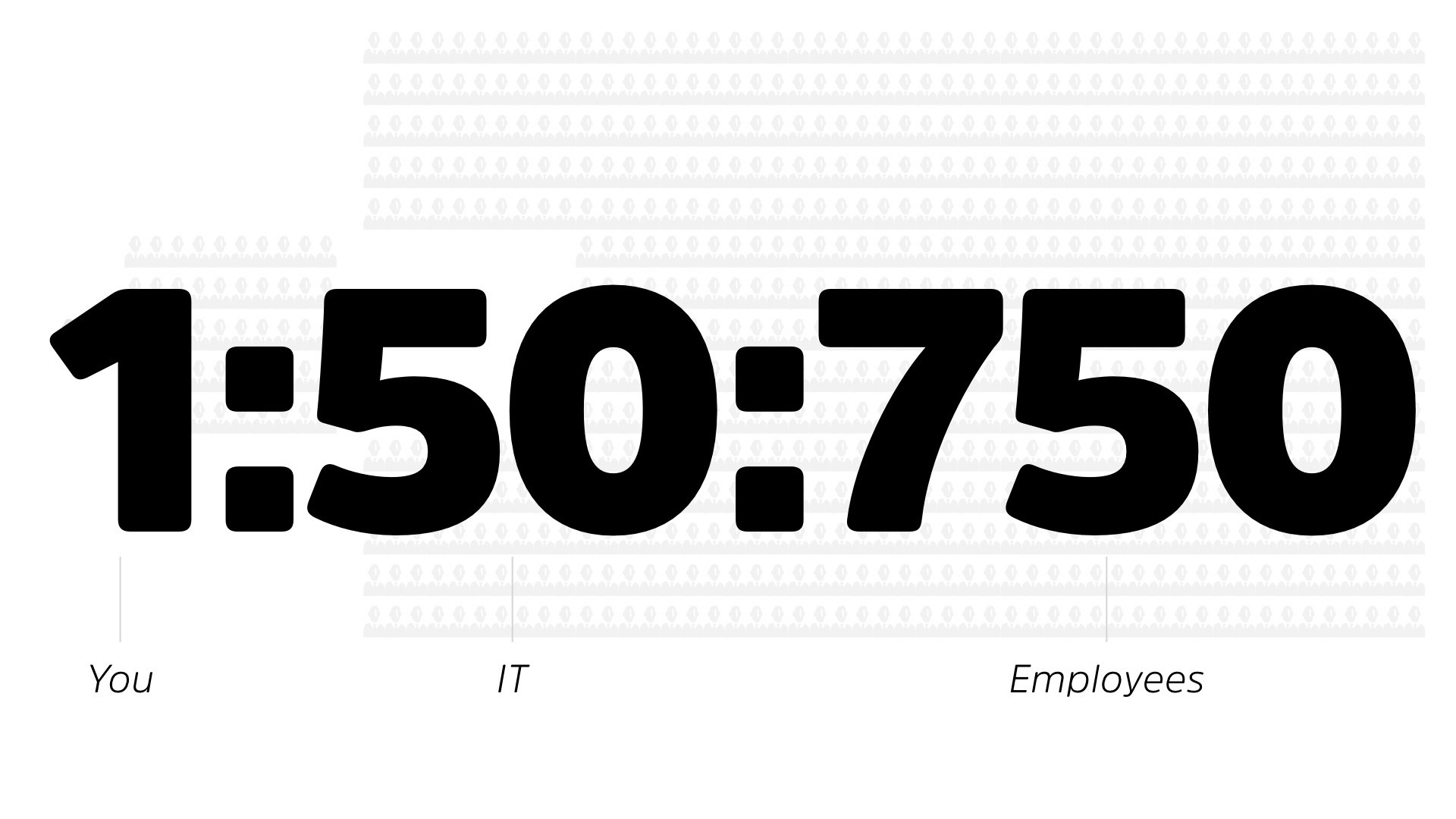

If you compare your employees to your IT people, the CIO’s organization in general, it works out to about a 15-to-one ratio.

For every 15 employees, one of them is gonna be in the CIO’s organization. Okay? That’s not a bad ratio. It makes sense. It seems to be propelling our businesses forward, but cybersecurity has a much worse ratio.

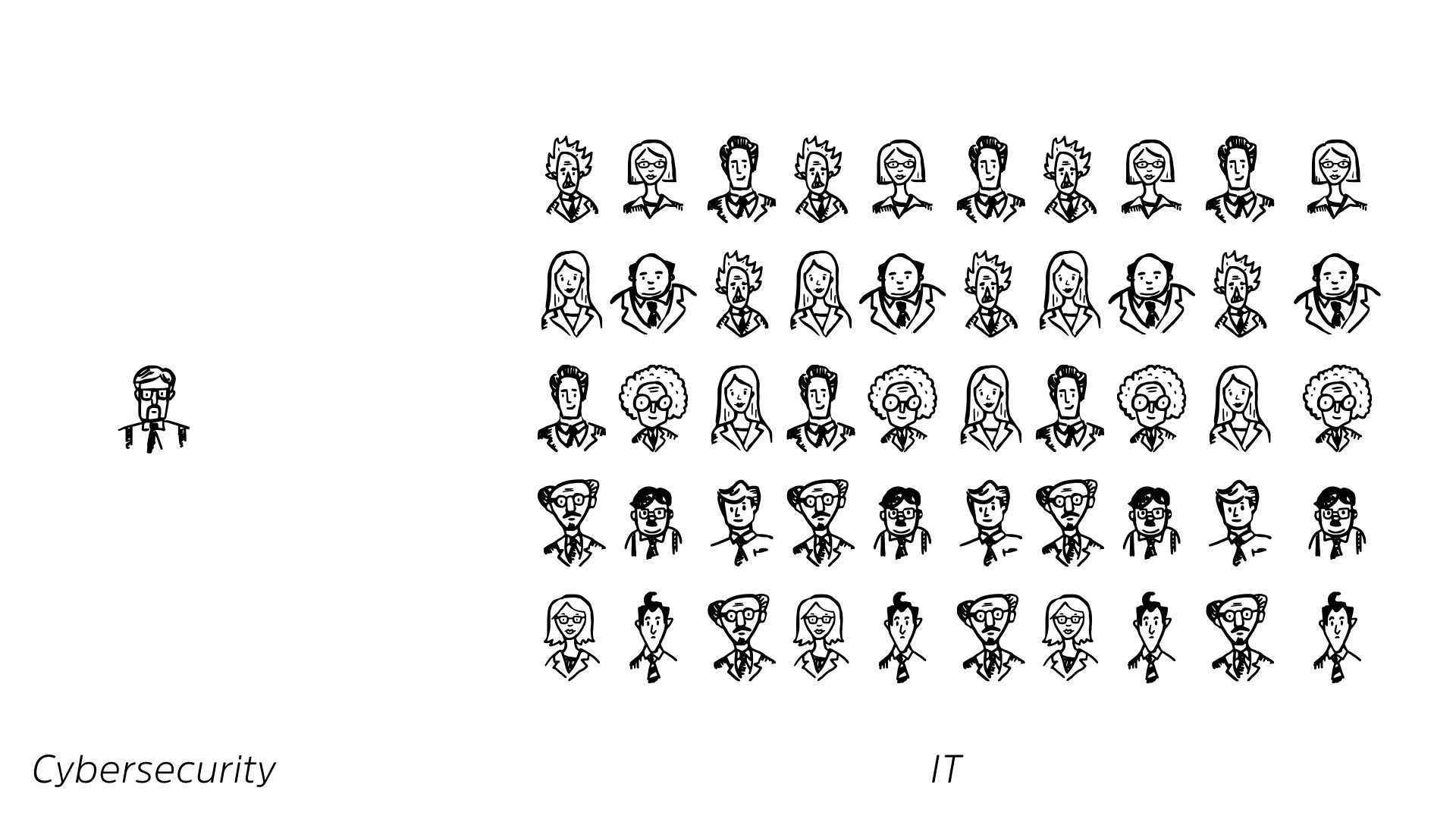

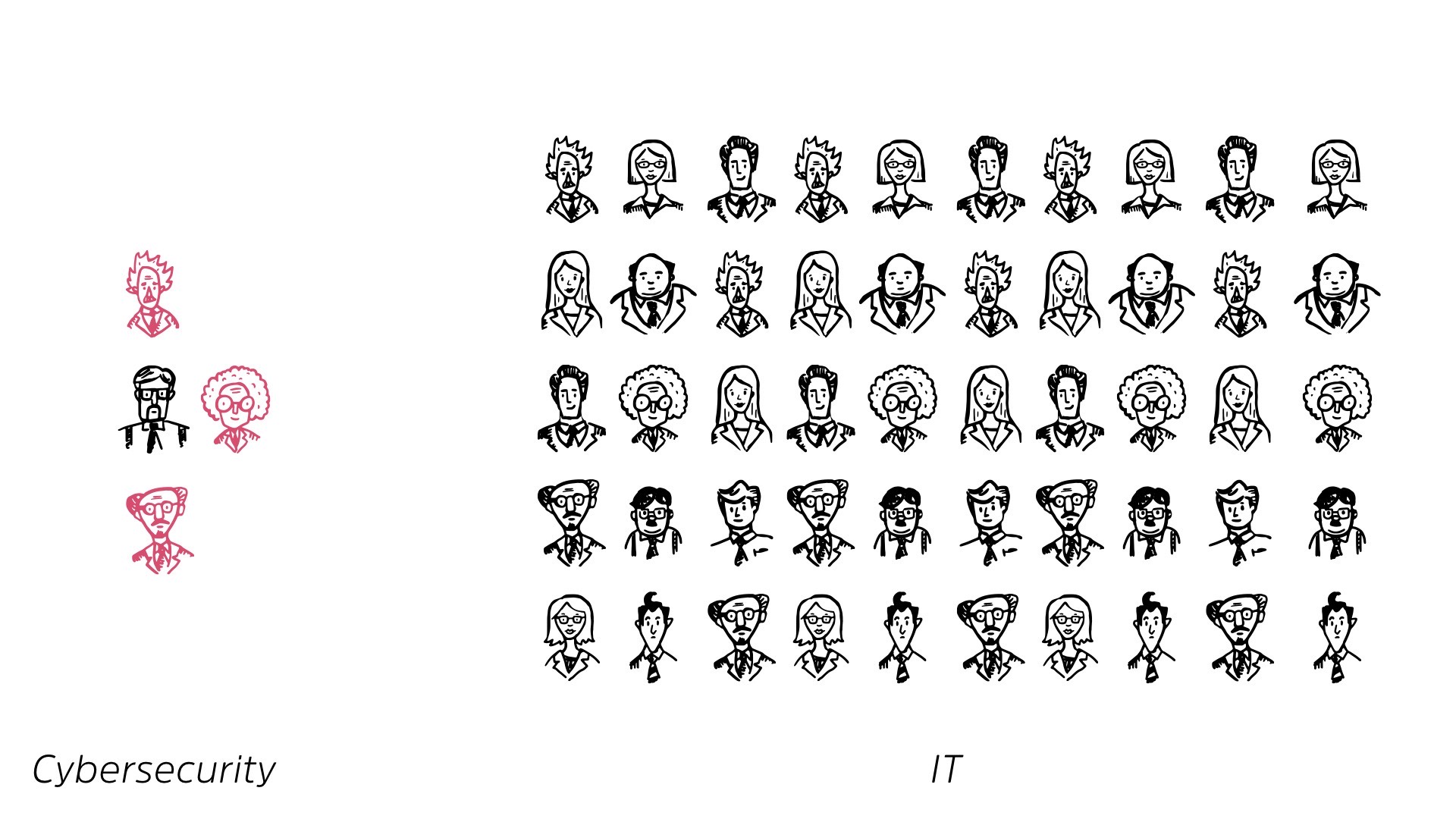

Of those IT people, for every 50 IT people, one is responsible for cybersecurity.

And because you’ve all gone dead quiet and have continued to stay dead quiet, I’m gonna be a little bit mean. I’m gonna ask everybody at the front tables to stand up for a second. Okay? So if you’re in this front row of tables, could you guys just stand up for a second?

I promise I’ll let you sit down at some point. It won’t be now, but … Okay. So everybody’s standing up here, based on the size of this room, this is your CIO organization.

Okay? So everybody in the back, you can see this. This is the CIO organization for our company in this room today. Okay?

And I’m gonna ask everybody, except these two gentlemen here, sit down. I’m sorry for picking on you. I’m sure you’re wonderful people.

These two gentlemen are responsible for the security of everything everyone in this room does. So if you turn around and wave, we’ll give a round of applause for these gentlemen, who are doing a fantastic job.

As we all are. You guys can sit down. I appreciate it. Thank you. But two people to defend, not just everybody in this room, but every work product, every piece of data, every information system that everybody in this room touches on a daily basis. That’s crazy.

That’s absolutely out of whack, right? You cannot possibly, no matter how hard you guys work, and I’m sure you work very, very hard. There is no way that that ratio holds up. Okay?

To look at it another way: this is our room. This is the CIO. That’s the cybersecurity folks. Let that sink in for a second. That’s the reality of what you’re dealing with today.

Do you think one person is capable of doing that much work? No. Right? And I think you all feel that, every day. You come in and, do you feel at the end of the day like you’ve made progress, or do you know that when you come back in the next day, there’s gonna be another mountain of work, right?

This is why: because it’s absolutely out of whack. The actual ratio, according to Gartner’s latest statistics, is one to 50 for security to IT. It’s one to 750 for cybersecurity to employees.

Okay? That’s why it doesn’t hold up.

So, lack of people, what’s the solution? Let’s hire more people. Right? Makes sense. If this isn’t scaling, let’s grab more people.

So even if you adjust the number of people in cybersecurity based on the budgetary spend, which on average is about 4 to 8% of the IT budget, you’re still not gonna have enough people.

This is four to 50 now. Okay? It’s not bad, right? One for every 12-and-a-half people. That’s decent, but it’s still not enough to do the work. So here’s the next question.
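The two ratios multiply, which is where the 1:750 figure comes from. This minimal Python sketch reproduces that arithmetic and the budget-adjusted version. The ratios are the ones quoted in the talk, and the function names are illustrative.

```python
# Minimal sketch of the staffing arithmetic quoted in the talk (Gartner ratios
# as cited by the speaker). Function names are illustrative.

EMPLOYEES_PER_IT = 15  # one IT person for every 15 employees
IT_PER_SECURITY = 50   # one security person for every 50 IT people


def employees_per_security(employees_per_it: float, it_per_security: float) -> float:
    """Employees covered by each security person: the two ratios multiply."""
    return employees_per_it * it_per_security


def it_per_security_at_budget(budget_pct: int) -> float:
    """IT staff per security person if security headcount matched its share of the IT budget."""
    return 100 / budget_pct


if __name__ == "__main__":
    print(employees_per_security(EMPLOYEES_PER_IT, IT_PER_SECURITY))  # 750
    for pct in (4, 8):
        ratio = it_per_security_at_budget(pct)
        print(f"{pct}% of IT budget: 1 per {ratio} IT staff, "
              f"1 per {employees_per_security(EMPLOYEES_PER_IT, ratio)} employees")
```

At 8% of the IT budget, the sketch gives one security person per 12.5 IT staff (the "four to 50" above). That still works out to one per 187.5 employees.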

Can you find these people?

So as an advantage, and this should at least bring smiles, please. Please bring smiles to your face, as cyber security professionals, we are in heaven right now. We are driving the ship, right? We have negative unemployment within our discipline.

This is a great thing for us as individuals. For us as team leaders, this is a nightmare. It’s an interesting balance there, right? So can you find these people? The answer is no, you’re not gonna be able to find these people. It’s really quite simple, right?

We all know about the cybersecurity skills gap, the talent shortage. Gartner uses 1.8 million people by 2020. You saw a different number from Karen: 3.1 million by 2022.

The numbers are all over the map. But roughly it boils down to this: there are at least a million open jobs right now, and anywhere from two to 3 million over the next couple of years, right?

We’re opening more and more cybersecurity jobs. We do not have enough people, right? Research continues to suggest that this is going to get worse. This is a win for us as individuals, yay. Anybody?

I’m hoping that’s from the party and not from like the tone of this talk, but I’ll take it anyway. So as individuals, this is great. As people trying to hire for a team, this is really, really hard.

Because I’ll give you another number: 770. This is the approximate number of graduates in Canada every year from cybersecurity programs, and this number has grown by leaps and bounds over the last few years.

But if you contrast the 770, compared to the two to three million job openings over the next little while, we are never gonna catch up by educating more people, right?

We need to continue doing this. We need to continue our efforts to improve university and college programs, but the scale just isn’t there.

So can you find these people? Probably not.

Even if you could, though, can you keep these people? I know I’m going dark again. I’m really sorry. Whether you can keep these people comes down to two things. First, do you have a positive work environment?

Well, if they’re always overwhelmed by work, it might not be that positive, but you can counter that with a positive culture. Unlike this talk, you can keep things light and fun and happy at work. But second, can you pay for them?

Because we have negative unemployment, which again is a win for us as individuals, can you pay more than the person next to you? If somebody is good, they’re gonna be poached because of this gap. So it’s not only can you find them, but can you keep them, right?

That’s a tough question.

Next thing is, will these people actually help? And I don’t mean that as a slam against the individual, but if you can’t hire enough people to just throw bodies at this problem, if you get an extra person on the team, are you gonna actually make a dent in the work?

Are you gonna make a difference in the outcome? It’s a key question. It’s one I don’t have an answer for, but it’s an interesting challenge.

So I’d like to say we’re moving on to happier stuff, but we’re probably not.

Number one problem. Anybody?

Number one problem? You can at least give me something, people. Number one problem.

Audience: Shortage of personnel.

Shortage of personnel, absolutely. But phishing is the number one problem when it comes to attacks. Okay?

So we look at attacks.


The Verizon DBIR this year found that 92.4% of malware attacks start through email. Okay? It’s an oldie but a goodie, right? It works. Email is essentially a giant postcard exchange system, yet it is critical to how business is done around the world.

Of course, cyber criminals are going to exploit it.

So we respond with phishing awareness campaigns. Does anybody run a security awareness campaign around phishing? Excellent.

Joking. It’s not excellent. I’m sorry.

Audience: [laughs]

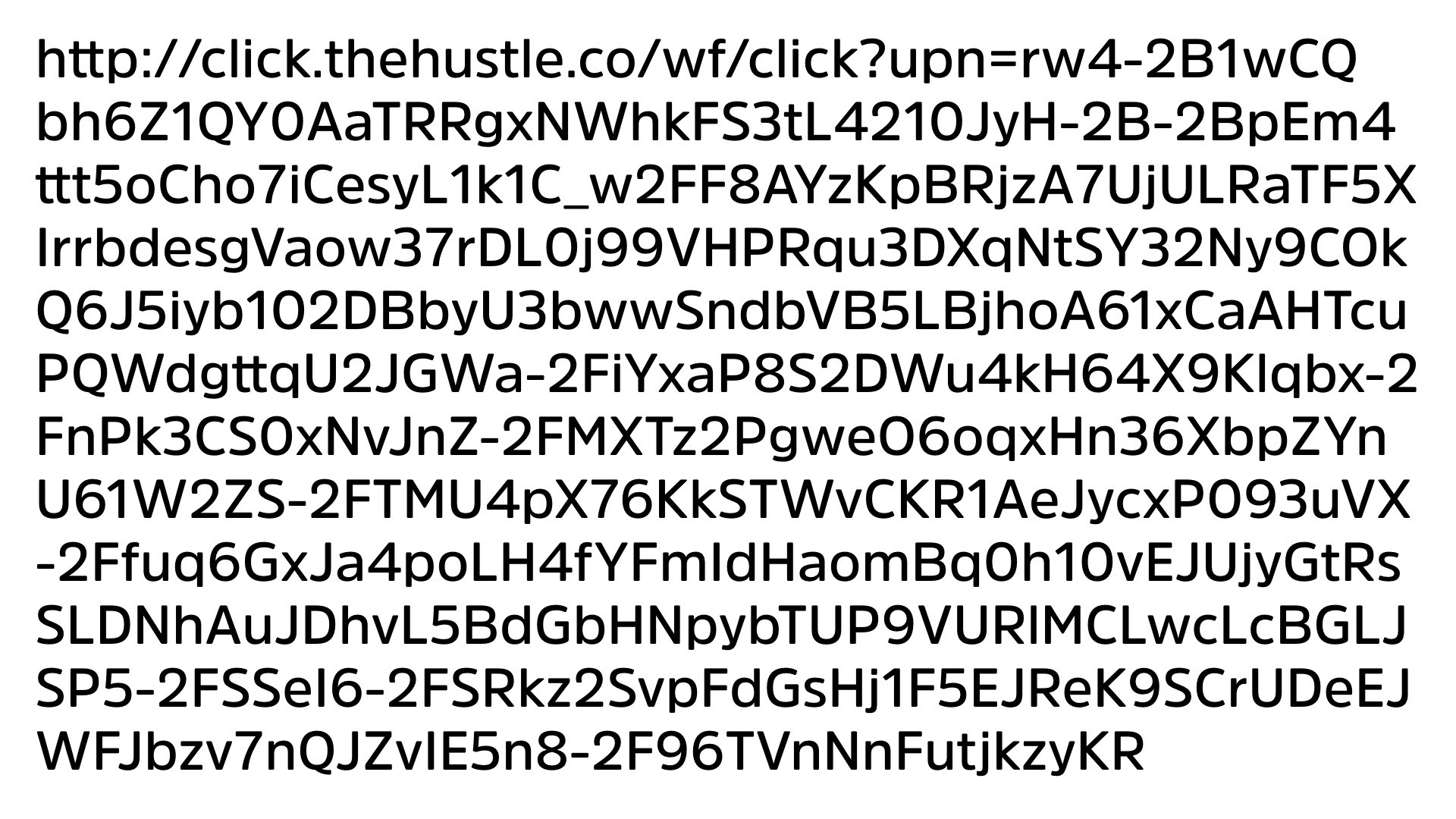

The reason why, can you tell me if this is a valid link or not? Anybody? Random guess? Is this a valid link?

Who knows, right? This was actually sent to me last night. It’s a marketing link. So if you ever wanna know who the worst offenders are at making your phishing campaigns ineffective: marketing.

Well, you know what? I’m not even gonna apologize to the marketing folks if they’re in the room. Marketing is the number one culprit for absolutely atrocious, horrible URLs sent around the world, right?

Because everything’s about tracking; everything’s designed for tracking. From a privacy standpoint, that’s an issue, but also, how do you teach someone that this is fine to click on, right? I’ll give you another view.

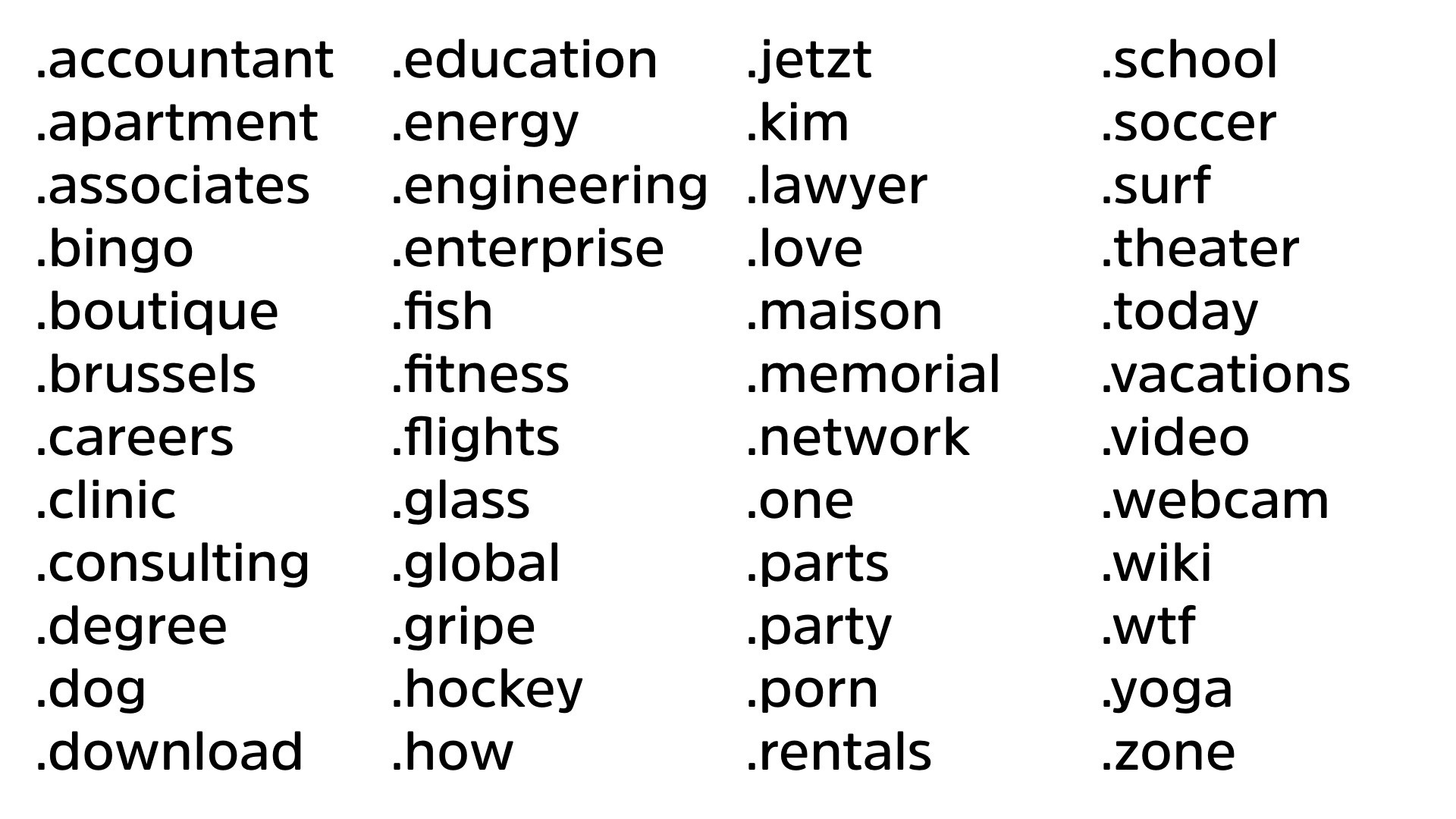

Can you spot the fake top level domain in this list? I’ll give you a second. Tell me which one is not a valid top level domain.

Fernando: It’s a trick!

Absolutely. Fernando’s right. It’s a trick question. These are all valid top-level domains. Okay? And that’s fine. You might want a dot webcam.

Well, it’s your business. I’m not gonna ask what you’re doing on dot webcam. Right? My favorite: dot WTF. Fantastic top-level domain.

Okay? These are all valid top-level domains, and there are hundreds more. Since ICANN opened it up, more and more keep appearing. So our current approach of phishing awareness campaigns has two major flaws.

The first is that teaching people what’s valid and what’s not valid is now almost impossible, because of marketing links like this and because of the massive spread of top-level domains, right?

And you see businesses using these all the time. Very challenging.

The second is that, asking people not to click on a link, runs counter to the whole point of a link.

You’re not gonna get traction asking people not to click on a link when the only purpose in life of a link is to be clicked on. It’s just not gonna happen. Right?

This is where automated security controls in the backend, scanning email coming in and scanning web traffic going out, are really where you wanna focus, right?

Because if you tell people, “Hey, you know that great link that you got in an email? Don’t click on it. Don’t click on any link,” you’re just not gonna be paid attention to.

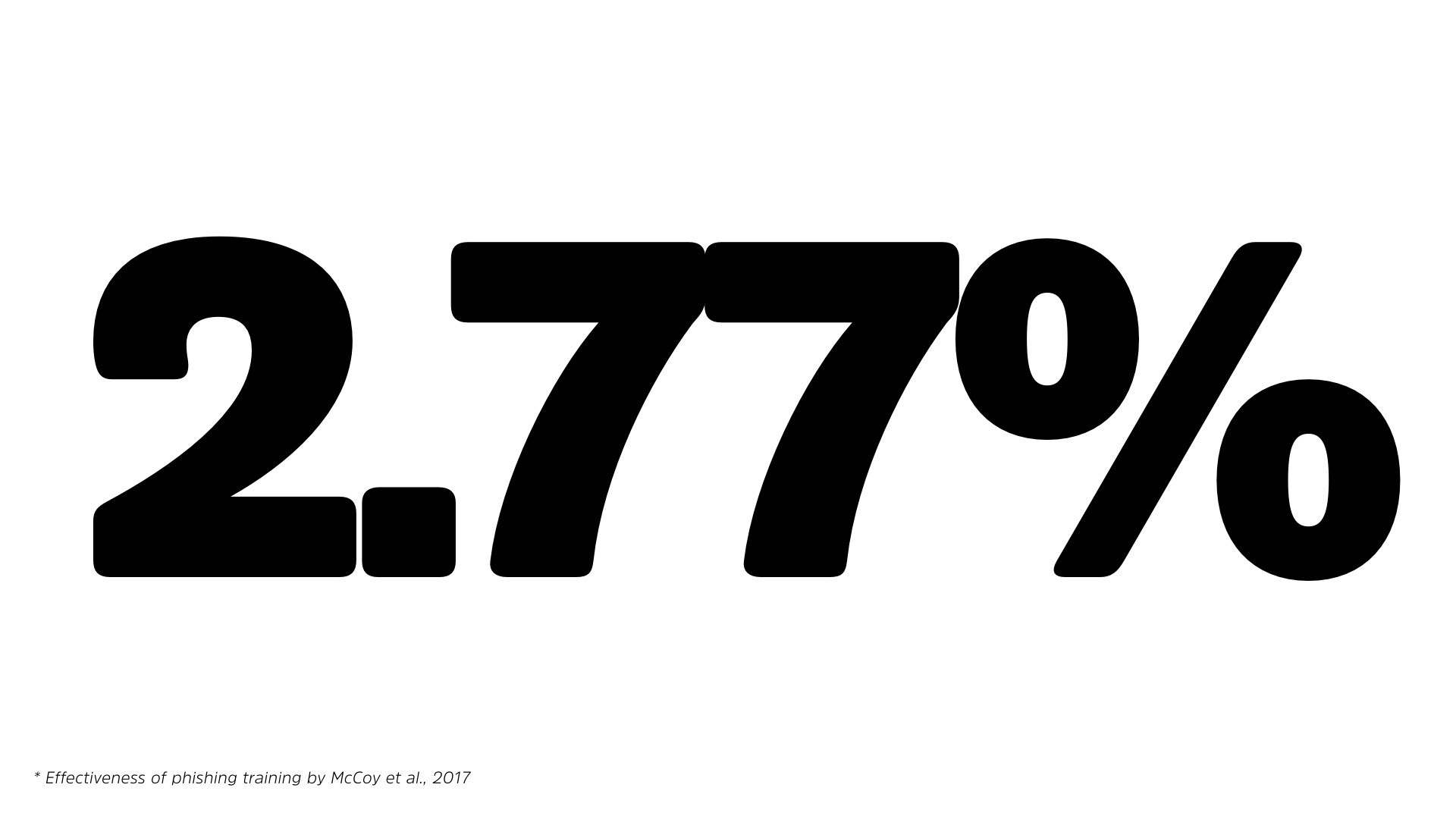

In fact, there was a study done by NYU.

They looked into the effectiveness of phishing awareness campaigns, and they found that the best ones improved the rate of people not clicking on links by just under 3%.

So that 92.4% becomes 89 point something. Okay? That’s still a massive problem. It’s essentially a waste of breath. It actually leaves your organization worse off from a security perspective, because people will pay less attention to you when you’re talking crazy.

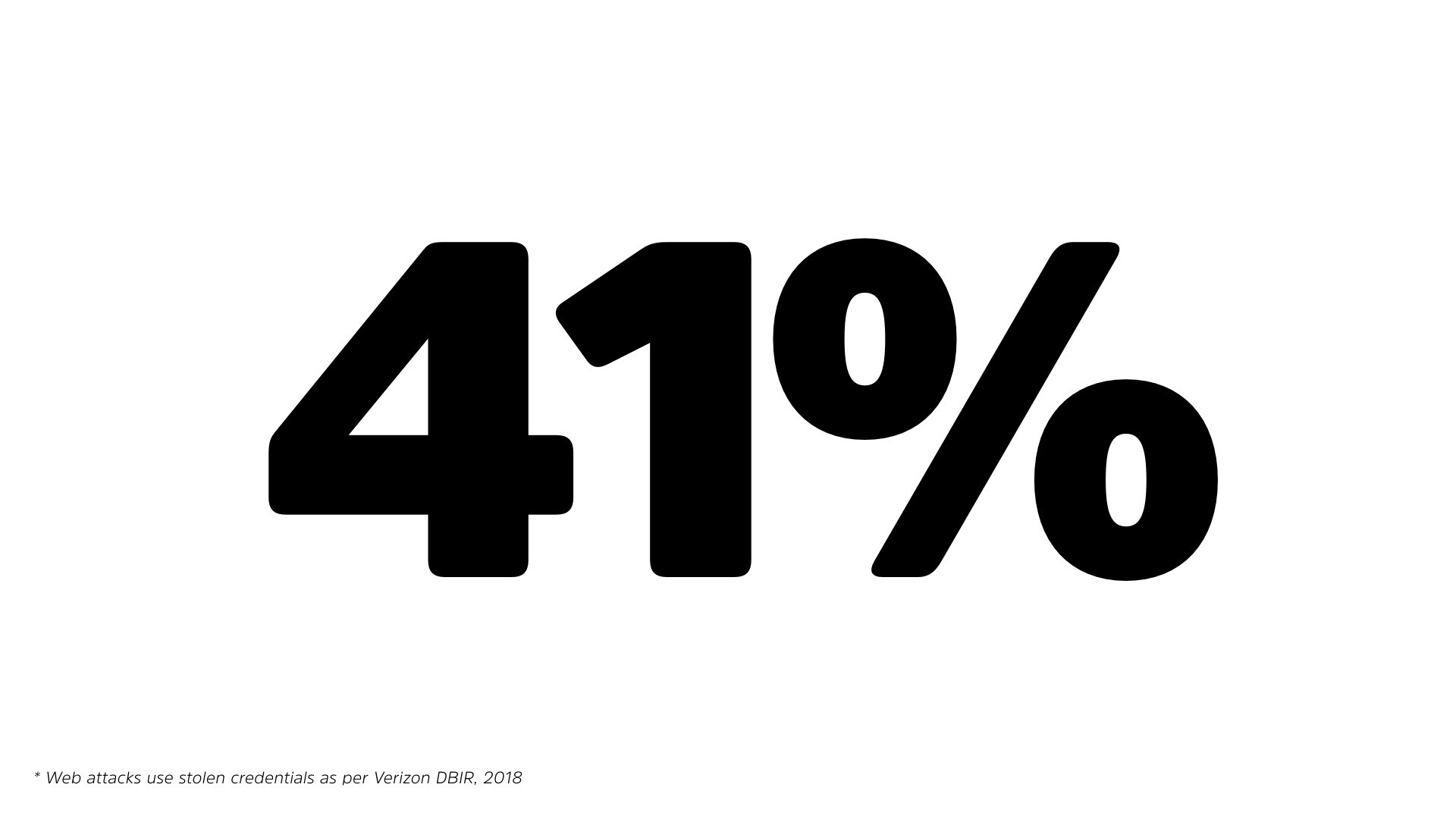

Another problem: web attacks. A huge issue with web attacks, again according to the Verizon DBIR, is credentials. 41% of web attacks leverage a stolen set of credentials, whether they were stolen directly or exposed in a third-party breach.

So what do we do in response, as security professionals? We run password awareness campaigns. Now, I would ask you to show your hands, but I think you’re probably gun-shy after I asked the last question about phishing.

But I’m just gonna assume that everybody who said they run an awareness campaign around phishing also does the same around password awareness, right?

And that’s not necessarily a bad thing, but you need to realize that, historically, we’ve done an absolute crap job when it comes to passwords. You heard it from Karen yesterday.

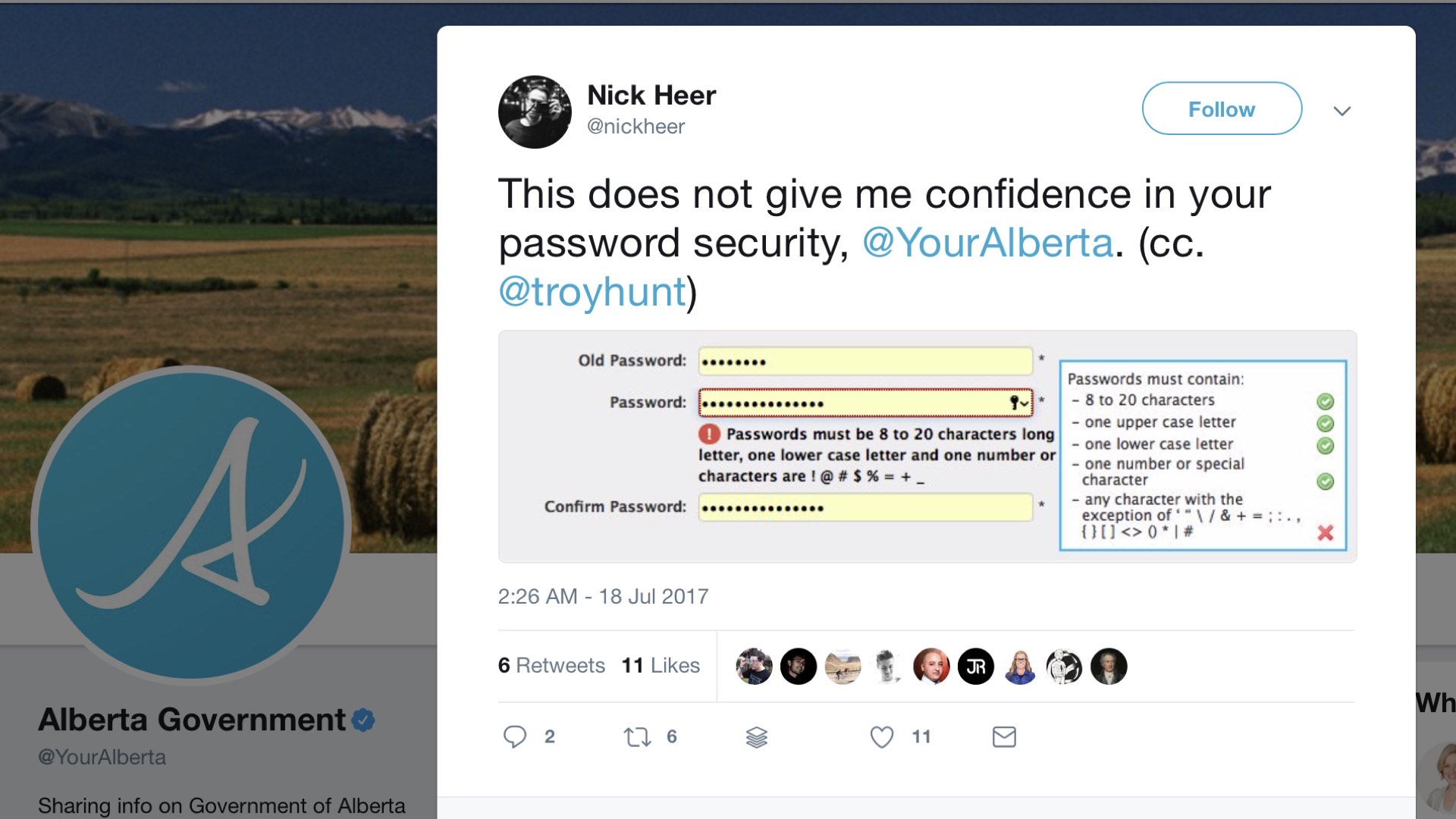

She broke down some of the challenges around it. I’m gonna highlight them again. And this is not specifically to call out Alberta, though in Ontario I think I have a really low risk of having Albertans in the room, but still, it’s a good example.

This was linked from one of Troy Hunt’s many blog posts on passwords. He runs Have I Been Pwned. The important part here is what the password must contain.

This is the classic, right: eight to 20 characters, with uppercase, lowercase, a number, a special character, any character with the exception of these. That’s an impressive one.

We know that generates worse outcomes. Now, thankfully, NIST finally updated its guidance last year to say that a password should be as long as possible, right?

You should use passphrases. You shouldn’t worry about complexity rules like this; length is the number one determinant. The problem is, while NIST updated its guidelines last year, math has not changed significantly in the last year.

I’m not a mathematician. I’m a forensic scientist by training, but I asked a mathematician friend of mine and he assured me that there have been no significant changes in how basic probability works over the last year or two.

We have known for an extremely, extremely long time that our password advice does not hold up to mathematics, let alone to psychology.
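To put a number on the claim that length beats complexity, here is a rough back-of-the-envelope sketch in Python (not part of the talk). It measures brute-force search space in bits as length × log2(alphabet size), and it assumes every character or word is chosen uniformly at random. Real people rarely pick that way, so treat these figures as upper bounds. The function name and the example policies are illustrative assumptions.

```python
import math


def search_space_bits(length: int, alphabet_size: int) -> float:
    """Bits of brute-force search space for `length` symbols, each drawn
    uniformly at random from `alphabet_size` possibilities."""
    return length * math.log2(alphabet_size)


# Illustrative policies (assumptions, not figures from the talk):
examples = [
    # 8 characters from the 94 printable ASCII symbols: the "complex" rule
    ("8 chars, 94 symbols", search_space_bits(8, 94)),
    # 20 lowercase letters: long but "simple"
    ("20 chars, lowercase only", search_space_bits(20, 26)),
    # 5 words drawn at random from a 7,776-word (Diceware-sized) list
    ("5 random words, 7776-word list", search_space_bits(5, 7776)),
]

for label, bits in examples:
    print(f"{label:32} ~{bits:.1f} bits")
```

The 8-character “complex” password comes out around 52 bits, while 20 lowercase letters come out around 94. Length multiplies the per-character term, whereas a bigger alphabet only nudges it, which is the mathematical intuition behind treating length as the main determinant.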

Yet we continue by rote to tell people that their password should be at least eight characters, contain an uppercase, a lowercase and a special character, even though that generates poor outcomes.

And we’re paying for it, 41% of web attacks are using these types of credentials.

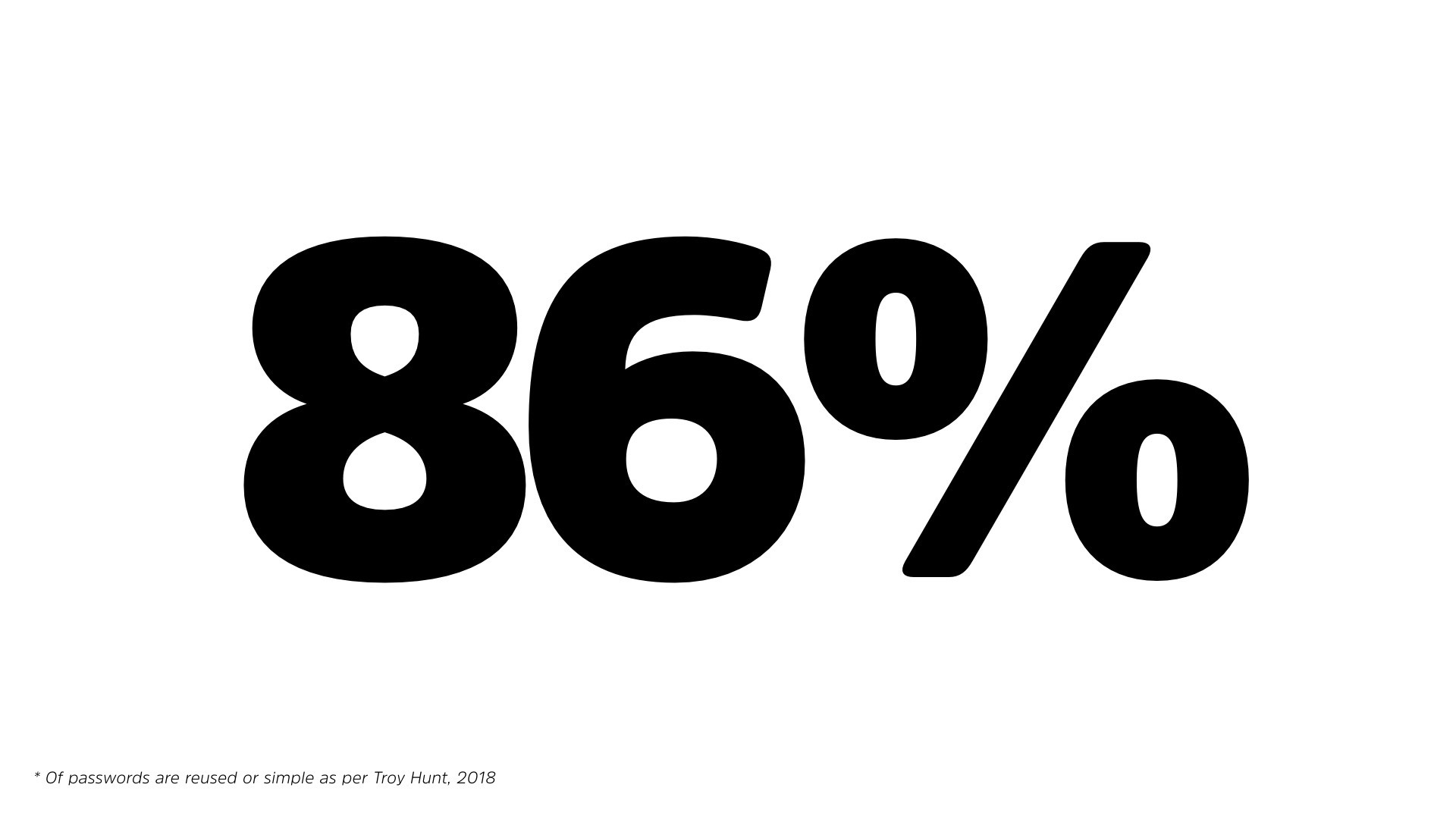

In fact, if you go back to Troy’s statistics, he analyzed the Have I Been Pwned data, over a billion passwords. He found that 86% of all the passwords in all of the dumps he’s received were either simple or duplicated.

So in other words, the vast majority of the time, people are either reusing passwords regularly or picking absolutely horrible passwords.

And why wouldn’t they pick horrible passwords? We’ve been absolutely no help in educating them on how to pick strong passwords. And we make it a pain in their you-know-what by making them log in all the time into multiple systems.

A huge pet peeve of mine is when systems won’t let you paste in passwords for some reason. Apparently, that’s a security advantage. There is no logic to that.

Another fantastic example, does anybody know why when you type in a password, it shows dots or stars instead of the actual characters?

I know you know, Fernando. But would you like to enlighten us?

Fernando: Shoulder surfing!

Exactly. So Fernando said the answer is shoulder surfing, and that’s very, very true.

The reason we hide passwords on screen is shoulder surfing. So you would logically think that, in scenarios where shoulder surfing is not an issue, or where the user is willing to accept the risk of shoulder surfing, you would provide them an option to see the password.

Because we know that mistyped passwords are a massive issue from a service delivery perspective, and they’re also part of the reason why people pick the same password and use it over and over again.

We are starting to see sites, amazon.com is a good example, that let you check a box to see your password, but they provide absolutely no context as to why you would want to check that box to show your password or uncheck it to hide it, right?

That’s a huge issue.

They let you do the right thing, but they don’t tell you why. So you’re not necessarily going, “Okay, you know, it’s just me on my phone. I’m all alone. I can look at the password to make sure I type it in. Or better yet, just paste it in.”

So again, we handle this very, very poorly. So this leads us to a common feeling when I talk to security teams. Everyone kinda goes, “Ugh, users,” right?

They’re the source of all our problems. If we didn’t have users, we wouldn’t have any problems at all, right?

We could make the best systems, they’d be the most secure. It would be a wonderful, wonderful land full of unicorns and rainbows. Unfortunately, that’s led to an us-versus-them kind of attitude, right?

We are dealing with a breach because this user clicked on that link. That attacker gained a foothold in the network because Mark set a bad password, or he reused his password, right?

The problem with saying that users are the problem is that it’s a horrible thing to do.

I’ll give you another statistic. 100%. 100% of your organization’s profit and revenue is driven by users.

You cannot think of them as the enemy, they are the reason you have a job. If you think of them as the enemy, if you go us versus them, it leads to a whole bunch of bad decisions. It hurts morale. It’s frustrating because, you know what? People aren’t gonna change.

Human nature is very immutable; we see these patterns time and time again. So if you need to think us versus them, make it us as an organization, as a community, including our users, against them: malicious actors, people trying to attack us with purpose.

Okay. Let’s talk containment. This is a scenario that I think is gonna ring true with a lot of folks.

You get someone from system delivery coming and saying, “This system needs to be deployed,” right?

They come to you as the CSO, or to you in the security organization. The standard response from us as security professionals is, “When? When does this need to be deployed?” “Monday.”

We’ve all been there, right? It’s that last minute, “Hey, guess what? I pitched this to the board. They’re super happy about it. We’re deploying it on Monday.” “Deploying what? I’ve never heard of this. I’ve never been involved,” right?

Security is regularly ignored. Sometimes, if you’re in a formal project management process, we’ll be looped in at certain gate points, but we’re always after the fact. And that leads to some really interesting things.

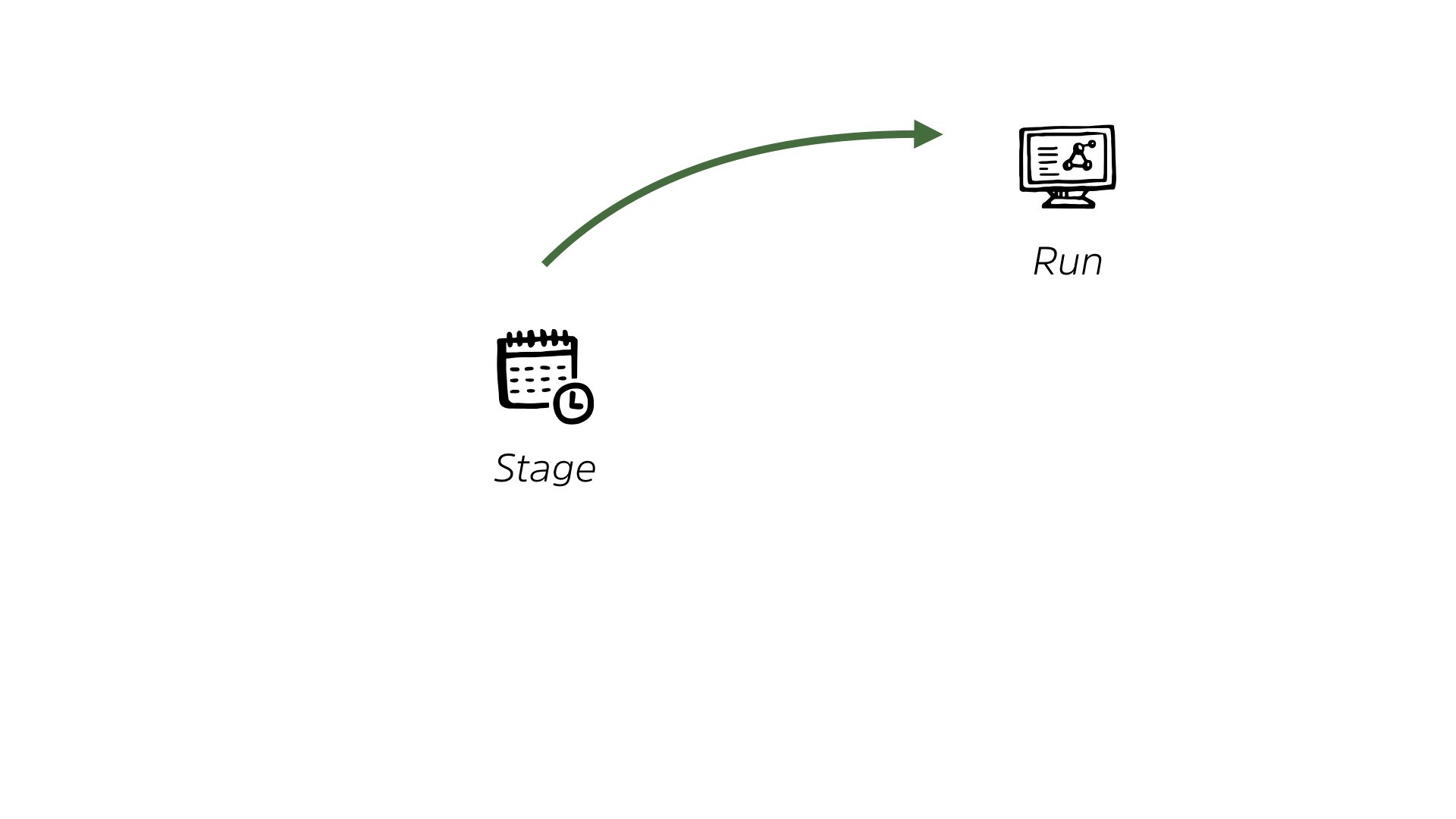

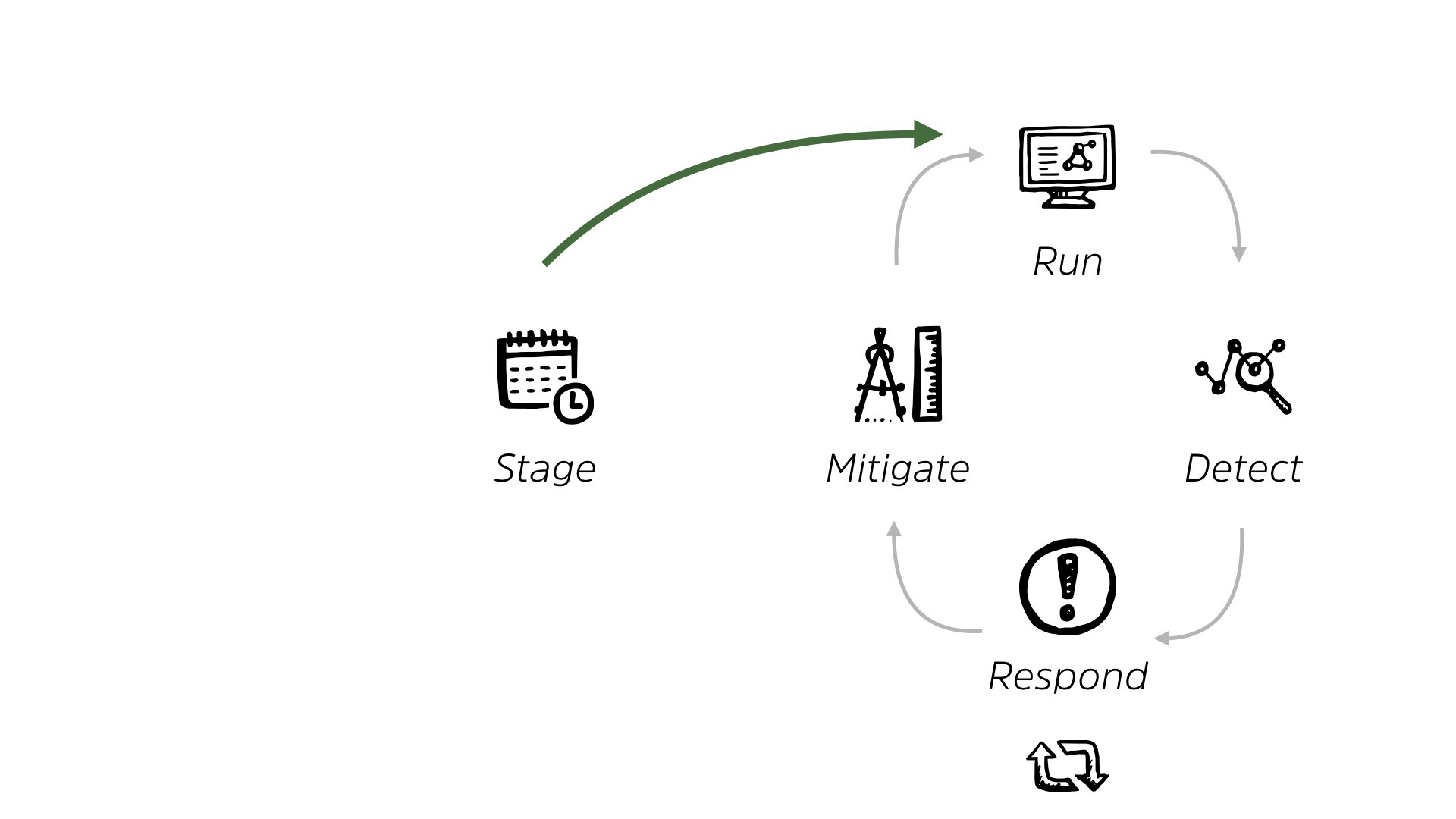

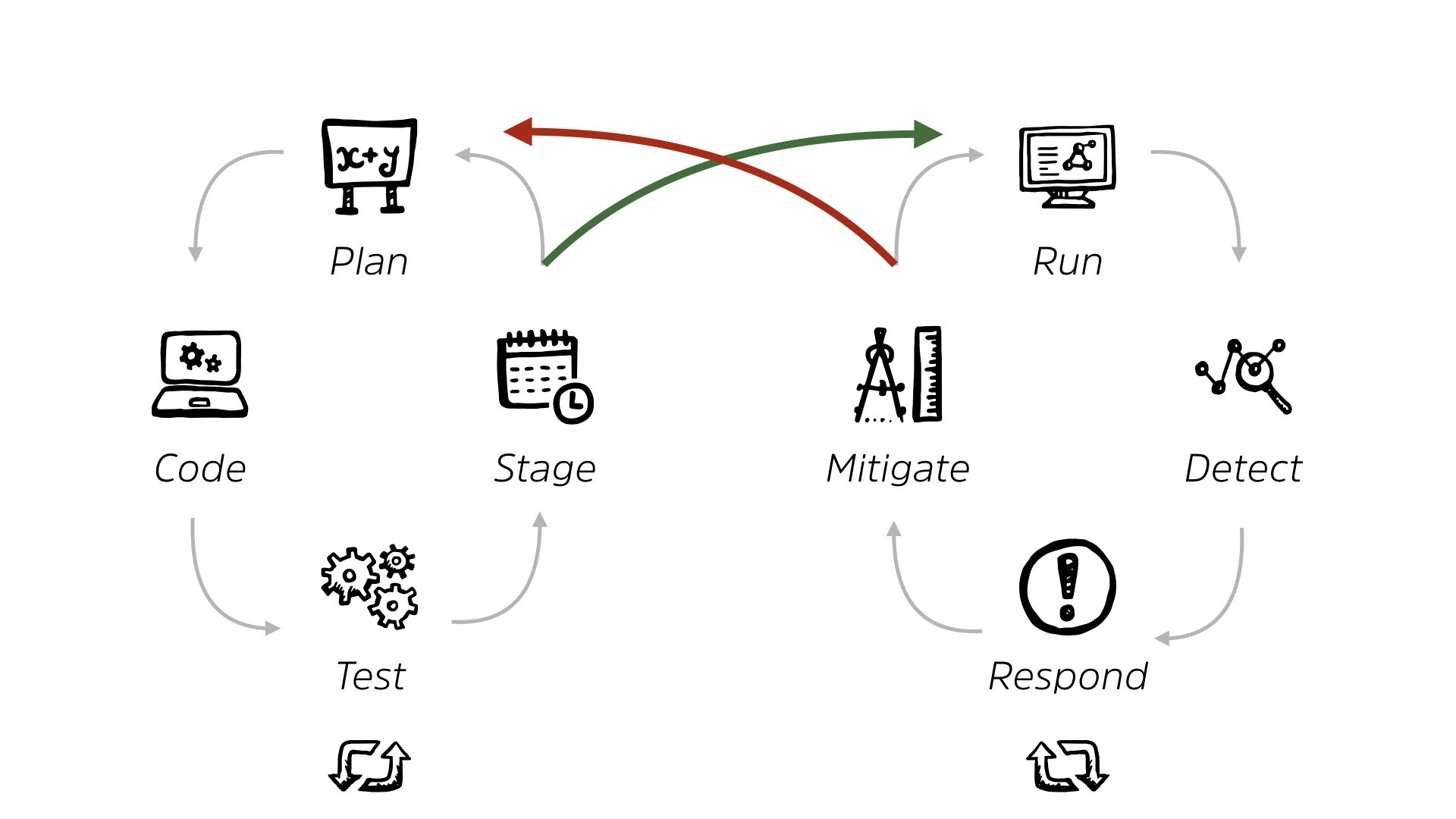

So essentially, we live in this world where a system is ready in staging, whether it’s home-built or off-the-shelf, same kind of thing. And it’s given to us before it runs, or after it runs, which happens just as often. And then we live in this loop, right?

We live in this loop of looking at, detecting, responding and mitigating. And we are very comfortable here. This is how we do 99% of security work today, right? We get a system, we put some controls in place, we look for issues from those controls.

We respond when possible, we mitigate, and we continue running around chasing our tails. It’s just how we do it. It’s an organizational output, based on how things are structured.

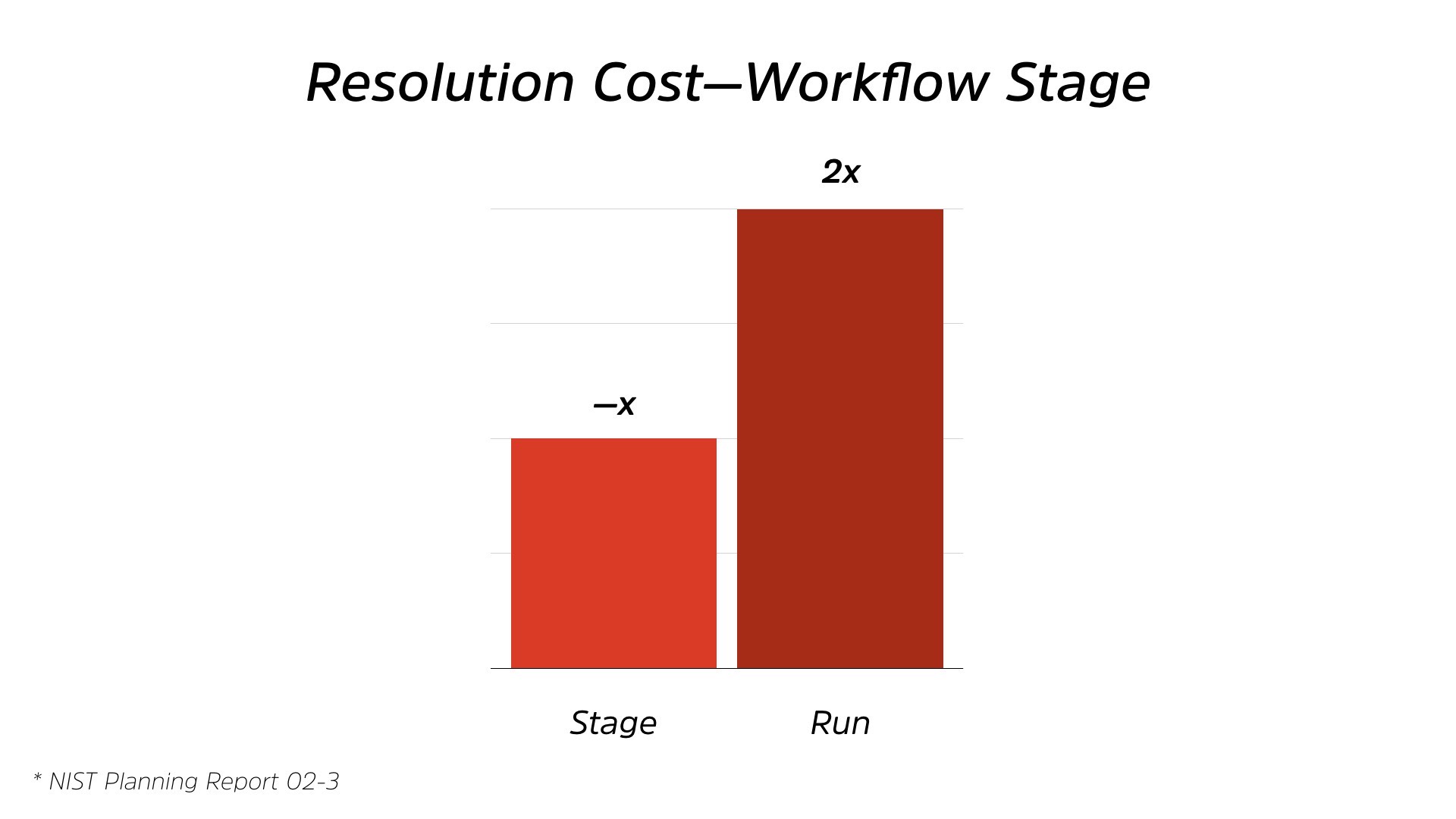

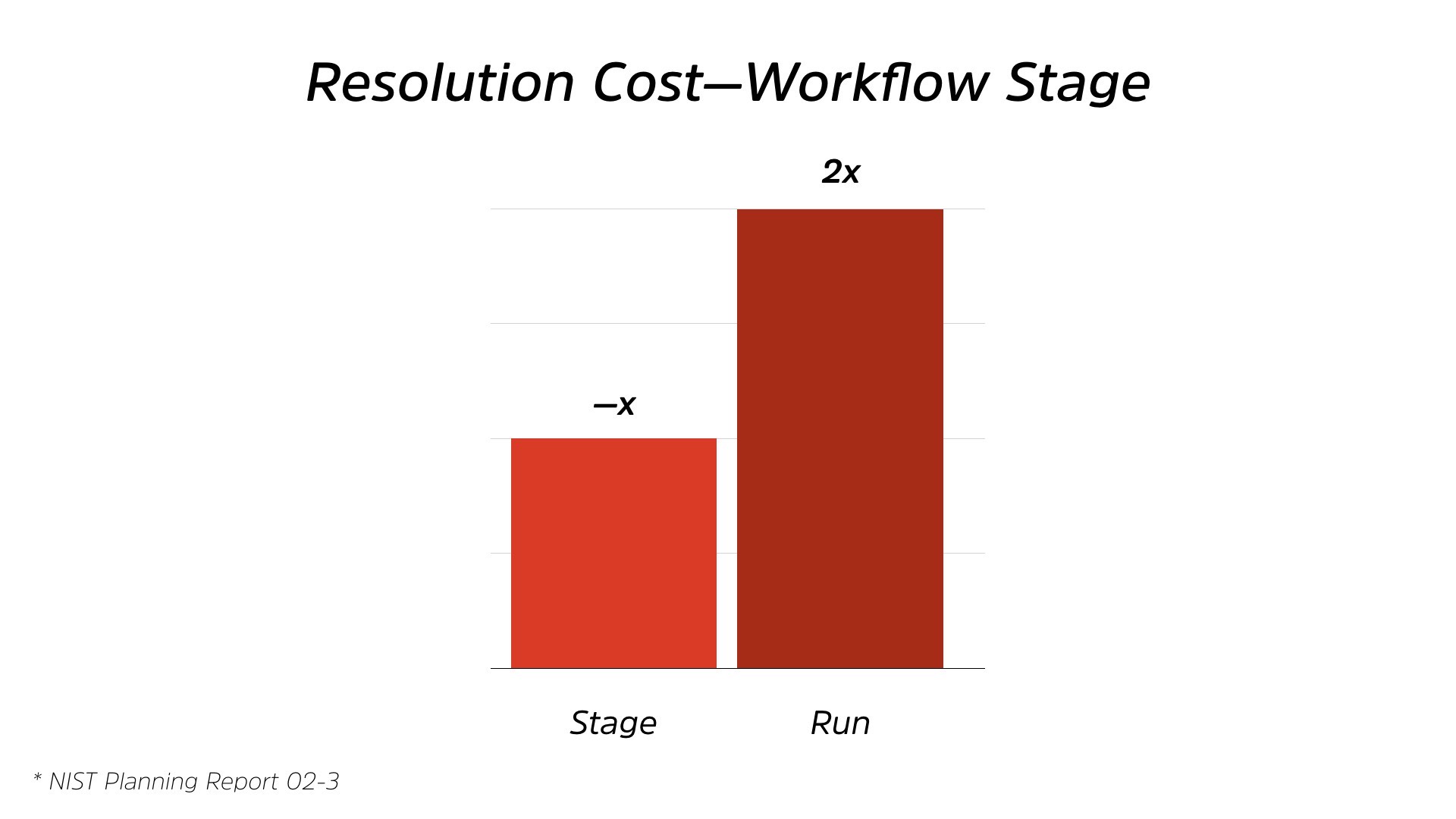

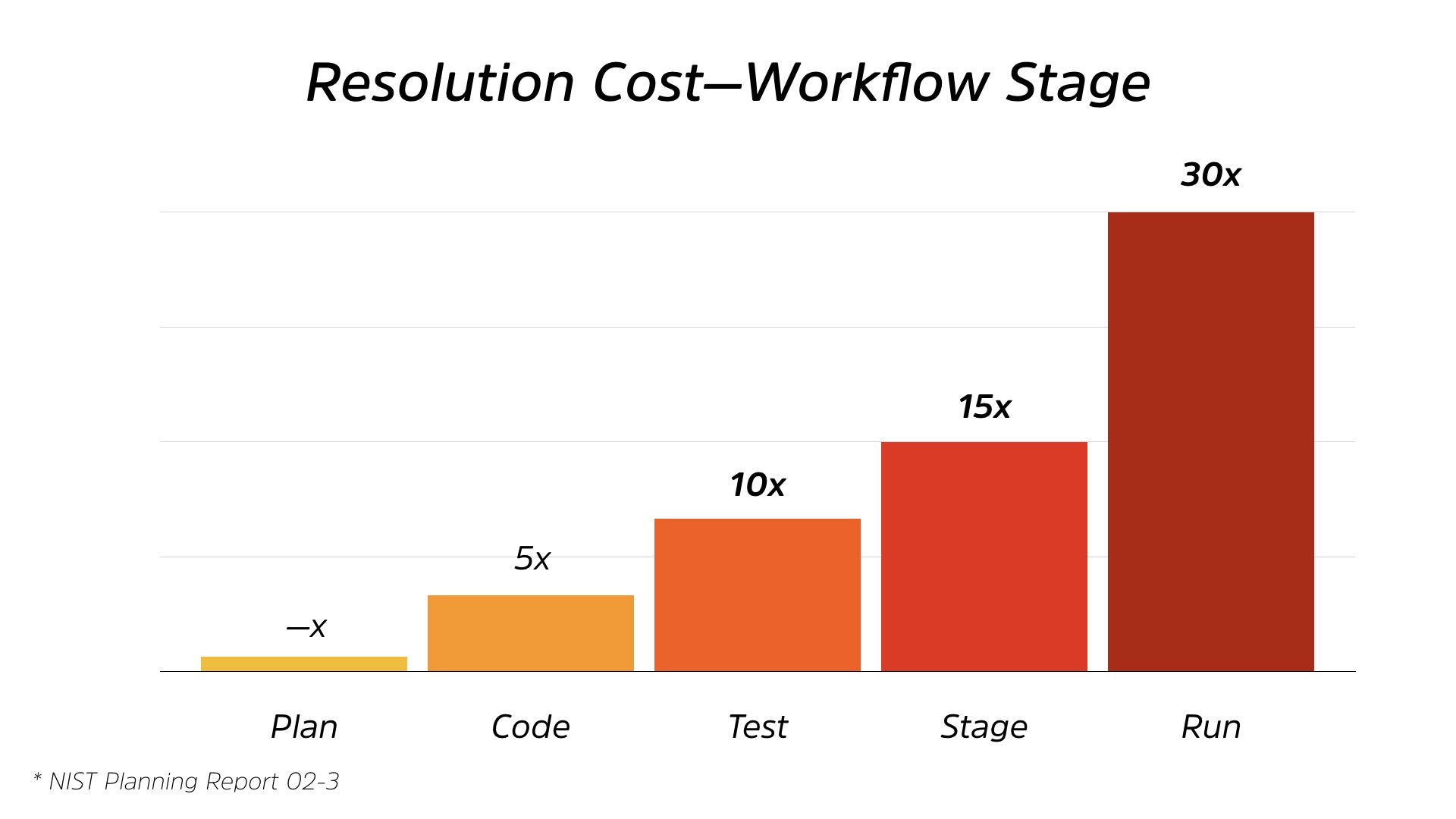

The interesting thing is when you start to look at the cost of that. This is based on a NIST report from 2003. There’s a ton of different reports over the years that show us these quality issues, and most of the time security ends up being a quality issue.

From staging to running, you’re paying double the cost. If you’re fixing an issue in the run stage, when it’s been deployed to production, it’s twice the cost of fixing it in staging. So if you could fix it before it hit users, it would be half that cost, right?

But we willingly pay the 2X costs, and I say willingly because we’re ignorant about that cost and we just pay it automatically. That’s just how we work.

So the other output of this is that a late stage risk assessment, looking at a system when it’s about to be deployed on Monday, limits our options. You can’t change how that system works.

You can’t change the technology selection in that system; you need to figure out how to put a box around it, right? It goes back to our perimeter concept.

Even in a micro-perimeter, you’re dealing with, “Okay, I have this set thing. How can I defend what’s going into it and what’s coming out of it? How can I add some sort of security control to what’s essentially a black box?”

It’s a really difficult thing to do, right? Now, and I work for a vendor, remember that? We have an entire expo hall full of companies. And if you’ve ever been to RSA, you’ll see multiple expo halls full of companies that are trying to help address this problem, right?

There’s any number of technologies that you can list off that people are trying to go, “Okay, we can help box this in based on the fact that you’ve been given something,” and said, “Run with it.”

It’s all bolt on, right? We’ve all heard that term before, bolt on security? This is the world we are forced to live in because of a series of choices.

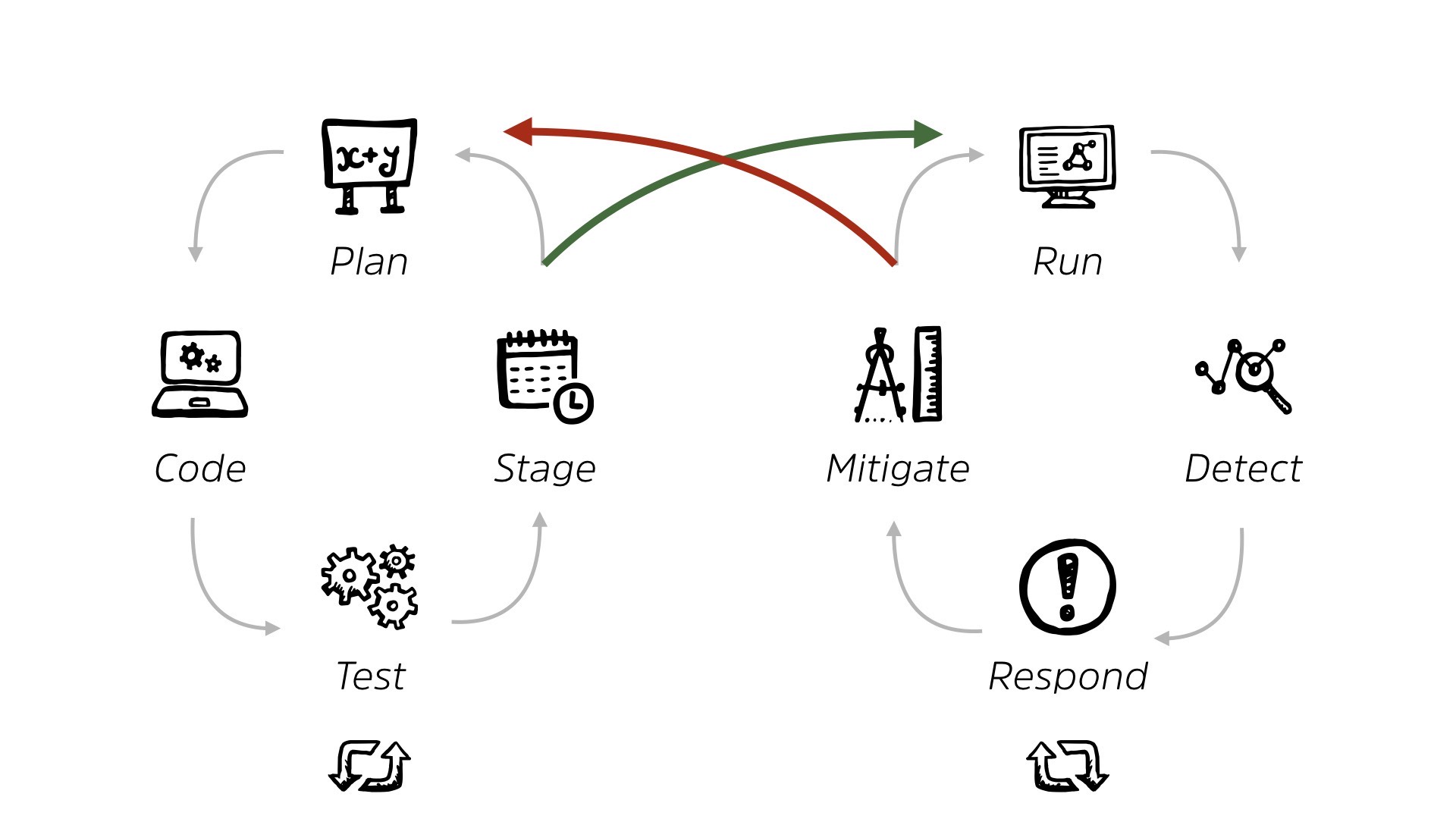

So it turns out though that there’s an entire other stage of IT systems development and deployment.

People plan it, they code it, and they test it. If they’re not coding it directly, they’re configuring it from off the shelf. This is an area where security traditionally has had little to no impact, because we’re simply not looped in.

Nobody invites us to the party. We’re sitting off in the corner sad, looking at a stream of alerts in our SOC and our logs, right?

Nobody wants us. I think we’re nice. It’d be nice if we were invited to the party. We could bring something. The challenge is, when you look back to this resolution cost, it’s actually much bigger than that.

When you look at the whole thing, taking the planning stage as your baseline cost, it steadily increases, and we’re actually not paying double the cost. We’re paying 30 times the cost of resolving security issues.

So if we get involved right from day one as opposed to the end of it, “Hey, run this by Monday,” we’re reducing our cost by a factor of 30.

Easy example. If you talk about encrypting data at rest, right, or encrypting data in transit.

Let’s use data in transit. If you’re talking to the developers as they are deploying the system or people as they’re procuring it and you make that a set requirement, it’s far easier to implement, right?

Most code frameworks today allow you to create encrypted connections, normally with just an option flag, right? An extra argument into a function here, a certificate to point to, and you’ve got encrypted communication everywhere.

As opposed to looking at it after the fact and saying, “Okay, I know that all these applications work in plain text. I’m gonna need point-to-point load balancers that encrypt that.”

Or, “Between these two routers, I’m gonna apply network level encryption.” And those can be great additional controls. I’m not slagging those controls. They’re a valid thing, but wouldn’t it be far easier and cheaper to do it right from the get go, right?
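As an illustration of “an extra argument into a function here,” here is a minimal Python sketch using only the standard library’s `ssl` and `socket` modules; it is not taken from the talk. The same hypothetical connection helper either returns a plain-text socket or, with one flag, a TLS-wrapped socket that verifies the server certificate. The helper names and the `encrypt` flag are hypothetical, and the TLS 1.2 floor is an assumed policy rather than a requirement from the talk.

```python
import socket
import ssl


def make_tls_context() -> ssl.SSLContext:
    """Client-side TLS settings built on the standard library's secure defaults."""
    # create_default_context() verifies the server certificate against the
    # system CA store and checks that it matches the hostname.
    context = ssl.create_default_context()
    # Assumed policy for this sketch: refuse anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def open_connection(host: str, port: int, encrypt: bool = True) -> socket.socket:
    """Open a TCP connection to host:port, wrapped in TLS when encrypt is True."""
    sock = socket.create_connection((host, port), timeout=10)
    if not encrypt:
        return sock  # plain text on the wire
    try:
        # server_hostname enables SNI and hostname verification.
        return make_tls_context().wrap_socket(sock, server_hostname=host)
    except OSError:  # ssl.SSLError is a subclass of OSError
        sock.close()
        raise


# Usage (needs network access, so it is left commented out here):
# with open_connection("example.com", 443) as conn:
#     print(conn.version())  # negotiated protocol version
```

On the server side, the equivalent “certificate to point to” step is typically a call to `SSLContext.load_cert_chain()` with a certificate and key file. Either way, the point is that the change is a few lines when it is made during development, rather than an extra network appliance added after deployment.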

And that repeats time and time and time again. Think about passwords. If you could talk to a developer who’s creating a password system or a login page and tell them, “Here’s a great way to give advice to people about passwords. And please, please let them paste in passwords.”

How much easier would that be?
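The advice you might give that developer could be captured in something like the following minimal Python sketch. It is not from the talk: the function name, the thresholds and the toy breach set are hypothetical. It loosely follows the direction of NIST SP 800-63B, which calls for a minimum length, no composition rules, checks against known-compromised passwords, and allowing users to paste.

```python
from __future__ import annotations


def password_problems(password: str, breached: set[str],
                      min_length: int = 8, max_length: int = 64) -> list[str]:
    """Return reasons to reject `password`; an empty list means accept.

    Roughly in the spirit of NIST SP 800-63B: a minimum length, a generous
    maximum (800-63B asks verifiers to permit at least 64 characters), no
    composition rules, and a check against known-compromised values.
    """
    problems = []
    if len(password) < min_length:
        problems.append(
            f"Use at least {min_length} characters; a few random words work well.")
    if len(password) > max_length:
        problems.append(f"Use at most {max_length} characters.")
    if password in breached:
        problems.append("This password appears in breach data; please choose another.")
    # Deliberately absent: "must contain an uppercase letter, a digit and a
    # symbol" rules. The login form calling this should also accept pasted
    # input, so password managers keep working.
    return problems


# Hypothetical breach list; a real system might check a large corpus such as
# the Pwned Passwords data set instead of this toy set.
known_breached = {"password1", "Summer2018!"}
for candidate in ["abc", "Summer2018!", "correct horse battery staple"]:
    print(candidate, "->", password_problems(candidate, known_breached) or "OK")
```

Returning human-readable reasons, rather than a bare pass/fail, is a deliberate choice here: it gives the login page something concrete to show the user, which is the kind of context the talk argues users rarely get.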

And that’s not just security costs; that’s support costs too. It’s a number of things, right? The earlier you get involved, the cheaper it ends up being.

Now, I’m not one for fads.

I don’t like jumping on the latest bandwagon. I’ve seen these things come and go and, unfortunately, and again, not to slag marketing, but when marketing gets a hold of any term, it loses its meaning very, very quickly.

But if you look at the State of DevOps report and at its cycle, it looks oddly like the infinity loop that all the marketing folks are pointing to, saying, “This is DevOps. This is the process,” right?

Regardless of how you map it out, there’s data behind this showing that moving faster and moving smaller, which is really what DevOps is all about, is far more productive.

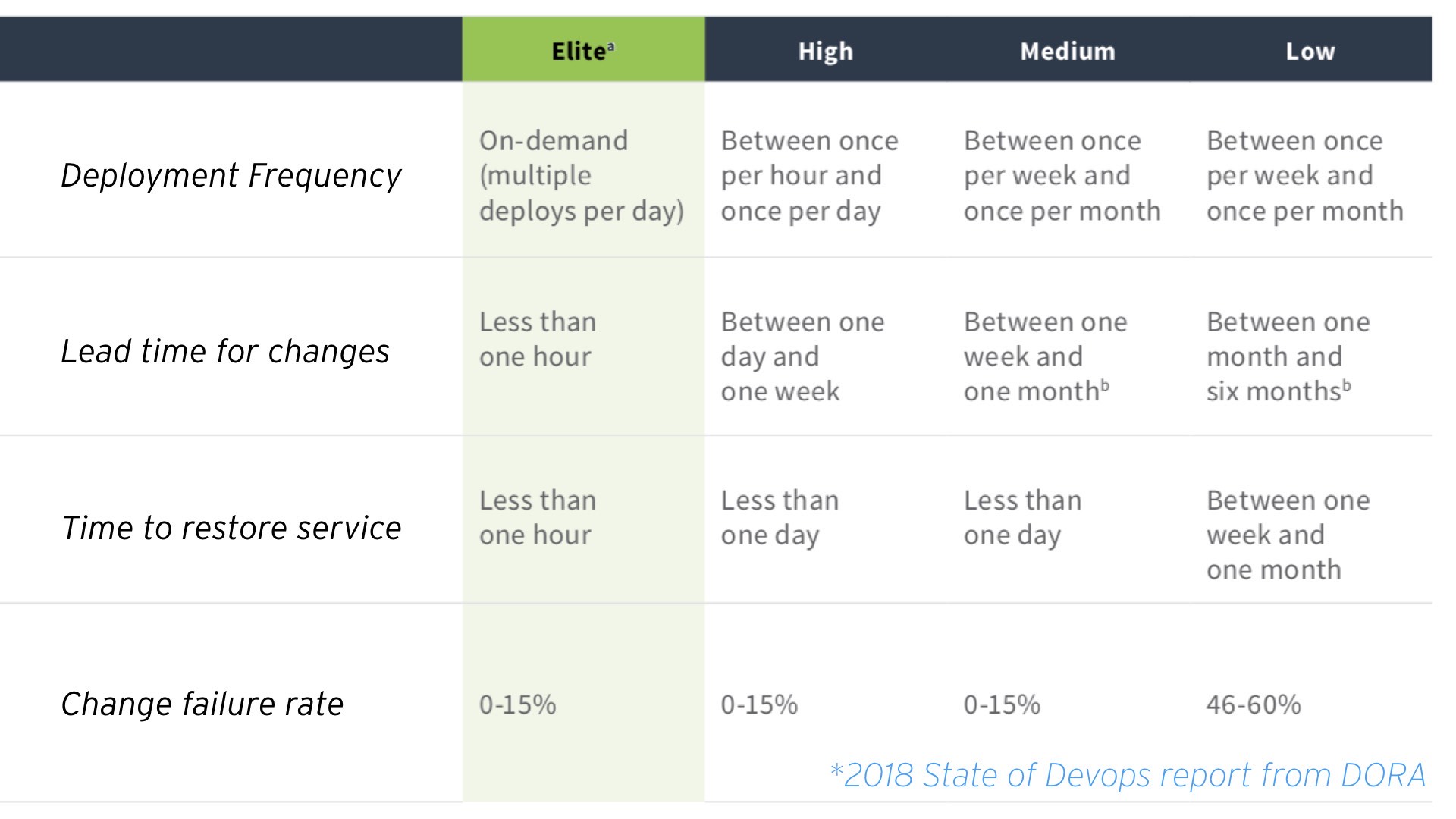

So this is from the 2018 State of DevOps report, from Gene Kim, who I think was a keynote speaker here about three years ago. His team went out and surveyed 30,000 teams globally, okay, across all sorts of verticals.

What they found was that about 7% of these 30,000 respondents could be classified into an elite cohort. These were people who adopted a culture of moving fast and small. Now, from a security perspective, there’s a huge advantage to moving fast and small.

If somebody says, “Hey, we’ve updated our application. This is the latest update, and it has three lines of code that have changed,” it’s far easier to spot a problem than when somebody comes out once a month and says, “Hey, we updated the application. There have been 30,000 lines of code that have changed,” right?

So moving smaller has a security advantage. There are huge business advantages as well, but I wanna call out the security ones here. If you look at the elite cohort here, they’re the green column on the left.

You can see that they’ll deploy multiple times per day into production. It seems scary, but it’s a really good thing, ‘cause these deployments are really, really small.

In fact, they only need about an hour to get a change from concept into production. So think of that as the latest patch: it’s Patch Tuesday, you need some Microsoft updates pushed to Windows, and these teams can do it within an hour.

And they can do it without reducing quality. That’s absolutely critical. We’ve all been in a scenario where a zero-day patch comes in and someone says, “Hey, this is critical. You need to deploy this patch right away.”

The rest of the CIO organization asks, “Hey, have you tested it? Are you sure this isn’t gonna break something?” “You know, it doesn’t matter. This is absolutely critical. It needs to go out.”

A horrible example, not to call out anybody, and I apologize to anybody from Equifax in the room, but Apache Struts 2 was a great example of that.

And we’ve seen that twice this year, where there have been critical vulnerabilities in Struts 2, and that’s a core application framework. And they come out and say, “Hey, you need to deploy this patch right now because it’s a remote code execution. It only takes two lines of attacker code to breach our systems.”

They go, “I don’t care if it breaks anything. You need to push this out immediately.” And then we all kind of scramble. We go through a process that we invent on the fly, even though we’ve planned it out.

We take some level of risk and push out this thing, sidelining normal operations. In an elite cohort team, a team that is used to deploying small, that is not the scenario anymore.

Within an hour, that patch will be out, within the normal process that they use. It’s not an exception anymore. It’s a normal process. It goes through the normal testing.

It goes through the normal quality control checks, and you can see at the bottom that the change failure rate doesn’t actually change from medium all the way to elite.

That’s a critical security statistic.

The quality does not decrease the faster these people move because they’ve embraced things like automated systems. They’re used to automating all these checks so that it’s not a weird scenario where they go, “Well, we’re just gonna ignore all these tests that we’ve built because we don’t have time.”

They’ve invested the time to automate all that testing to ensure that they can do this.

Another critical stat here is time to restore service. Okay? If something went wrong, if that patch actually failed, so if you’re one of the 15% that failed, they can recover within an hour. Normally, that’s by failing forward.

That’s what we’ve been pushing for for years as a security team, to be able to dynamically adapt and respond. Because we know people are gonna make mistakes. We know that there are going to be issues moving forward and we need to prepare for it.

Yet somehow, it’s always a shock. The reason is that we’re not involved early enough, right? If we start to get involved early enough, new options open up. We talked about building encryption in as opposed to using a third-party control for that.

That’s a huge thing.

Also, that raises new questions for us as security people. Can you keep up with fast development cycles? Can you keep up with the fact that production may change six or seven times a day?

In the current way we do things, we can’t, which is why there’s resistance when people say, “Hey, we’re looking at adopting a DevOps culture,” and hopefully they phrase it that way, as opposed to, “I bought a DevOps box.”

If anyone ever says that, by the way, “I bought a DevOps box,” or, “a tool,” it’s time to take a step back. That’s not how this works. It’s a culture movement. It’s a process movement, right?

But for us, we need to adjust how we keep up with this, because it’s a better way to work. We just saw the data, right? We just saw it on the last page that you can restore within an hour and not lose any quality.

We can go through our normal quality control process.

That’s a huge win for security.

That’s a huge win for the business, but it also challenges us as security folks. Are we comfortable talking with other parts of the business?

I mentioned we’re kind of sitting on the side, not invited to the party. There’s a different way of working. There’s a different way of discussion. We’re traditionally known as the house of no.

So when it comes to the great idea, “Hey, I wanna do this, it’s gonna be great. I wanna, you know, start deploying three times a day.” “No.” “Why not.” “Well, no. No real reason. We’re not comfortable with it.”

We normally deny first, right?

We’re very much security people. We’ve absolutely internalized deny by default, only allowing in some exceptions. From a business perspective, we’ve gotten away with it for a very long time, but that’s starting to change.

So let me, let me start to wrap this up for you, because I’m gonna assume by the absolute utter silence that you guys have been processing this. Because it is a very different way to look at security. You might think, “How does this affect me? How does this impact my day-to-day?”

Well, we’ve gotten away with spending $118 billion globally on cybersecurity, despite the evidence, and you’ve seen just a small sample of it, that we’re not really being that effective. Right?

Now, there’s an entire industry popping up around cyber security insurance.

And businesses are looking at this from a risk perspective now and saying, “Well, if I’m not effective at stopping threats, isn’t it better just to insure these assets? And when I inevitably get breached, because inevitably there’s gonna be some sort of attack, can I just recover financially and pay out any liability that way?” Right?

This is shifting, and I’ll say it this way. The advantage of security being a board-level discussion is that we’re getting more visibility. We’re getting called to the table.

We’re finally being invited to the party. The disadvantage is that we as a culture need to adjust how we think about security. It’s no longer, “There’s a potential threat. We absolutely have to stop it no matter what.” We need to adjust our thinking to adapt to the business environment.

We’ve gotten away with being isolated. There have been pros and cons to that. Now that we’re being invited to the party, we need to start to think in business terms. We need to very much ask whether we’re getting value back from our security investment.

So we know we can’t scale. We can’t build out the team. It’s not gonna work. We can’t get the people. We’re not training the people as a, community fast enough. And even if you could, you probably can’t keep them because somebody else can outbid you. Right?

There’s always a bigger company that’s willing to write a bigger check.

As individuals, awesome. Take advantage of it. If you’re not happy where you are, I’m sure there are a ton of opportunities in this room.

I would probably go out on a limb and say 90% of the people in this room are hiring at some level [laughs] in their organization. It’s a great time to be in security, but for us to actually tackle the problem, we’re gonna need to start to automate a lot more of the work that we do.

If you look at your day-to-day work, especially if you’re an incident analyst or an incident responder, the vast majority of the work that you or your SOC does should be automated. Doing it by hand is a waste of time.

You’re sitting there looking at an endless stream of alerts. Maybe one in 10 if you’re lucky, is valuable. More realistically, one in 50. We need to do better from a technology side. We need to automate a lot of this stuff away to take advantage of the humans we do have.

We need to maximize their value.

Second, accept that users aren’t the problem. We need to get away from awareness and start educating the rest of our company, because we can’t take on the workload. You saw that from the demonstration.

These two good sports showed us that two people can’t defend everybody in this room, right? So you need to start educating everybody else so that they can make contextual decisions.

I will give you a fantastic example that we all deal with, whether you’re on PC or Mac: when the operating system prompts you for your administrative password, can you honestly tell me, as a security professional, that there is enough information in that dialog box to make a decision in context?

It just pops up and says, “Give me your admin password.” And you go, “Okay, no problem.” There’s no context. And we do that to our users. We saw that with phishing. We saw that with credentials and passwords. We don’t give people the right education to have the context to make an informed decision.

We need to adjust that. We need to educate the rest of the business.

And finally, we are starting to be invited, which is great, but we need to push ourselves out there. We need to get out and participate more. We need to be more collaborative with the other teams within the organization.

Because, if we start to push our solutions and security thinking earlier into the development cycle, earlier into the product selection cycle, we’re gonna get security by design. Not only is that just better security outcomes, it’s cheaper. 30 times cheaper, if we can get right to the initial stage, but there’s benefits to being in at any stage other than the, “Here, go run this,” right?

There’s a huge business advantage to that. We can stretch our dollars.

So let me leave you with this. I’ll give you an alternative goal for cybersecurity. We saw the CIA triad is sort of our accepted goal.

Let me give you the way I like to say it. I think the goal of everything that we’re doing, everything that everyone in this room does, I think that our goal should be simple. It’s to make sure that the systems in our organization work as intended and only as intended.

And that may not seem like a radically different definition of our goal, but it has some significant implications. Primarily, you can’t do this alone.

You can’t go off in an isolated team, design some controls that sit around all of your systems and still meet this goal of making sure that everything in the business works as intended. You need to get out, you need to collaborate, you need to work with the rest of the organization, because it makes our lives easier.

Ignore the business benefits, that it’s cheaper and more effective and will do the job better; it just gives us a better [laughs] work life. We can do more effective things. We can have a better time of it. And for selfish reasons alone, that’s worth it.

Let alone the fact that it’s 30 times cheaper, that it’s far more effective. And we can actually start to impact statistics like the 170 days that attackers sit on our networks.

So if you take anything away from this, and hopefully, this is a positive takeaway because I’m sorry to have totally bummed you out for an hour, this is our goal.

Make sure that our systems work as intended and only as intended.

Thank you very much. I hope you have a fantastic rest of the day.